OpenSearch

OpenSearch Grafana Data Source

With the OpenSearch Grafana data source plugin, you can run many types of simple or complex OpenSearch queries to visualize logs or metrics stored in OpenSearch. You can also annotate your graphs with log events stored in OpenSearch. The plugin supports Piped Processing Language (PPL) queries and AWS SigV4 authentication for Amazon OpenSearch Service.

Adding the data source

- Install the data source by following the instructions in the Installation tab of this plugin's listing page.

- Open the side menu by clicking the Grafana icon in the top header.

- In the side menu under the Connections link, you should find a link named Data Sources.

- Click the + Add data source button in the top header.

- Search for OpenSearch in the search bar.

Note: If you're not seeing the Data Sources link in your side menu, it means that you do not have the Admin role for the current organization.

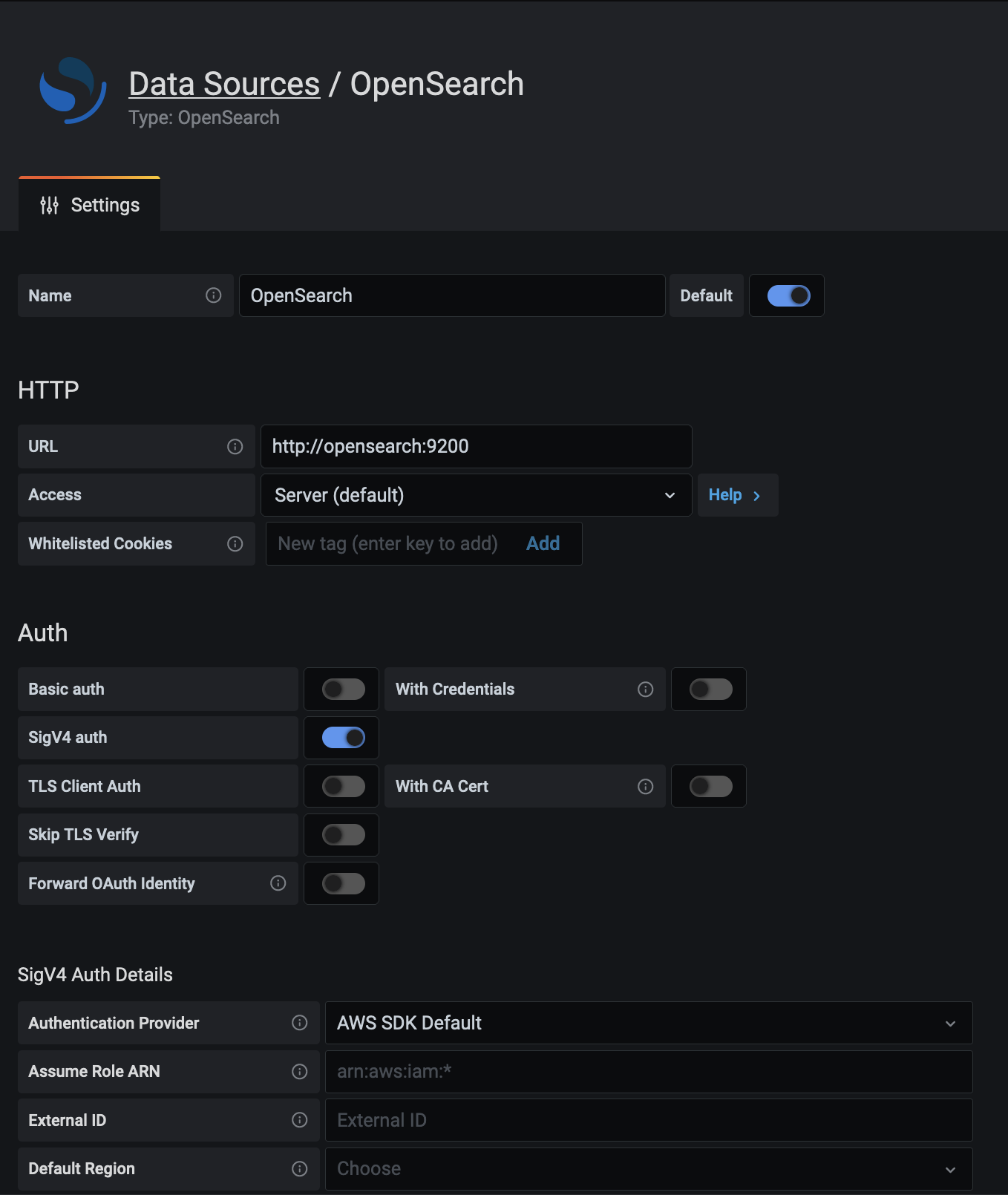

| Name | Description |
|---|---|
| Name | The data source name. This is how you refer to the data source in panels and queries. |
| Default | Default data source means that it will be pre-selected for new panels. |
| Url | The HTTP protocol, IP, and port of your OpenSearch server. |
| Access | Server (default) = URL needs to be accessible from the Grafana backend/server, Browser = URL needs to be accessible from the browser. |

Access mode controls how requests to the data source are handled. Prefer Server unless you have a specific reason to use Browser.

Server access mode (Default)

All requests are made from the browser to the Grafana backend/server, which in turn forwards them to the data source and thereby circumvents possible Cross-Origin Resource Sharing (CORS) requirements. The URL needs to be accessible from the Grafana backend/server if you select this access mode.

Browser (Direct) access

Warning: Browser (Direct) access is deprecated and will be removed in a future release.

All requests will be made from the browser directly to the data source and may be subject to Cross-Origin Resource Sharing (CORS) requirements. The URL needs to be accessible from the browser if you select this access mode.

If you select Browser access you must update your OpenSearch configuration to allow other domains to access OpenSearch from the browser. You do this by specifying these two options in your opensearch.yml config file.

http.cors.enabled: true

http.cors.allow-origin: "*"

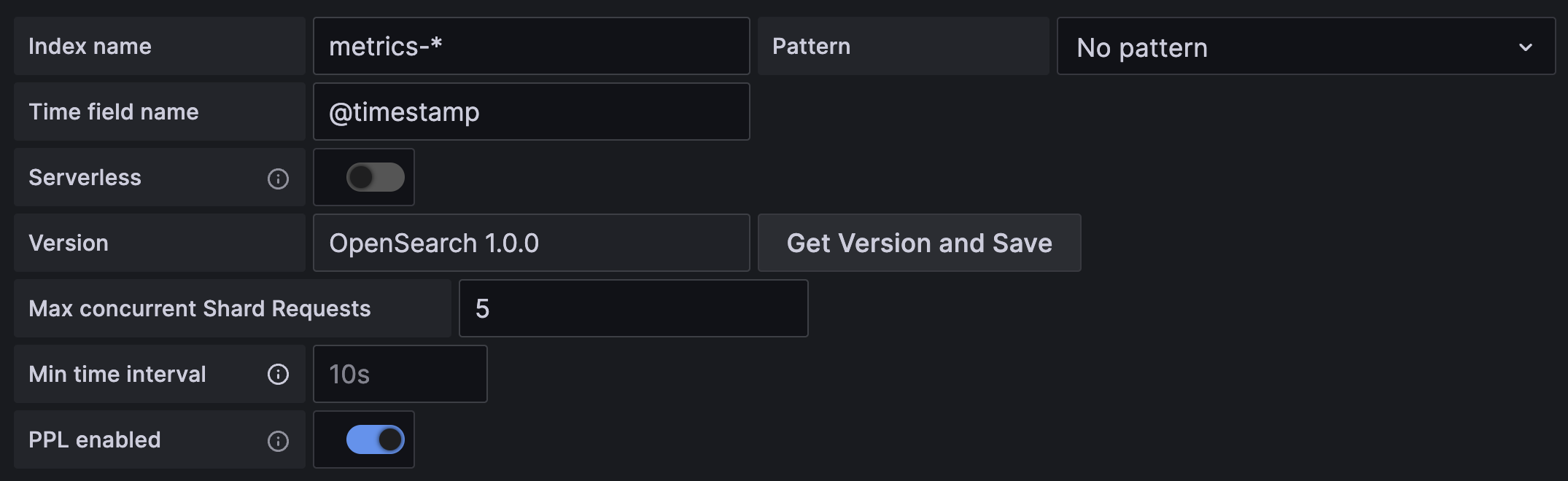

Index settings

Here you can specify a default for the time field and specify the name of your OpenSearch index. You can use a time pattern for the index name or a wildcard.

OpenSearch version

Be sure to click the Get Version and Save button. This is important because queries are composed differently depending on the version.

Min time interval

A lower limit for the auto group by time interval. Recommended to be set to write frequency, for example 1m if your data is written every minute.

This option can also be overridden/configured in a dashboard panel under data source options. It's important to note that this value needs to be formatted as a number followed by a valid time identifier, e.g. 1m (1 minute) or 30s (30 seconds). The following time identifiers are supported:

| Identifier | Description |
|---|---|
| y | year |
| M | month |
| w | week |
| d | day |
| h | hour |
| m | minute |
| s | second |
| ms | millisecond |
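As a rough illustration of the format described above, the following Python sketch checks that an interval string is a whole number followed by one of the listed identifiers. The function name `parse_interval` is hypothetical and this is not the plugin's own parser; whether Grafana accepts other forms (for example fractional numbers) is not covered here.

```python
import re

# Identifiers from the table above, mapped to their descriptions.
UNITS = {
    "y": "year",
    "M": "month",
    "w": "week",
    "d": "day",
    "h": "hour",
    "m": "minute",
    "s": "second",
    "ms": "millisecond",
}

# "ms" is listed before "m" and "s" so the two-letter identifier is tried first.
_INTERVAL = re.compile(r"(\d+)(ms|y|M|w|d|h|m|s)")


def parse_interval(text):
    """Split an interval such as "1m" into (1, "minute"); raise ValueError otherwise."""
    match = _INTERVAL.fullmatch(text)
    if match is None:
        raise ValueError(f"not a valid interval: {text!r}")
    return int(match.group(1)), UNITS[match.group(2)]
```

Note that the identifiers are case-sensitive: `1m` is one minute, while `1M` is one month.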

Logs (BETA)

Only available in Grafana v6.3+.

Two optional parameters on the data source settings page, Message field name and Level field name, determine which fields are used for log messages and log levels when visualizing logs in Explore.

For example, if you're using a default setup of Filebeat for shipping logs to OpenSearch the following configuration should work:

- Message field name: message

- Level field name: fields.level

Data links

Data links create a link from a specified field that can be accessed in logs view in Explore.

Each data link configuration consists of:

- Field - Name of the field used by the data link.

- URL/query - If the link is external, enter the full link URL. If the link is internal, this input serves as the query for the target data source. In both cases, you can interpolate the value from the field with the ${__value.raw} macro.

- Internal link - Select whether the link is internal or external. For an internal link, a data source selector lets you select the target data source. Only tracing data sources are supported.
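To make the interpolation step concrete, here is a minimal Python sketch of what substituting the macro into an external link amounts to. The function name `interpolate_link` and the example URL are hypothetical; Grafana performs this substitution itself, so this only illustrates the idea.

```python
def interpolate_link(template, raw_value):
    """Replace every ${__value.raw} occurrence with the field's raw value."""
    return template.replace("${__value.raw}", str(raw_value))


# Hypothetical external link that points at a trace viewer.
link = interpolate_link("https://tracing.example.com/trace/${__value.raw}", "abc123")
```

The value is inserted as-is, without URL encoding, which is what "raw" suggests.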

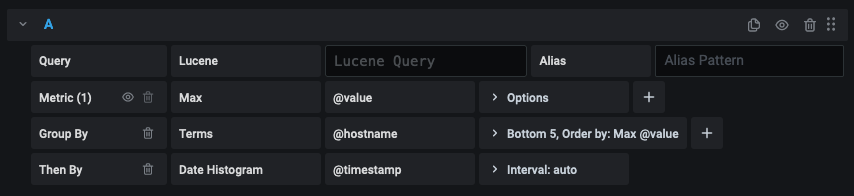

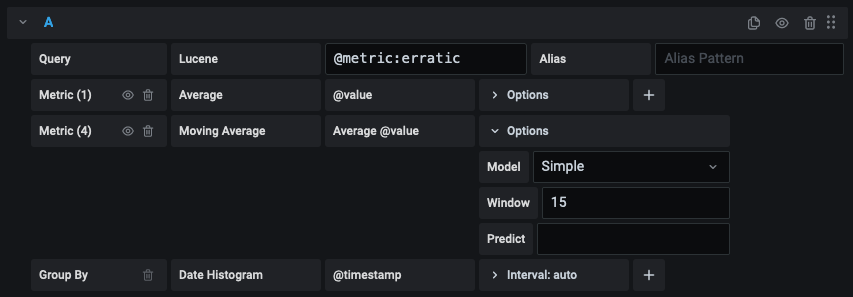

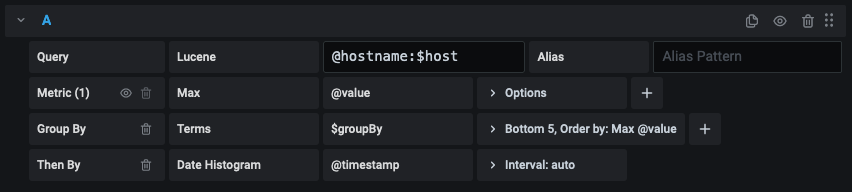

Metric Query editor

The OpenSearch query editor allows you to select multiple metrics and group by multiple terms or filters. Use the plus and minus icons to the right to add/remove metrics or group by clauses. Some metrics and group by clauses have options; click the option text to expand the row to view and edit metric or group by options.

Series naming and alias patterns

You can control the name for time series via the Alias input field.

| Pattern | Description |
|---|---|
| {{term fieldname}} | replaced with value of a term group by |
| {{metric}} | replaced with metric name (ex. Average, Min, Max) |
| {{field}} | replaced with the metric field name |
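The substitution these patterns describe can be sketched in a few lines of Python. This is only an illustration under assumptions: `render_alias` and its parameters are hypothetical names, and the plugin's real implementation may treat whitespace or unknown patterns differently.

```python
import re

# Matches {{ ... }} and captures the text between the braces.
_PLACEHOLDER = re.compile(r"\{\{\s*(.+?)\s*\}\}")


def render_alias(pattern, metric, field, terms):
    """Expand alias patterns; `terms` maps group-by field names to their values."""

    def substitute(match):
        token = match.group(1)
        if token == "metric":
            return metric
        if token == "field":
            return field
        if token.startswith("term "):
            name = token[len("term "):].strip()
            if name in terms:
                return str(terms[name])
        # Unknown patterns are left untouched (an assumption of this sketch).
        return match.group(0)

    return _PLACEHOLDER.sub(substitute, pattern)


name = render_alias(
    "{{term @hostname}} {{metric}} {{field}}",
    metric="Average",
    field="cpu",
    terms={"@hostname": "web01"},
)
```

With these inputs, `name` is `web01 Average cpu`.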

Pipeline metrics

Some metric aggregations are called Pipeline aggregations, for example, Moving Average and Derivative. OpenSearch pipeline metrics require another metric to be based on. Use the eye icon next to the metric to hide metrics from appearing in the graph. This is useful for metrics you only have in the query for use in a pipeline metric.

Templating

Instead of hard-coding things like server, application and sensor name in your metric queries you can use variables in their place. Variables are shown as dropdown select boxes at the top of the dashboard. These dropdowns make it easy to change the data being displayed in your dashboard.

Check out the Templating documentation for an introduction to the templating feature and the different types of template variables.

Query variable

The OpenSearch data source supports two types of queries you can use in the Query field of Query variables. The query is written using a custom JSON string.

| Query | Description |
|---|---|
| {"find": "fields", "type": "keyword"} | Returns a list of field names with the index type keyword. |
| {"find": "terms", "field": "@hostname", "size": 1000} | Returns a list of values for a field using term aggregation. The query uses the current dashboard time range. |
| {"find": "terms", "field": "@hostname", "query": "<lucene query>"} | Returns a list of values for a field using term aggregation and a specified Lucene query filter. The query uses the current dashboard time range. |
| {"find": "terms", "script": "if( doc['@hostname'].value == 'something' ) { return null; } else { return doc['@hostname']}", "query": "<lucene query>"} | Returns a list of values using term aggregation, the script API, and a specified Lucene query filter. The query uses the current dashboard time range. |

If the query is multi-field with both a text and keyword type, use "field":"fieldname.keyword" (sometimes fieldname.raw) to specify the keyword field in your query.

There is a default size limit of 500 on terms queries. Set the size property in your query to set a custom limit.

You can use other variables inside the query. Example query definition for a variable named $host.

{"find": "terms", "field": "@hostname", "query": "@source:$source"}

In the above example, we use another variable named $source inside the query definition. Whenever you change the current value of the $source variable via the dropdown, it triggers an update of the $host variable so that it contains only hostnames filtered by, in this case, the @source document property.

These queries by default return results in term order (which can then be sorted alphabetically or numerically as for any variable).

To produce a list of terms sorted by doc count (a top-N values list), add an orderBy property of "doc_count".

This automatically selects a descending sort; using "asc" with doc_count (a bottom-N list) can be done by setting order: "asc" but is discouraged as it "increases the error on document counts".

To keep terms in the doc count order, set the variable's Sort dropdown to Disabled; you might alternatively still want to use e.g. Alphabetical to re-sort them.

{"find": "terms", "field": "@hostname", "orderBy": "doc_count"}
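If you generate dashboards or variables programmatically, building these JSON strings with a serializer avoids quoting mistakes. The sketch below assumes only the properties described in this section (`find`, `field`, `query`, `size`, `orderBy`); the helper name `terms_query` is hypothetical.

```python
import json


def terms_query(field, query=None, size=None, order_by=None):
    """Build the JSON string for a "terms" query variable."""
    definition = {"find": "terms", "field": field}
    if query is not None:
        definition["query"] = query  # Lucene filter; may reference other variables
    if size is not None:
        definition["size"] = size  # overrides the default limit of 500
    if order_by is not None:
        definition["orderBy"] = order_by  # e.g. "doc_count" for a top-N list
    return json.dumps(definition)


filtered = terms_query("@hostname", query="@source:$source")
top_n = terms_query("@hostname", order_by="doc_count")
```

Paste the resulting string into the Query field of the variable.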

Using variables in queries

There are two syntaxes:

- $<varname> Example: @hostname:$hostname

- [[varname]] Example: @hostname:[[hostname]]

Why two ways? The first syntax is easier to read and write but does not allow you to use a variable in the middle of a word. When the Multi-value or Include all value options are enabled, Grafana converts the labels from plain text to a lucene compatible condition.

In the above example, we have a Lucene query that filters documents based on the @hostname property using a variable named $hostname. It is also using a variable in the Terms group by field input box. This allows you to use a variable to quickly change how the data is grouped.
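To give a feel for what a "Lucene compatible condition" looks like for a multi-value variable, here is a small Python sketch that joins the selected values into a quoted OR group. The exact escaping and output Grafana produces is not shown in this document, so treat both the function name `to_lucene_condition` and the output shape as assumptions.

```python
def to_lucene_condition(values):
    """Join selected values into a quoted OR group, e.g. ("a" OR "b")."""
    # Escape embedded double quotes so each value stays one quoted phrase.
    quoted = ['"' + value.replace('"', '\\"') + '"' for value in values]
    return "(" + " OR ".join(quoted) + ")"


# A query such as @hostname:$hostname with two selected hosts.
query = "@hostname:" + to_lucene_condition(["web01", "web02"])
```

Here `query` becomes `@hostname:("web01" OR "web02")`.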

Annotations

Annotations allow you to overlay rich event information on top of graphs. You add annotation queries via the Dashboard menu / Annotations view. Grafana can query any OpenSearch index for annotation events.

| Name | Description |
|---|---|
| Query | You can leave the search query blank or specify a Lucene query. |
| Time | The name of the time field; it must be a date field. |
| Time End | Optional name of the time end field; it must be a date field. If set, annotations are marked as a region between time and time-end. |
| Text | Event description field. |
| Tags | Optional field name to use for event tags (can be an array or a CSV string). |

Querying Logs

Querying and displaying log data from OpenSearch is available in Explore, and in the logs panel in dashboards. Select the OpenSearch data source, and then optionally enter a lucene query to display your logs.

Piped Processing Language (PPL)

The OpenSearch plugin allows you to run queries using PPL. For more information on PPL syntax, refer to the OpenSearch documentation.

Log Queries

Once the result is returned, the log panel shows a list of log rows and a bar chart where the x-axis shows the time and the y-axis shows the frequency/count.

Note that the fields used for log message and level are based on an optional data source configuration.

Filter Log Messages

Optionally enter a lucene query into the query field to filter the log messages. For example, using a default Filebeat setup you should be able to use fields.level:error to only show error log messages.

Configure the data source with provisioning

You can configure data sources using config files with Grafana's provisioning system. You can read more about how it works and all the settings you can set for data sources on the provisioning docs page.

Here is a provisioning example for this data source:

apiVersion: 1
datasources:
  - name: opensearch
    type: grafana-opensearch-datasource
    url: http://opensearch-cluster-master.opensearch.svc.cluster.local:9200
    basicAuthUser: grafana
    basicAuth: true
    version: 1
    jsonData:
      pplEnabled: false
      version: 2.16.0
      maxConcurrentShardRequests: 5
      flavor: "Opensearch"
      timeField: "@timestamp"
      logMessageField: log # only applicable for logs
      logLevelField: level # only applicable for logs
    secureJsonData:
      basicAuthPassword: ${GRAFANA_OPENSEARCH_PASSWORD}
    editable: true
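If you maintain several environments, you might generate this file rather than edit it by hand. The following Python sketch renders a reduced version of the example using only the standard library. The function name `render_provisioning` and the `http://localhost:9200` URL are hypothetical, and values are inserted verbatim, so they are assumed to be plain YAML scalars that need no quoting.

```python
def render_provisioning(name, url, user, version, time_field="@timestamp"):
    """Return provisioning YAML text for one OpenSearch data source."""
    lines = [
        "apiVersion: 1",
        "datasources:",
        f"  - name: {name}",
        "    type: grafana-opensearch-datasource",
        f"    url: {url}",
        "    basicAuth: true",
        f"    basicAuthUser: {user}",
        "    jsonData:",
        f"      version: {version}",
        f'      timeField: "{time_field}"',
        "    secureJsonData:",
        "      # Left as a placeholder so the password never lands in the file.",
        "      basicAuthPassword: ${GRAFANA_OPENSEARCH_PASSWORD}",
    ]
    return "\n".join(lines) + "\n"


text = render_provisioning("opensearch", "http://localhost:9200", "grafana", "2.16.0")
```

Write `text` to a file in your provisioning directory and set `GRAFANA_OPENSEARCH_PASSWORD` in the environment Grafana runs in.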

Amazon OpenSearch Service

AWS users using Amazon's OpenSearch Service can use this data source to visualize OpenSearch data. If you are using an AWS Identity and Access Management (IAM) policy to control access to your Amazon OpenSearch Service domain, then you must use AWS Signature Version 4 (AWS SigV4) to sign all requests to that domain. For more details on AWS SigV4, refer to the AWS documentation.

AWS Signature Version 4 authentication

Note: Only available in Grafana v7.3+.

In order to sign requests to your Amazon OpenSearch Service domain, SigV4 can be enabled in the Grafana configuration.

Once AWS SigV4 is enabled, it can be configured on the OpenSearch data source configuration page. Refer to AWS authentication for more information about authentication options.

IAM policies for OpenSearch Service

Grafana needs permissions granted via IAM to be able to read OpenSearch Service documents. You can attach these permissions to IAM roles and utilize Grafana's built-in support for assuming roles. Note that you will need to configure the required policy before adding the data source to Grafana.

Depending on the source of the data you'd query with OpenSearch, you may need different permissions. AWS provides some predefined policies that you can check here.

This is an example of a minimal policy you can use to query OpenSearch Service.

NOTE: Update the ARN of the OpenSearch Service resource to match your domain.

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": ["es:ESHttpGet", "es:ESHttpPost"],
      "Resource": "arn:aws:es:{region}:123456789012:domain/{domain_name}"
    }
  ]
}
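Because the only parts that change between domains are the region, account ID, and domain name, the policy is easy to generate. This Python sketch mirrors the example above; the helper name `opensearch_read_policy` and the sample values are hypothetical.

```python
import json


def opensearch_read_policy(region, account_id, domain_name):
    """Return the minimal query policy for one OpenSearch Service domain."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["es:ESHttpGet", "es:ESHttpPost"],
                "Resource": f"arn:aws:es:{region}:{account_id}:domain/{domain_name}",
            }
        ],
    }


# Sample values only; substitute your own region, account, and domain.
policy = opensearch_read_policy("us-east-1", "123456789012", "my-domain")
policy_json = json.dumps(policy, indent=2)
```

`policy_json` can then be attached to the IAM role that Grafana assumes.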

Amazon OpenSearch Serverless

Note: OpenSearch Serverless support is only available in Grafana v9.4+.

Access to OpenSearch Serverless data is controlled by data access policies. These policies can be created via the Console or aws-cli.

Data access policies for OpenSearch Serverless

The following example shows a policy that allows users to query the collection_name collection and the index_name index.

NOTE: Make sure to substitute the correct values for collection_name, index_name, and Principal.

[
  {
    "Rules": [
      {
        "Resource": ["collection/{collection_name}"],
        "Permission": ["aoss:DescribeCollectionItems"],
        "ResourceType": "collection"
      },
      {
        "Resource": ["index/{collection_name}/{index_name}"],
        "Permission": ["aoss:DescribeIndex", "aoss:ReadDocument"],
        "ResourceType": "index"
      }
    ],
    "Principal": ["arn:aws:iam::123456789012:user/{username}"],
    "Description": "read-access"
  }
]
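The same read-access policy can be produced for any collection and index with a short helper. This is a sketch under assumptions: `read_access_policy` is a hypothetical name, the sample collection, index, and user are placeholders, and the caller supplies complete principal ARNs.

```python
import json


def read_access_policy(collection_name, index_name, principals):
    """Return a data access policy that grants read access to one index."""
    return [
        {
            "Rules": [
                {
                    "Resource": [f"collection/{collection_name}"],
                    "Permission": ["aoss:DescribeCollectionItems"],
                    "ResourceType": "collection",
                },
                {
                    "Resource": [f"index/{collection_name}/{index_name}"],
                    "Permission": ["aoss:DescribeIndex", "aoss:ReadDocument"],
                    "ResourceType": "index",
                },
            ],
            "Principal": list(principals),
            "Description": "read-access",
        }
    ]


# Placeholder values; IAM ARNs have an empty region segment.
access_policy = read_access_policy(
    "logs", "app-logs", ["arn:aws:iam::123456789012:user/grafana"]
)
access_policy_json = json.dumps(access_policy, indent=2)
```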

Traces Support

The OpenSearch plugin supports viewing a list of traces in table form, and a single trace in Trace View, which shows the timeline of trace spans.

Note: Querying OpenSearch Traces is only available using Lucene queries.

How to make a trace query using the query editor:

- View all traces:

  - Query (Lucene): leave blank

  - Lucene Query Type: Traces

  - Rerun the query

  - If necessary, select Table visualization type

  - Clicking on a trace ID in the table opens that trace in the Explore panel Trace View

- View single trace:

  - Query: traceId: {traceId}

  - Lucene Query Type: Traces

  - Rerun the query

  - If necessary, select Traces visualization type

Service Map

Version 2.15.0 of the OpenSearch plugin introduces support for visualizing Service Map for OpenSearch traces ingested with Data Prepper.

Note: Service Map for OpenSearch plugin doesn't yet support querying Jaeger trace data stored in OpenSearch in raw form (without Data Prepper).

Service map in Grafana enables customers to view a map of their applications built using microservices architecture. With this map, customers can easily detect performance issues, or increase in error rates in any of their services.

Each service in the map is represented by a circle (node). Numbers on the inside show average latency per service and average throughput per minute. Borders around the node represent error and success rates of operations targeting that service. Clicking on any node opens a dialogue with all the metrics in one place.

Requests between services are represented by arrows between the nodes. Clicking on any arrow opens a dialogue that lists all operations that are involved in the requests between the two services.

Visualizing service map data is available for

- all traces within a time range defined in Grafana

- a single trace when querying traceId

Visualizing service map data:

- For all traces in a time range:

  - Query (Lucene): leave blank

  - Lucene Query Type: Traces

  - Toggle Service Map on

  - Run the query

  - In Explore view, the Node Graph visualization will appear alongside the table of traces

  - If querying from a dashboard panel, select Node Graph from the list of visualizations

- For one trace:

  - Query: traceId: {traceId}

  - Lucene Query Type: Traces

  - Toggle Service Map on

  - Run the query

  - In Explore view, the Node Graph visualization will appear alongside the Trace visualization

  - If querying from a dashboard panel, select Node Graph from the list of visualizations

Note: Querying service map data requires sending additional queries to OpenSearch.

Grafana Cloud Free

- Free tier: Limited to 3 users

- Paid plans: $55 / user / month above included usage

- Access to all Enterprise Plugins

- Fully managed service (not available to self-manage)

Self-hosted Grafana Enterprise

- Access to all Enterprise plugins

- All Grafana Enterprise features

- Self-manage on your own infrastructure

Installing OpenSearch on Grafana Cloud:

Installing plugins on a Grafana Cloud instance is a one-click install; same with updates. Cool, right?

Note that it could take up to 1 minute to see the plugin show up in your Grafana.

For more information, visit the docs on plugin installation.

Installing on a local Grafana:

For local instances, plugins are installed and updated via a simple CLI command. Plugins are not updated automatically, however you will be notified when updates are available right within your Grafana.

1. Install the Data Source

Use the grafana-cli tool to install OpenSearch from the commandline:

grafana-cli plugins install

The plugin will be installed into your grafana plugins directory; the default is /var/lib/grafana/plugins. More information on the cli tool.

Alternatively, you can manually download the .zip file for your architecture below and unpack it into your grafana plugins directory.

2. Configure the Data Source

Accessed from the Grafana main menu, newly installed data sources can be added immediately within the Data Sources section.

Next, click the Add data source button in the upper right. The data source will be available for selection in the Type select box.

To see a list of installed data sources, click the Plugins item in the main menu. Both core data sources and installed data sources will appear.

Change Log

All notable changes to this project will be documented in this file.

2.23.1

- Fix logs display when _source is log message field in #565

2.23.0

- Remove openSearchBackendFlowEnabled toggle in #545

- Make Traces size settable in #550

- Bump the all-node-dependencies group across 1 directory with 16 updates in #560

2.22.4

- Fix: cast trim edges setting from a string to int in #558

- Fix: adhoc filters with number fields in #554

- Terms aggregation: Change min doc count to 1 in #556

- Fix: interval parsing in #553

- Bump the all-go-dependencies group with 2 updates in #548

- Fix: prevent hidden queries from being used as supplementary queries in #551

- Update provisioning to work with docker in #546

2.22.3

- Bump github.com/grafana/grafana-plugin-sdk-go from 0.261.0 to 0.262.0 in the all-go-dependencies group in #542

- Bump the all-node-dependencies group across 1 directory with 35 updates in #540

- Bump github.com/grafana/grafana-plugin-sdk-go from 0.260.3 to 0.261.0 in the all-go-dependencies group in #537

- Bump the all-go-dependencies group across 1 directory with 3 updates in #527

- Bump the npm_and_yarn group with 2 updates in #533

- Bump golang.org/x/crypto from 0.28.0 to 0.31.0 in the go_modules group in #524

- Bump @eslint/plugin-kit from 0.2.1 to 0.2.3 in the npm_and_yarn group in #504

- Add e2e smoke tests in #526

2.22.2

- fix: parse geohash precision as string instead of int in #523

2.22.1

- Add error source, remove some impossible errors in #514

2.22.0

- Bump grafana-plugin-sdk-go to 0.259.4

- Add support for logs volumes in #483

- Opensearch: Replace error source http client with a new error source methods in #505

2.21.5

- Fix: Pass context in Query Data requests in #507

2.21.4

- Fix: Revert to using resource handler for health check in #503

2.21.3

- Upgrade grafana-plugin-sdk-go (deps): Bump github.com/grafana/grafana-plugin-sdk-go from 0.258.0 to 0.259.2

2.21.2

- Fix: backend health check should accept empty index #495

2.21.1

- Fix: build each query response separately #489

- Bump the all-node-dependencies group across 1 directory with 4 updates #482

- Bump the all-github-action-dependencies group with 4 updates #479

2.21.0

2.20.0

- Chore: update dependencies #476

- Migrate Annotation Queries to run through the backend #477

- Upgrade grafana-plugin-sdk-go (deps): Bump github.com/grafana/grafana-plugin-sdk-go from 0.252.0 to 0.256.0 #475

- Docs: Improve provisioning example in README.md #444

- Upgrade grafana-plugin-sdk-go (deps): Bump github.com/grafana/grafana-plugin-sdk-go from 0.250.2 to 0.252.0 #474

- Migrate getting fields to run through the backend #473

- Migrate getTerms to run through the backend #471

2.19.1

- Chore: Update plugin.json keywords in #469

- Fix: handle empty trace group and last updated values in #445

- Dependabot updates in #463

- Bump dompurify from 2.4.7 to 2.5.6

- Bump path-to-regexp from 1.8.0 to 1.9.0

- Bump braces from 3.0.2 to 3.0.3

- Chore: Add Combine PRs workflow to the correct directory in #462

- Chore: Add Combine PRs action in #461

2.19.0

- Reroute service map trace queries to the backend in #459

- Use resource handler to get version in #452

- Bump grafana-aws-sdk to 0.31.2 in #456

- Bump grafana-plugin-sdk-go to 0.250.2 in #456

2.18.0

2.17.4

- Bugfix: Update aws/aws-sdk-go to support Pod Identity credentials in #447

- Bump webpack from 5.89.0 to 5.94.0 in #446

2.17.3

- Bump fast-loops from 1.1.3 to 1.1.4 in #438

- Bump ws from 8.15.1 to 8.18.0 in #439

- Bump micromatch from 4.0.5 to 4.0.8 in #441

- Chore: Rename datasource file #430

- Chore: Add pre-commit hook in #429

2.17.2

- Fix serviceMap when source node doesn't have stats in #428

2.17.1

- Use tagline to detect OpenSearch in compatibility mode in #419

- Fix: use older timestamp format for older elasticsearch in #415

2.17.0

2.16.1

- Send all queries to backend if feature toggle is enabled in #409

2.16.0

- Bugfix: Pass docvalue_fields for elasticsearch in the backend flow in #404

- Use application/x-ndjson content type for multisearch requests in #403

- Refactor ad hoc variable processing in #399

2.15.4

- Chore: Improve error message by handling caused_by.reason error messages in #401

2.15.3

- Fix: Add fields to frame if it does not already exist when grouping by multiple terms in #392

2.15.2

- security: bump grafana-plugin-sdk-go to address CVEs by @njvrzm in https://github.com/grafana/opensearch-datasource/pull/395

2.15.1

- Revert Lucene and PPL migration to backend #8b1e396

2.15.0

- Trace analytics: Implement Service Map feature for traces in #366, #362, #358

- Backend Migration: Run all Lucene queries and PPL logs and table queries on the backend in #375

- Backend Migration: migrate ppl timeseries to backend in #367

- Traces: Direct all trace queries to the BE in #355

- Fix flaky tests in #369

2.14.7

- Fix: data links not working in explore for Trace List queries #353

- Chore: add temporary node graph toggle #350

- Chore: update keywords in plugin.json #347

2.14.6

- Annotation Editor: Fix query editor to support new react annotation handling in #342

2.14.5

- Bugfix: Forward http headers to enable OAuth for backend queries in #345

- Allow to use script in query variable by @loru88 in #344

- Chore: run go mod tidy #338

- Chore: adds basic description and link to github by @sympatheticmoose in #337

2.14.4

- Fix: Move "Build a release" in CONTRIBUTING.md out of TLS section in #324

- Backend Migration: Add data links to responses from the backend in #326

2.14.3

- Update grafana-aws-sdk to 0.22.0 in #323

2.14.2

- Support time field with nanoseconds by Christian Norbert Menges christian.norbert.menges@sap.com in #321

- Refactor tests to remove Enzyme and use react-testing-library in #319

2.14.1

- Upgrade Grafana dependencies and create-plugin config in #315

2.14.0

- Backend refactor and clean by @fridgepoet in #283

- Backend: Add trace list query building by @fridgepoet in #284

- Migrate to create-plugin and support node 18 by @kevinwcyu in #286

- PPL: Execute Explore PPL Table format queries through the backend by @iwysiu in #289

- Bump semver from 7.3.7 to 7.5.2 by @dependabot in #292

- Bump go.opentelemetry.io/contrib/instrumentation/net/http/httptrace/otelhttptrace from 0.37.0 to 0.44.0 by @dependabot in #293

- Bump @babel/traverse from 7.18.6 to 7.23.2 by @dependabot in #297

- Backend: Refactor trace spans (query building + response processing) by @idastambuk in #257

- Refactor Response Parser by @sarahzinger in #309

- Upgrade dependencies by @fridgepoet in #307

- All trace list requests go through backend by @sarahzinger in #310

- Use github app for issue commands workflow by @katebrenner in #312

2.13.1

- Backend: Fix Lucene logs so it only uses date_histogram by @fridgepoet in #277

- Backend: Remove _doc from sort array in query building, Remove limit from response processing by @fridgepoet in #278

2.13.0

- [Explore] Migrate Lucene metric queries to the backend by @fridgepoet as part of https://github.com/grafana/opensearch-datasource/issues/197

- The Lucene metric query type has been refactored to execute through the backend in the Explore view only. Existing Lucene metric queries in Dashboards are unchanged and execute through the frontend. Please report any anomalies observed in Explore by reporting an issue.

2.12.0

- Get filter values with correct time range (requires Grafana 10.2.x) by @iwysiu in https://github.com/grafana/opensearch-datasource/pull/265

- Backend (alerting/expressions only) Lucene metrics: Parse MinDocCount as int or string by @fridgepoet in https://github.com/grafana/opensearch-datasource/pull/268

- Backend (alerting/expressions only) Lucene metrics: Fix replacement of _term to _key in terms order by @fridgepoet in https://github.com/grafana/opensearch-datasource/pull/270

- Backend (alerting/expressions only) Lucene metrics: Remove "size":500 from backend processTimeSeriesQuery by @fridgepoet in https://github.com/grafana/opensearch-datasource/pull/269

2.11.0

- [Explore] Migrate PPL log queries to the backend by @kevinwcyu in https://github.com/grafana/opensearch-datasource/pull/259

- The PPL Logs query type has been refactored to execute through the backend in the Explore view only. Existing PPL Logs queries in Dashboards are unchanged and execute through the frontend. Please report any anomalies observed in Explore by reporting an issue.

2.10.2

- Dependencies update

2.10.1

- Backend: Refactor http client so that it is reused

2.10.0

- [Explore] Migrate Lucene log queries to the backend by @fridgepoet in https://github.com/grafana/opensearch-datasource/pull/228

- The Lucene Logs query type has been refactored to execute through the backend in the Explore view only. Existing Lucene Logs queries in Dashboards are unchanged and execute through the frontend. Please report any anomalies observed in Explore by reporting an issue.

- Apply ad-hoc filters to PPL queries before sending it to the backend by @kevinwcyu in https://github.com/grafana/opensearch-datasource/pull/244

2.9.1

- upgrade @grafana/aws-sdk to fix bug in temp credentials

2.9.0

- Update grafana-aws-sdk to v0.19.1 to add il-central-1 to the opt-in region list

2.8.3

- Fix: convert ad-hoc timestamp filters to UTC for PPL queries in https://github.com/grafana/opensearch-datasource/pull/237

2.8.2

- Add ad hoc filters before sending Lucene queries to backend by @fridgepoet in https://github.com/grafana/opensearch-datasource/pull/225

2.8.1

- Fix template variable interpolation of queries going to the backend by @fridgepoet in https://github.com/grafana/opensearch-datasource/pull/220

2.8.0

- Fix: Take into account raw_data query's Size and Order by @fridgepoet in https://github.com/grafana/opensearch-datasource/pull/210

- Backend: Default to timeField if no field is specified in date histogram aggregation by @fridgepoet in https://github.com/grafana/opensearch-datasource/pull/215

- Backend: Change query sort to respect sort order by @fridgepoet in https://github.com/grafana/opensearch-datasource/pull/211

- Backend: Add raw_document query support by @fridgepoet in https://github.com/grafana/opensearch-datasource/pull/214

v2.7.1

- Dependency update

v2.7.0

- Add raw_data query support to backend by @fridgepoet in https://github.com/grafana/opensearch-datasource/pull/203

v2.6.2

- Backend: Convert tables to data frames by @fridgepoet in https://github.com/grafana/opensearch-datasource/pull/186

- Refactor PPL and Lucene time series response processing to return DataFrames by @idastambuk in https://github.com/grafana/opensearch-datasource/pull/188

- Backend: Use int64 type instead of string for from/to date times by @fridgepoet in https://github.com/grafana/opensearch-datasource/pull/191

v2.6.1

- Backend: Fix SigV4 when creating client by @fridgepoet in https://github.com/grafana/opensearch-datasource/pull/183

v2.6.0

- Ability to select order (Desc/Asc) for "raw data" metrics aggregations by @lvta0909 in https://github.com/grafana/opensearch-datasource/pull/88

- Backend: Set field.Config.DisplayNameFromDS instead of frame.name by @fridgepoet in https://github.com/grafana/opensearch-datasource/pull/180

v2.5.1

- Fix backend pipeline aggregation query parsing and data frame building in https://github.com/grafana/opensearch-datasource/pull/168

v2.5.0

Features and Enhancements:

- OpenSearch version detection added #120

Bug Fixes:

- Fix query editor misalignment (#163)

- Fix use case when a panel has queries of different types (#141)

v2.4.1

Bug Fixes:

- Security: Upgrade Go in build process to 1.20.4

- Update grafana-plugin-sdk-go version to 0.161.0

v2.4.0

Features and Enhancements:

- Support for Trace Analytics #122, @idastambuk, @katebrenner, @iwysiu, @sarahzinger

Bug Fixes:

- Update Backend Dependencies #148, @fridgepoet

- Fix view of nested array field in table column #128, @z0h3

v2.3.0

- Add 'Use time range' option, skip date type field validation by @z0h3 in https://github.com/grafana/opensearch-datasource/pull/125

- Create httpClient with grafana-plugin-sdk-go methods by @fridgepoet in https://github.com/grafana/opensearch-datasource/pull/118

v2.2.0

- Fix moving_avg modes to correctly parse coefficients as floats (alpha, beta, and gamma) (#99)

- Use grafana-aws-sdk v0.12.0 to update opt-in regions list (#102)

v2.1.0

Enhancements

- Add option to query OpenSearch serverless (#92)

v2.0.4

Bug fixes

- Backend: Fix index being read from the wrong place (#80)

v2.0.3

Bug fixes

- Fixed missing custom headers (#73)

v2.0.2

Enhancements

- Upgrade of grafana-aws-sdk to 0.11.0 (#69)

v2.0.1

Bug fixes

- Fixed timestamps in the backend being handled wrong (#31)

- Fixed timestamps in the frontend being assumed as local, whereas they should be UTC (#21, #66)

v2.0.0

Features and enhancements

- Upgrade of @grafana/data, @grafana/ui, @grafana/runtime, @grafana/toolkit to 9.0.2 (#46)

Breaking Changes

- Use SIGV4ConnectionConfig from @grafana/ui (#48)

v1.2.0

Features and enhancements

- Upgrade of @grafana/data, @grafana/ui, @grafana/runtime, @grafana/toolkit to 8.5.5 (#35, #41)

- Upgrade of further frontend and backend dependencies (#42, #43)

v1.1.2

Bug fixes

- Improve error handling

- Fix alias pattern not being correctly handled by Query Editor

v1.1.0

New features

- Add support for Elasticsearch databases (2f9e802)

v1.0.0

- First Release