Important: This documentation is about an older version. It's relevant only to the release noted; many of the features and functions have been updated or replaced. Please view the current version.

Introduction to Alerting

Whether you’re just starting out or you’re a more experienced user of Grafana Alerting, learn more about the fundamentals and available features that help you create, manage, and respond to alerts, and improve your team’s ability to resolve issues quickly.

Tip

For a hands-on introduction, refer to our tutorial to get started with Grafana Alerting.

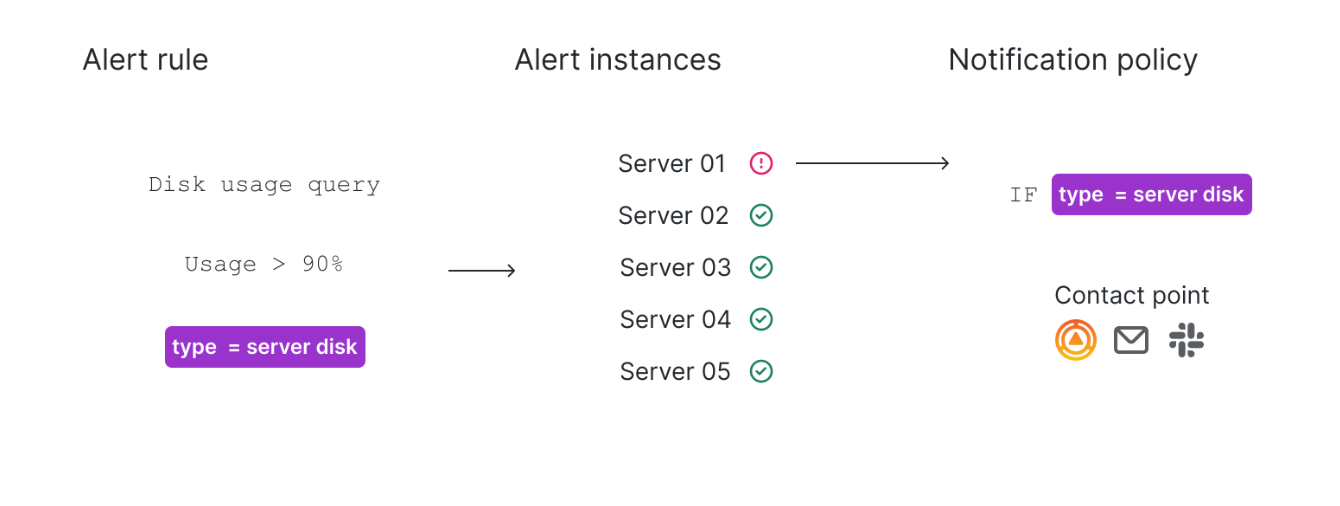

The following diagram gives you an overview of Grafana Alerting and introduces you to some of the fundamental features that are the principles of how Grafana Alerting works.

How it works at a glance

- Grafana Alerting periodically queries data sources and evaluates the condition defined in the alert rule

- If the condition is breached, an alert instance fires

- Firing and resolved alert instances are routed to notification policies based on matching labels

- Notifications are sent out to the contact points specified in the notification policy

Fundamentals

The following concepts are key to your understanding of how Grafana Alerting works.

Alert rules

An alert rule consists of one or more queries and expressions that select the data you want to measure. It also contains a condition, which is the threshold that an alert rule must meet or exceed in order to fire.

Add labels to uniquely identify your alert rule and configure alert routing. Labels link alert rules to notification policies, so you can easily manage which policy should handle which alerts and who gets notified.

Once alert rules are created, they go through various states and transitions.

Alert instances

Each alert rule can produce multiple alert instances (also known as alerts), one for each time series. This is powerful because a single expression can observe multiple series.

    sum by(cpu) (
      rate(node_cpu_seconds_total{mode!="idle"}[1m])
    )

A rule using the PromQL expression above creates one alert instance for each CPU being observed after the first evaluation, so a single rule reports the status of every CPU.
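To make the one-instance-per-series idea concrete, here is a minimal Python sketch. It is an illustration only, not how Grafana evaluates rules internally: the sample values, the `THRESHOLD` of 0.9, and the helper names `sum_by_cpu` and `evaluate` are all assumptions made up for this example, and the states are reduced to "firing" and "normal".

```python
from collections import defaultdict

# Hypothetical samples: per-second CPU rates, labelled by cpu and mode.
samples = [
    ({"cpu": "0", "mode": "user"}, 0.40),
    ({"cpu": "0", "mode": "system"}, 0.15),
    ({"cpu": "0", "mode": "idle"}, 0.45),
    ({"cpu": "1", "mode": "user"}, 0.70),
    ({"cpu": "1", "mode": "system"}, 0.25),
    ({"cpu": "1", "mode": "idle"}, 0.05),
]

THRESHOLD = 0.9  # assumed condition: non-idle rate above 0.9


def sum_by_cpu(samples):
    """Mimic sum by(cpu) over series where mode != "idle"."""
    totals = defaultdict(float)
    for labels, value in samples:
        if labels["mode"] != "idle":
            totals[labels["cpu"]] += value
    return dict(totals)


def evaluate(samples, threshold):
    """Return one alert instance per resulting series (one per cpu label)."""
    return [
        {
            "labels": {"cpu": cpu},
            "value": value,
            "state": "firing" if value > threshold else "normal",
        }
        for cpu, value in sorted(sum_by_cpu(samples).items())
    ]


instances = evaluate(samples, THRESHOLD)
for instance in instances:
    print(instance["labels"], instance["state"])
```

With these made-up samples, the single rule yields two instances: the one for CPU 1 fires while the one for CPU 0 stays normal.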

Alert rules are frequently evaluated and the state of their alert instances is updated accordingly. Only alert instances that are in a firing or resolved state are routed to notification policies to be handled.

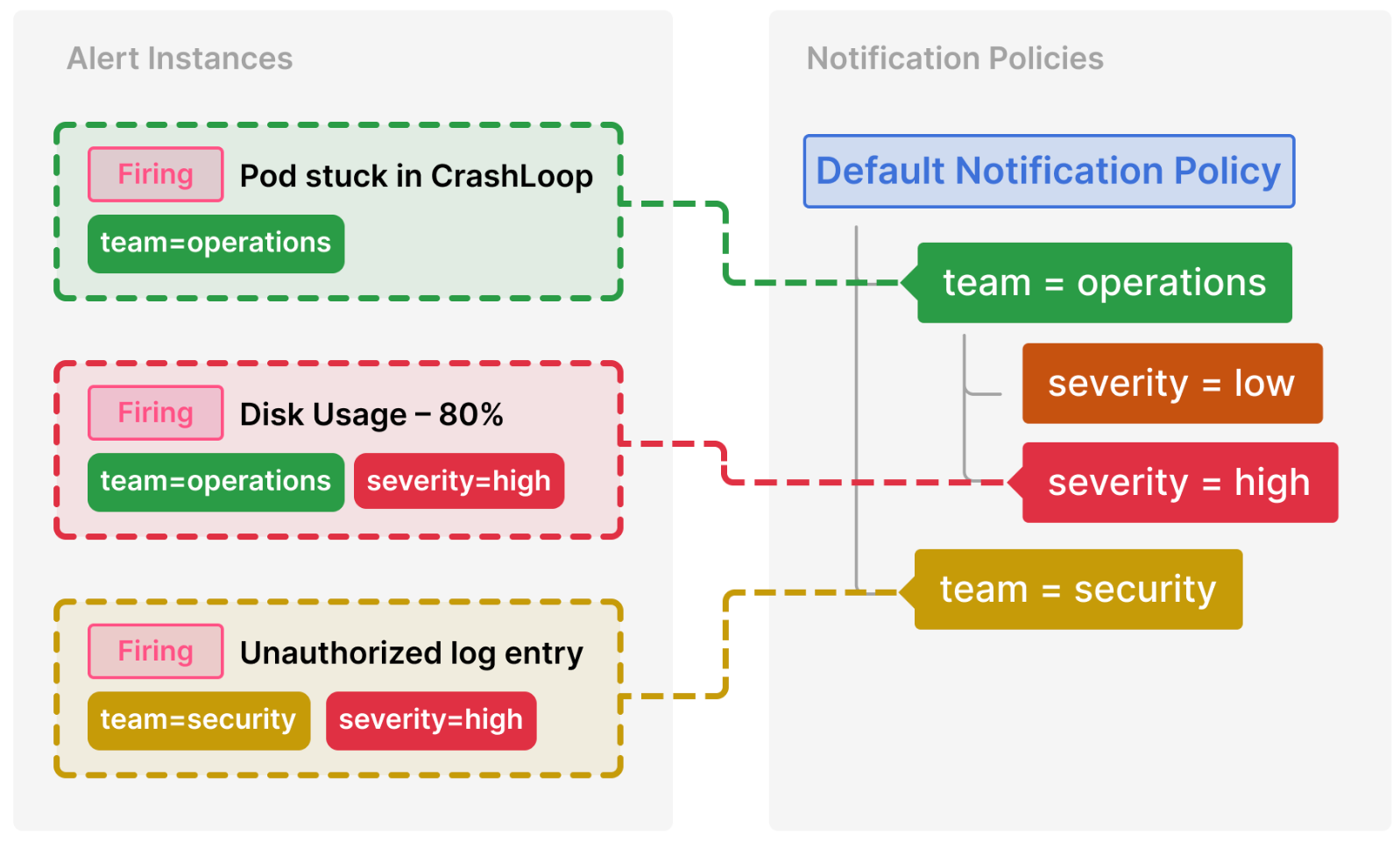

Notification policies

Notification policies group alerts and then route them to contact points. They determine when notifications are sent, and how often notifications should be repeated.

Alert instances are matched to notification policies using label matchers. This provides a flexible way to organize and route alerts to different receivers.

Each policy consists of a set of label matchers (0 or more) that specify which alert instances (identified by their labels) they handle. Notification policies are defined as a tree structure where the root of the notification policy tree is called the Default notification policy. Each policy can have child policies.
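The tree-shaped matching described above can be sketched in a few lines of Python. This is a simplified model under stated assumptions, not Grafana's implementation: the `Policy` class, the `route` function, and the contact point names are hypothetical, matchers are limited to exact label equality, and the first matching child always wins. The real system offers more matcher types and routing options.

```python
from dataclasses import dataclass, field


@dataclass
class Policy:
    contact_point: str
    # label name -> required value; an empty dict matches every alert
    matchers: dict = field(default_factory=dict)
    children: list = field(default_factory=list)

    def matches(self, labels):
        return all(labels.get(k) == v for k, v in self.matchers.items())


def route(policy, labels):
    """Descend into the first matching child; fall back to the current policy."""
    for child in policy.children:
        if child.matches(labels):
            return route(child, labels)
    return policy.contact_point


# The root plays the role of the Default notification policy (no matchers).
default_policy = Policy(
    contact_point="email-everyone",
    children=[
        Policy(
            "slack-infra",
            {"team": "infra"},
            [Policy("pagerduty-infra", {"severity": "critical"})],
        ),
        Policy("slack-app", {"team": "app"}),
    ],
)

print(route(default_policy, {"team": "infra", "severity": "critical"}))
print(route(default_policy, {"team": "infra", "severity": "warning"}))
print(route(default_policy, {"team": "db"}))
```

An alert whose labels match no child policy falls back to the root, which is why the default policy needs a contact point of its own.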

Contact points

Contact points determine where notifications are sent. For example, you might have a contact point that sends notifications to an email address, to Slack, to an incident management system (IRM) such as Grafana OnCall or PagerDuty, or to a webhook.

Notifications sent from contact points are customizable with notification templates, which can be shared between contact points.

Silences and mute timings

Silences and mute timings allow you to pause notifications for specific alerts or even entire notification policies. Use a silence to pause notifications on an ad-hoc basis, such as during a maintenance window. Use mute timings to pause notifications at regular intervals, such as evenings and weekends.
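The difference between the two can be illustrated with a small Python sketch: a silence is a one-off time window tied to label matchers, while a mute timing is a recurring interval. Everything here is an assumption made for illustration (the `Silence` and `MuteTiming` classes, the `should_notify` helper, the equality-only matchers, and applying mute timings globally rather than per notification policy); it does not reflect Grafana's data model.

```python
from dataclasses import dataclass
from datetime import datetime, time


@dataclass
class Silence:
    """One-off window that suppresses alerts with matching labels."""
    matchers: dict
    starts_at: datetime
    ends_at: datetime

    def suppresses(self, labels, now):
        in_window = self.starts_at <= now < self.ends_at
        return in_window and all(
            labels.get(k) == v for k, v in self.matchers.items()
        )


@dataclass
class MuteTiming:
    """Recurring interval, for example weekends or evenings."""
    weekdays: set  # 0 = Monday ... 6 = Sunday
    start: time
    end: time

    def suppresses(self, now):
        return now.weekday() in self.weekdays and self.start <= now.time() <= self.end


def should_notify(labels, now, silences, mute_timings):
    if any(s.suppresses(labels, now) for s in silences):
        return False
    if any(m.suppresses(now) for m in mute_timings):
        return False
    return True


# Hypothetical maintenance window for one database host (a Tuesday).
silences = [
    Silence({"instance": "db-1"}, datetime(2024, 3, 5, 10), datetime(2024, 3, 5, 12))
]
# Hypothetical recurring mute: all day Saturday and Sunday.
mute_timings = [MuteTiming({5, 6}, time.min, time.max)]

print(should_notify({"instance": "db-1"}, datetime(2024, 3, 5, 11), silences, mute_timings))
print(should_notify({"instance": "db-2"}, datetime(2024, 3, 5, 11), silences, mute_timings))
print(should_notify({"instance": "db-2"}, datetime(2024, 3, 2, 11), silences, mute_timings))
```

In both cases the alert rule keeps being evaluated; only the notification is held back.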

Architecture

Grafana Alerting is built on the Prometheus model of designing alerting systems.

Prometheus-based alerting systems have two main components:

- An alert generator that evaluates alert rules and sends firing and resolved alerts to the alert receiver.

- An alert receiver (also known as Alertmanager) that receives the alerts and is responsible for handling them and sending their notifications.
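The split between the two components can be sketched as two small Python classes. This is a toy model of the division of responsibilities only: the class names, the `HighCPU` rule, and the message format are hypothetical, and a real alert receiver would also group, route, and deduplicate alerts before notifying.

```python
class AlertReceiver:
    """Stands in for an Alertmanager: receives alerts and handles notifications."""

    def __init__(self):
        self.notifications = []

    def receive(self, alert):
        # Simplification: every received alert becomes one notification.
        self.notifications.append(f"{alert['state']}: {alert['name']}")


class AlertGenerator:
    """Evaluates rules and sends firing and resolved alerts to the receiver."""

    def __init__(self, rules, receiver):
        self.rules = rules  # rule name -> predicate over a metrics dict
        self.receiver = receiver
        self.firing = set()

    def evaluate(self, metrics):
        for name, condition in self.rules.items():
            if condition(metrics):
                self.firing.add(name)
                self.receiver.receive({"name": name, "state": "firing"})
            elif name in self.firing:
                # The condition no longer holds: report the alert as resolved.
                self.firing.remove(name)
                self.receiver.receive({"name": name, "state": "resolved"})


receiver = AlertReceiver()
generator = AlertGenerator({"HighCPU": lambda m: m["cpu"] > 0.9}, receiver)

# Three evaluation rounds with made-up readings.
for cpu in (0.5, 0.95, 0.4):
    generator.evaluate({"cpu": cpu})

print(receiver.notifications)
```

Keeping the generator unaware of how notifications are delivered is what allows the two components to be swapped independently.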

Grafana doesn’t use Prometheus as its default alert generator because Grafana Alerting needs to work with many other data sources in addition to Prometheus.

However, Grafana can also use Prometheus as an alert generator and can send alerts to external Alertmanagers. For more information about how to use distinct alerting systems, refer to the Grafana alert rule types.

Design your Alerting system

Monitoring complex IT systems and understanding whether everything is up and running correctly is a difficult task. Setting up an effective alert management system is therefore essential to inform you when things are going wrong before they start to impact your business outcomes.

Designing and configuring an alert management setup that works takes time.

Here are some tips on how to create an effective alert management setup for your business:

Which are the key metrics for your business that you want to monitor and alert on?

- Find events that are important to know about and not so trivial or frequent that recipients ignore them.

- Alerts should only be created for big events that require immediate attention or intervention.

- Consider quality over quantity.

Which type of Alerting do you want to use?

- Choose Grafana-managed Alerting, Grafana Mimir or Loki-managed Alerting, or both.

How do you want to organize your alerts and notifications?

- Be selective about who you set to receive alerts. Consider sending them to whoever is on call or a specific Slack channel.

- Automate as far as possible using the Alerting API or alerts as code (Terraform).

How can you reduce alert fatigue?

- Avoid noisy, unnecessary alerts by using silences, mute timings, or pausing alert rule evaluation.

- Continually tune your alert rules and review their effectiveness. Remove duplicate or ineffective alert rules.

- Think carefully about priority and severity levels.

- Continually review your thresholds and evaluation rules.