Upgrade Kubernetes Monitoring

Choose the instructions below that match your existing Kubernetes Monitoring configuration.

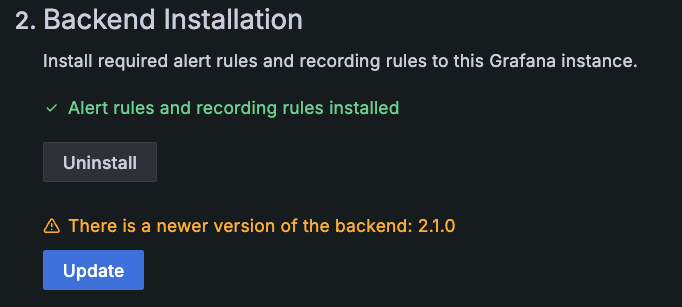

Update Kubernetes Monitoring features

When a new version of alerting rules and recording rules becomes available, an Update button is available within step 2 of the configuration steps on the Cluster configuration tab. Click this button to install the latest alerting and recording rules to your Grafana instance.

If you receive an error that the upgrade failed, refer to the troubleshooting instructions.

Upgrade Kubernetes Monitoring Helm chart

If you previously configured Kubernetes Monitoring with the Kubernetes Monitoring Helm chart (including chart versions that deployed Grafana Agent in Flow mode) and want to upgrade to the latest configuration, copy and run the following to upgrade to the newer version of the Helm chart:

helm repo update

helm upgrade grafana-k8s-monitoring grafana/k8s-monitoring --namespace "${NAMESPACE}" --values <your values file>

Also refer to the breaking change announcements for the Helm chart.
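Before upgrading, it can help to compare the chart version that is currently installed with the newest version in the repository. The following is a minimal sketch, assuming the release name grafana-k8s-monitoring and the grafana repository alias used in the command above; the function name show_chart_versions is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the installed release and the newest chart version
# available in the local Helm repository cache.
# Assumes the release is named grafana-k8s-monitoring and the repo alias is "grafana".
show_chart_versions() {
  local namespace="$1"
  if [ -z "$namespace" ]; then
    echo "usage: show_chart_versions NAMESPACE" >&2
    return 1
  fi

  echo "Installed release:"
  # helm list --filter matches release names against a regular expression.
  helm list --namespace "$namespace" --filter '^grafana-k8s-monitoring$'

  echo "Latest chart in repository:"
  # Refresh the local cache so the search reflects the newest published chart.
  helm repo update >/dev/null
  helm search repo grafana/k8s-monitoring
}
```

For example, run `show_chart_versions "${NAMESPACE}"` and compare the CHART column of the first table with the CHART VERSION column of the second before running the upgrade.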

Migrate from static Grafana Agent configuration

If you previously deployed Grafana Agent in static mode and want to migrate to the latest Kubernetes Monitoring configuration, which uses Grafana Alloy, complete the following steps to remove the agent and its supporting systems before deploying the Grafana Kubernetes Monitoring Helm chart.

Note

If you have customized your Agent configuration (for example, by adding Kubernetes integrations to scrape local services or by adding scrape targets for your own application metrics, logs, or traces), you must add these customizations again after deploying the Kubernetes Monitoring Helm chart. Save your existing configuration, and refer to the instructions for deploying integrations and for setting up Application Observability.

Save custom configuration

If you customized your Grafana Agent configuration to add metric sources, log sources, relabeling rules, or any other changes, save your config file outside of your cluster.

Note

If you have written custom relabeling rules for the static mode of Grafana Agent, you must rewrite these rules for Grafana Agent in Flow mode. Refer to Filter Prometheus metrics for more information.
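One way to save the static mode configuration outside the cluster is to export the ConfigMaps that the removal steps below delete (grafana-agent for metrics and grafana-agent-logs for logs). This is a sketch, assuming those default ConfigMap names; the function name backup_agent_configmaps and the output file names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: export the static mode Grafana Agent ConfigMaps to local files
# before they are deleted. Assumes the default names grafana-agent and grafana-agent-logs.
backup_agent_configmaps() {
  local namespace="$1" dest_dir="${2:-.}"
  if [ -z "$namespace" ]; then
    echo "usage: backup_agent_configmaps NAMESPACE [DEST_DIR]" >&2
    return 1
  fi
  mkdir -p "$dest_dir" || return 1

  local name
  for name in grafana-agent grafana-agent-logs; do
    # Write each ConfigMap as YAML; report (but do not abort on) missing ones.
    if kubectl get configmap "$name" -n "$namespace" -o yaml > "$dest_dir/$name.yaml"; then
      echo "saved $dest_dir/$name.yaml"
    else
      echo "could not export configmap/$name" >&2
      rm -f "$dest_dir/$name.yaml"
    fi
  done
}
```

For example, `backup_agent_configmaps default ./agent-backup` writes one YAML file per ConfigMap that exists in the default namespace.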

Export common variables

Copy and run the following to set the variables used throughout the remaining steps.

# Set this to the namespace where Grafana Agent was deployed

export NAMESPACE="default"

# This will extract the installed Grafana Agent version (e.g. "v0.34.1")

export AGENT_VERSION=$(kubectl get statefulset grafana-agent -n "${NAMESPACE}" -o jsonpath='{$.spec.template.spec.containers[:1].image}' | sed -e 's/.*grafana\/agent:\(v[.0-9]*\).*/\1/')

Remove Grafana Agent for Metrics

Copy and run the following to remove the grafana-agent StatefulSet and the associated ConfigMap that were used for scraping metrics.

MANIFEST_URL=https://raw.githubusercontent.com/grafana/agent/${AGENT_VERSION}/production/kubernetes/agent-bare.yaml /bin/sh -c "$(curl -fsSL https://raw.githubusercontent.com/grafana/agent/${AGENT_VERSION}/production/kubernetes/install-bare.sh)" | kubectl delete -f -

kubectl delete configmap grafana-agent -n "${NAMESPACE}"

Remove Grafana Agent for Logs

Copy and run the following to remove the grafana-agent-logs DaemonSet and the associated ConfigMap that were used for collecting logs.

MANIFEST_URL=https://raw.githubusercontent.com/grafana/agent/${AGENT_VERSION}/production/kubernetes/agent-loki.yaml /bin/sh -c "$(curl -fsSL https://raw.githubusercontent.com/grafana/agent/${AGENT_VERSION}/production/kubernetes/install-bare.sh)" | kubectl delete -f -

kubectl delete configmap grafana-agent-logs -n "${NAMESPACE}"

Remove supporting metric systems

Copy and run the following to remove the supporting metric systems (kube-state-metrics, Node Exporter, and OpenCost). The Kubernetes Monitoring Helm chart redeploys them.

helm delete ksm -n "${NAMESPACE}"

helm delete nodeexporter -n "${NAMESPACE}"

helm delete opencost -n "${NAMESPACE}"

Check cardinality

As a best practice, after upgrading, check the current metrics usage and associated costs in the billing and usage dashboard in your Grafana instance to confirm that the collected metrics are what you expect.

Refer to Metrics control and management for more details.
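If you also want to see which metric names contribute the most series, you can query your hosted Prometheus endpoint through the standard Prometheus HTTP API (/api/v1/query). This sketch assumes that PROM_QUERY_URL holds your Grafana Cloud Prometheus query endpoint (the same value used for the OpenCost url setting later on this page), PROM_USER is your numeric Prometheus user ID, and PROM_TOKEN is an access policy token that can read metrics. These variable names and the function top_series_by_metric are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: list the metric names with the most active series.
# Assumes PROM_QUERY_URL, PROM_USER, and PROM_TOKEN are set (see the lead-in).
top_series_by_metric() {
  local limit="${1:-10}"
  if [ -z "${PROM_QUERY_URL:-}" ] || [ -z "${PROM_USER:-}" ] || [ -z "${PROM_TOKEN:-}" ]; then
    echo "set PROM_QUERY_URL, PROM_USER, and PROM_TOKEN first" >&2
    return 1
  fi
  # count by (__name__) groups every series by metric name; topk keeps the largest groups.
  # --data-urlencode sends the query as a form-encoded POST body.
  curl -fsS -u "${PROM_USER}:${PROM_TOKEN}" \
    "${PROM_QUERY_URL%/}/api/v1/query" \
    --data-urlencode "query=topk(${limit}, count by (__name__) ({__name__=~\".+\"}))"
}
```

The response is the JSON result of the query. Because the selector matches every series, this query can be expensive on large deployments, so prefer the billing and usage dashboard for routine checks.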

Migrate from Grafana Agent Operator configuration

If you previously deployed Grafana Agent in Operator mode and want to migrate to the latest Kubernetes Monitoring configuration, which uses Grafana Alloy, complete the following steps to clean up before deploying the Kubernetes Monitoring Helm chart.

Note

If you deployed additional ServiceMonitors, PodMonitors, PodLogs, or Integration objects to customize your Grafana Agent configuration (for example, Kubernetes integrations to scrape local services or scrape targets for your own application metrics, logs, or traces), you must add them again after deploying the Kubernetes Monitoring Helm chart. Save your existing configuration, and refer to the instructions for deploying integrations and for setting up Application Observability.

Persist a custom configuration

If you customized your Agent configuration with additional Integration objects:

- Refer to the Agent Flow mode documentation for the Flow-equivalent components.

- Add the components to the .extraConfig value in the Kubernetes Monitoring Helm chart.

If you are using additional PodMonitor or ServiceMonitor objects, no change is necessary. The Grafana Agent deployed by the Kubernetes Monitoring Helm chart still detects and utilizes those objects.
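Before you remove anything, a sketch like the following exports your PodMonitor, ServiceMonitor, PodLogs, and Integration objects from every namespace to YAML files, assuming the monitoring.coreos.com and monitoring.grafana.com API groups of the Operator CRDs listed later on this page. Exporting first also gives you a copy to re-apply if those CRDs are deleted, because deleting a CustomResourceDefinition also deletes all objects of that kind. The function name backup_operator_resources and the file names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: export Operator custom resources from all namespaces to YAML files.
backup_operator_resources() {
  local dest_dir="${1:-.}"
  mkdir -p "$dest_dir" || return 1

  local resource
  for resource in \
    podmonitors.monitoring.coreos.com \
    servicemonitors.monitoring.coreos.com \
    podlogs.monitoring.grafana.com \
    integrations.monitoring.grafana.com; do
    # --all-namespaces captures objects outside the Agent namespace as well.
    if kubectl get "$resource" --all-namespaces -o yaml > "$dest_dir/$resource.yaml"; then
      echo "saved $dest_dir/$resource.yaml"
    else
      echo "could not export $resource" >&2
      rm -f "$dest_dir/$resource.yaml"
    fi
  done
}
```

The exported files include cluster-assigned metadata such as resourceVersion and uid, which you may need to remove before re-applying the objects to a cluster where they no longer exist.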

Export common variables

Copy and run the following to set the variable used throughout the remaining steps.

# Set this to the namespace where Grafana Agent was deployed

export NAMESPACE="default"

Remove monitoring objects

Next, you must remove all the objects deployed during the Agent Operator deployment process:

| Kind | Name |
|---|---|
| ServiceAccount | grafana-agent |
| ClusterRole | grafana-agent |
| ClusterRoleBinding | grafana-agent |
| GrafanaAgent | grafana-agent |
| Secret | metrics-secret |
| Integration | node-exporter |
| MetricsInstance | grafana-agent-metrics |
| ServiceMonitor | cadvisor-monitor |
| ServiceMonitor | kubelet-monitor |
| ServiceAccount | kube-state-metrics |
| ClusterRole | kube-state-metrics |
| ClusterRoleBinding | kube-state-metrics |
| Service | kube-state-metrics |
| Deployment | kube-state-metrics |
| ServiceMonitor | ksm-monitor |
| Secret | logs-secret |
| LogsInstance | grafana-agent-logs |
| PodLogs | kubernetes-logs |
| PersistentVolumeClaim | agent-eventhandler |
| Integration | agent-eventhandler |
| ServiceAccount | opencost |
| ClusterRole | opencost |
| ClusterRoleBinding | opencost |
| Service | opencost |
| Deployment | opencost |
| ServiceMonitor | opencost |

Copy and run the following:

kubectl delete -n "${NAMESPACE}" \
  serviceaccount/grafana-agent \
  clusterrole/grafana-agent \
  clusterrolebinding/grafana-agent \
  grafanaagent/grafana-agent \
  secret/metrics-secret \
  integration/node-exporter \
  metricsinstance/grafana-agent-metrics \
  servicemonitor/cadvisor-monitor \
  servicemonitor/kubelet-monitor \
  serviceaccount/kube-state-metrics \
  clusterrole/kube-state-metrics \
  clusterrolebinding/kube-state-metrics \
  service/kube-state-metrics \
  deployment/kube-state-metrics \
  servicemonitor/ksm-monitor \
  secret/logs-secret \
  logsinstance/grafana-agent-logs \
  podlogs/kubernetes-logs \
  persistentvolumeclaim/agent-eventhandler \
  integration/agent-eventhandler \
  serviceaccount/opencost \
  secret/opencost \
  clusterrole/opencost \
  clusterrolebinding/opencost \
  service/opencost \
  deployment/opencost \
  servicemonitor/opencost

Remove Grafana Agent Operator and associated CRDs

Follow the instructions for the deployment method you used.

Configured with Helm

If you previously deployed Grafana Agent Operator with Helm, copy and run the following to remove Grafana Agent Operator along with the associated Operator CRDs:

helm delete -n "${NAMESPACE}" grafana-agent-operator

Configured without Helm

If you deployed without Helm, you must manually remove Grafana Agent Operator and the associated Operator CRDs:

| Kind | Name |
|---|---|
| ServiceAccount | grafana-agent-operator |
| ClusterRole | grafana-agent-operator |
| ClusterRoleBinding | grafana-agent-operator |
| Deployment | grafana-agent-operator |

To perform this manual removal, copy and run the following:

kubectl delete -n "${NAMESPACE}" \
  deployment/grafana-agent-operator serviceaccount/grafana-agent-operator \
  clusterrole/grafana-agent-operator clusterrolebinding/grafana-agent-operator

kubectl delete \
  -f https://raw.githubusercontent.com/grafana/agent/main/production/operator/crds/monitoring.coreos.com_podmonitors.yaml \
  -f https://raw.githubusercontent.com/grafana/agent/main/production/operator/crds/monitoring.coreos.com_probes.yaml \
  -f https://raw.githubusercontent.com/grafana/agent/main/production/operator/crds/monitoring.coreos.com_servicemonitors.yaml \
  -f https://raw.githubusercontent.com/grafana/agent/main/production/operator/crds/monitoring.grafana.com_grafanaagents.yaml \
  -f https://raw.githubusercontent.com/grafana/agent/main/production/operator/crds/monitoring.grafana.com_integrations.yaml \
  -f https://raw.githubusercontent.com/grafana/agent/main/production/operator/crds/monitoring.grafana.com_logsinstances.yaml \
  -f https://raw.githubusercontent.com/grafana/agent/main/production/operator/crds/monitoring.grafana.com_metricsinstances.yaml \
  -f https://raw.githubusercontent.com/grafana/agent/main/production/operator/crds/monitoring.grafana.com_podlogs.yaml

Check cardinality

As a best practice, after upgrading, check the current metrics usage and associated costs in the billing and usage dashboard in your Grafana instance to confirm that the collected metrics are what you expect.

Refer to Metrics control and management for more details.
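Alongside checking cardinality, you can confirm that no Grafana Agent Operator CRDs remain in the cluster. This matters for the Helm path too, because Helm does not delete CRDs that a chart installs from its crds/ directory. A minimal sketch, assuming the monitoring.grafana.com API group of the CRD manifests used in the removal step; the function name remaining_agent_crds is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report any remaining CRDs in the monitoring.grafana.com group.
remaining_agent_crds() {
  local crds
  crds="$(kubectl get crd -o name)" || return 1
  # Names look like customresourcedefinition.apiextensions.k8s.io/<plural>.<group>,
  # for example .../grafanaagents.monitoring.grafana.com
  if printf '%s\n' "$crds" | grep '\.monitoring\.grafana\.com$'; then
    echo "Grafana Agent Operator CRDs are still present" >&2
    return 1
  fi
  echo "no monitoring.grafana.com CRDs found"
}
```

Any CRD names the function prints can be removed with the kubectl delete -f command from the manual removal step.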

Upgrade to use cost monitoring

If you have already deployed Kubernetes Monitoring using Agent or Agent Operator, follow the instructions on this page to upgrade from Agent in static mode or from Agent Operator.

Create an access policy token

OpenCost must be able to read metrics from your hosted Prometheus instance.

Create an access policy token with the metrics:read scope.

Deploy OpenCost

Deploy OpenCost with its Helm chart. To do so, copy and run the following in your terminal:

helm repo add opencost https://opencost.github.io/opencost-helm-chart && \
helm repo update && \
helm install opencost opencost/opencost -n "default" -f - <<EOF
fullnameOverride: opencost
opencost:
  exporter:
    defaultClusterId: "REPLACE_WITH_CLUSTER_NAME"
    extraEnv:
      CLOUD_PROVIDER_API_KEY: AIzaSyD29bGxmHAVEOBYtgd8sYM2gM2ekfxQX4U
      EMIT_KSM_V1_METRICS: "false"
      EMIT_KSM_V1_METRICS_ONLY: "true"
      PROM_CLUSTER_ID_LABEL: cluster
  prometheus:
    password: "REPLACE_WITH_ACCESS_POLICY_TOKEN"
    username: "REPLACE_WITH_GRAFANA_CLOUD_PROMETHEUS_USER_ID"
    external:
      enabled: true
      url: "REPLACE_WITH_GRAFANA_CLOUD_PROMETHEUS_QUERY_ENDPOINT"
    internal: { enabled: false }
  ui: { enabled: false }
EOF

| Value | Description |
|---|---|
| defaultClusterId | The name of your cluster |
| CLOUD_PROVIDER_API_KEY | Supplied for evaluation. For example, the GCP pricing API requires a key. |
| password | The access policy token you created in the previous step |
| username | Your Prometheus user ID (a number) |
| url | Your Prometheus query endpoint |
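If you keep these values in a file instead of the heredoc, for example a hypothetical opencost-values.yaml passed to helm install with -f opencost-values.yaml, a quick check like the following catches any REPLACE_WITH_ placeholder you have not filled in. This is a sketch; the function name check_placeholders is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: fail if a values file still contains REPLACE_WITH_ placeholders.
check_placeholders() {
  local values_file="$1"
  if [ ! -f "$values_file" ]; then
    echo "values file not found: $values_file" >&2
    return 1
  fi
  # grep -n prints each offending line with its line number.
  if grep -n 'REPLACE_WITH_' "$values_file"; then
    echo "replace the placeholders above before installing" >&2
    return 1
  fi
  return 0
}
```

For example: `check_placeholders opencost-values.yaml && helm install opencost opencost/opencost -n "default" -f opencost-values.yaml`. The install only runs when every placeholder has been replaced.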