Manage your Kubernetes Monitoring configuration

Kubernetes Monitoring gathers metrics, logs, and events, and calculates costs for your infrastructure. It also provides recording rules, alerting rules, and allowlists.

How Kubernetes Monitoring works

Kubernetes Monitoring uses the following to provide its data and visualizations.

cAdvisor

cAdvisor (one per Node) runs on each Node in your Cluster and emits container resource usage metrics such as CPU, memory, and disk usage. Alloy collects these metrics and sends them to Grafana Cloud.

Cluster events

Kubernetes Cluster controllers emit events about the lifecycle of Pods, Deployments, and Nodes within the Cluster. Alloy pulls these Cluster events from the Kubernetes API server, converts them into log lines, and sends them to Grafana Cloud Logs.

Grafana Alloy

- Collects all metrics, Cluster events, and Pod logs

- Receives traces pushed from applications on Clusters

- Sends the data to Grafana Cloud

kube-state-metrics

Kubernetes Monitoring uses the kube-state-metrics service (one replica, by default) to show you the relationships between Clusters, Nodes, Pods, and containers. The kube-state-metrics service listens to Kubernetes API server events and generates Prometheus metrics that document the state of your Cluster’s objects. Over a thousand different metrics report the status, capacity, and health of individual containers, Pods, Deployments, and other resources.

kube-state-metrics:

- Generates metrics without modification

- Provides metrics on the state of objects in your Cluster (Pods, Deployments, DaemonSets)

The Kubernetes Monitoring Cluster navigation feature requires the following metrics. Entries ending in .+ are regular expressions that match metric names beginning with that prefix.

- kube_namespace_status_phase

- container_cpu_usage_seconds_total

- kube_pod_status_phase

- kube_pod_start_time

- kube_pod_container_status_restarts_total

- kube_pod_container_info

- kube_pod_container_status_waiting_reason

- kube_daemonset.+

- kube_replicaset.+

- kube_statefulset.+

- kube_job.+

- kube_node.+

- kube_cluster.+

- node_cpu_seconds_total

- node_memory_MemAvailable_bytes

- node_filesystem_size_bytes

- node_namespace_pod_container

- container_memory_working_set_bytes
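After you tighten an allowlist, you may want to confirm that the metrics you still collect satisfy the list above. One way is to test each entry against your collected metric names. The Python sketch below is not part of Kubernetes Monitoring. It assumes you already have the collected metric names as a list of strings. It treats entries ending in .+ as fully anchored regular expressions and every other entry as an exact name. The helper missing_requirements is hypothetical.

```python
import re

# Requirements as listed for Cluster navigation. Assumption: entries ending
# in ".+" are regular expressions; the rest are exact metric names.
REQUIRED_METRICS = [
    "kube_namespace_status_phase",
    "container_cpu_usage_seconds_total",
    "kube_pod_status_phase",
    "kube_pod_start_time",
    "kube_pod_container_status_restarts_total",
    "kube_pod_container_info",
    "kube_pod_container_status_waiting_reason",
    "kube_daemonset.+",
    "kube_replicaset.+",
    "kube_statefulset.+",
    "kube_job.+",
    "kube_node.+",
    "kube_cluster.+",
    "node_cpu_seconds_total",
    "node_memory_MemAvailable_bytes",
    "node_filesystem_size_bytes",
    "node_namespace_pod_container",
    "container_memory_working_set_bytes",
]


def missing_requirements(collected_names):
    """Hypothetical helper: return the entries that no collected name satisfies."""
    missing = []
    for pattern in REQUIRED_METRICS:
        # fullmatch anchors the pattern at both ends, like Prometheus regexes,
        # so a plain name such as "kube_pod_start_time" must match exactly.
        if not any(re.fullmatch(pattern, name) for name in collected_names):
            missing.append(pattern)
    return missing
```

For example, missing_requirements(["kube_pod_status_phase", "kube_daemonset_status_number_ready"]) still reports the other 16 entries as missing. A bare kube_daemonset would not satisfy kube_daemonset.+, because .+ requires at least one more character.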

kubelet

kubelet (one per Node):

- Is the primary “Node agent” present on each Node in the Cluster

- Emits metrics specific to the kubelet process, such as kubelet_running_pods and kubelet_running_container_count

- Ensures containers are running

- Provides metrics on Pods and their containers

Grafana Alloy collects these metrics and sends them to Grafana Cloud.

Kubernetes mixins

Kubernetes Monitoring is heavily indebted to the open source kubernetes-mixin project, from which its recording and alerting rules are derived. Grafana Labs continues to contribute bug fixes and new features upstream.

Node Exporter

The Prometheus exporter node-exporter runs as a DaemonSet on the Cluster to:

- Gather metrics on hardware and OS for Linux Nodes in the Cluster

- Emit Prometheus metrics for the health and state of the Nodes in your Cluster

Grafana Alloy collects these metrics and sends them to Grafana Cloud.

OpenCost

Kubernetes Monitoring combines OpenCost and Grafana to let you monitor and manage costs related to your Kubernetes Cluster. For more details, refer to Manage costs.

Pod logs

Alloy pulls Pod logs from the workloads running within containers, and sends them to Loki.

Note

Log entries must be sent to a Loki data source with cluster, namespace, and pod labels.
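If you forward logs through your own pipeline, you can catch entries that would lack these labels by checking each stream's label set before sending it. The Python sketch below does that. It assumes stream labels are available as a dict of strings. The helper missing_log_labels is hypothetical and is not part of Alloy or Loki.

```python
REQUIRED_LOG_LABELS = ("cluster", "namespace", "pod")


def missing_log_labels(stream_labels):
    """Hypothetical helper: list required labels that are absent or empty."""
    # An empty label value is treated the same as a missing label.
    return [name for name in REQUIRED_LOG_LABELS if not stream_labels.get(name)]
```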

Traces

Applications within the Cluster push the traces they generate to Grafana Alloy. The addresses listed when you configure Kubernetes Monitoring with the Helm chart are the endpoints to which traces can be pushed.

Windows Exporter

When monitoring Windows Nodes, the configuration installs the windows-exporter DaemonSet to ensure metrics are available for scraping.

Recording rules

Recording rules precompute expressions that are frequently needed or computationally expensive, and save the results as a new set of time series. Querying the precomputed series is faster than evaluating the original expression each time.
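The Python sketch below illustrates the idea. It does not show how Grafana Cloud evaluates rules. It precomputes a sum grouped by namespace and stores each result under a new series name, which is conceptually what a rule such as namespace_cpu:kube_pod_container_resource_requests:sum does. The helper record_sum_by and the sample data are hypothetical, and the sketch omits the label filters the upstream rule applies.

```python
from collections import defaultdict


def record_sum_by(series, by, new_name):
    """Hypothetical helper: precompute sum by (<by>) as new labeled series.

    series is a list of (labels, value) pairs, where labels is a dict.
    """
    totals = defaultdict(float)
    for labels, value in series:
        # A missing label is treated as an empty value, as in Prometheus.
        key = tuple((name, labels.get(name, "")) for name in by)
        totals[key] += value
    # Each group becomes one new series named after the recording rule.
    return [({"__name__": new_name, **dict(key)}, total) for key, total in totals.items()]


# Hypothetical per-container CPU requests, in cores.
cpu_requests = [
    ({"namespace": "shop", "pod": "web-1"}, 0.5),
    ({"namespace": "shop", "pod": "web-2"}, 0.25),
    ({"namespace": "batch", "pod": "job-1"}, 1.0),
]
recorded = record_sum_by(
    cpu_requests, ["namespace"], "namespace_cpu:kube_pod_container_resource_requests:sum"
)
```

Dashboards and alerts can then read the two precomputed series instead of re-aggregating every container series at query time.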

Note

Recording rules may emit time series with the same metric name, but different labels. To modify these programmatically, refer to Set up Alerting for Cloud.

Kubernetes Monitoring includes the following recording rules to speed up queries and the evaluation of alerting rules.

apiserver_request:availability30d

apiserver_request:availability30d

apiserver_request:availability30d

apiserver_request:burnrate1d

apiserver_request:burnrate1d

apiserver_request:burnrate1h

apiserver_request:burnrate1h

apiserver_request:burnrate2h

apiserver_request:burnrate2h

apiserver_request:burnrate30m

apiserver_request:burnrate30m

apiserver_request:burnrate3d

apiserver_request:burnrate3d

apiserver_request:burnrate5m

apiserver_request:burnrate5m

apiserver_request:burnrate6h

apiserver_request:burnrate6h

cluster:namespace:pod_cpu:active:kube_pod_container_resource_limits

cluster:namespace:pod_cpu:active:kube_pod_container_resource_requests

cluster:namespace:pod_memory:active:kube_pod_container_resource_limits

cluster:namespace:pod_memory:active:kube_pod_container_resource_requests

cluster_quantile:apiserver_request_sli_duration_seconds:histogram_quantile

cluster_quantile:apiserver_request_sli_duration_seconds:histogram_quantile

cluster_quantile:scheduler_binding_duration_seconds:histogram_quantile

cluster_quantile:scheduler_binding_duration_seconds:histogram_quantile

cluster_quantile:scheduler_binding_duration_seconds:histogram_quantile

cluster_quantile:scheduler_e2e_scheduling_duration_seconds:histogram_quantile

cluster_quantile:scheduler_e2e_scheduling_duration_seconds:histogram_quantile

cluster_quantile:scheduler_e2e_scheduling_duration_seconds:histogram_quantile

cluster_quantile:scheduler_scheduling_algorithm_duration_seconds:histogram_quantile

cluster_quantile:scheduler_scheduling_algorithm_duration_seconds:histogram_quantile

cluster_quantile:scheduler_scheduling_algorithm_duration_seconds:histogram_quantile

cluster_verb_scope:apiserver_request_sli_duration_seconds_count:increase1h

cluster_verb_scope:apiserver_request_sli_duration_seconds_count:increase30d

cluster_verb_scope_le:apiserver_request_sli_duration_seconds_bucket:increase1h

cluster_verb_scope_le:apiserver_request_sli_duration_seconds_bucket:increase30d

code:apiserver_request_total:increase30d

code:apiserver_request_total:increase30d

code_resource:apiserver_request_total:rate5m

code_resource:apiserver_request_total:rate5m

code_verb:apiserver_request_total:increase1h

code_verb:apiserver_request_total:increase1h

code_verb:apiserver_request_total:increase1h

code_verb:apiserver_request_total:increase1h

code_verb:apiserver_request_total:increase30d

container_cpu_usage_seconds_total

container_memory_rss

container_memory_working_set_bytes

namespace_cpu:kube_pod_container_resource_limits:sum

namespace_cpu:kube_pod_container_resource_requests:sum

namespace_memory:kube_pod_container_resource_limits:sum

namespace_memory:kube_pod_container_resource_requests:sum

namespace_workload_pod:kube_pod_owner:relabel

namespace_workload_pod:kube_pod_owner:relabel

namespace_workload_pod:kube_pod_owner:relabel

namespace_workload_pod:kube_pod_owner:relabel

node_namespace_pod_container:container_cpu_usage_seconds_total:sum_irate

node_namespace_pod_container:container_memory_cache

node_namespace_pod_container:container_memory_rss

node_namespace_pod_container:container_memory_swap

node_namespace_pod_container:container_memory_working_set_bytes

node_quantile:kubelet_pleg_relist_duration_seconds:histogram_quantile

node_quantile:kubelet_pleg_relist_duration_seconds:histogram_quantile

node_quantile:kubelet_pleg_relist_duration_seconds:histogram_quantile

Alerting rules

Kubernetes Monitoring comes with preconfigured alerting rules to alert on conditions such as “Pods crash looping” and “Pods getting stuck in not ready”. The following alerting rules create alerts to notify you when issues arise with your Clusters and their workloads.

To learn more, refer to the Kubernetes Alert Runbooks page of the upstream kubernetes-mixin project. To programmatically update the runbook links in these preconfigured alerts so that they point to your own runbooks, use a tool such as cortex-tools or grizzly.

Kubelet alerting rules

- KubeNodeNotReady

- KubeNodeReadinessFlapping

- KubeNodeUnreachable

- KubeletClientCertificateExpiration (7-day expiration)

- KubeletClientCertificateExpiration (1-day expiration)

- KubeletDown

- KubeletPlegDurationHigh

- KubeletPodStartUpLatencyHigh

- KubeletServerCertificateExpiration (7-day expiration)

- KubeletServerCertificateExpiration (1-day expiration)

- KubeletClientCertificateRenewalErrors

- KubeletServerCertificateRenewalErrors

- KubeletTooManyPods

Kubernetes alerting rules

- KubeContainerWaiting

- KubeDaemonSetMisScheduled

- KubeDaemonSetNotScheduled

- KubeDaemonSetRolloutStuck

- KubeDeploymentGenerationMismatch

- KubeDeploymentReplicasMismatch

- KubeDeploymentRolloutStuck

- KubeHpaMaxedOut

- KubeHpaReplicasMismatch

- KubeJobFailed

- KubeJobNotCompleted

- KubePodCrashLooping

- KubePodNotReady

- KubeStatefulSetGenerationMismatch

- KubeStatefulSetReplicasMismatch

- KubeStatefulSetUpdateNotRolledOut

Kubernetes API alerting rules

- KubeAggregatedAPIDown

- KubeAggregatedAPIErrors

- KubeAPIDown

- KubeAPIErrorBudgetBurn

- KubeAPIErrorBudgetBurn

- KubeAPIErrorBudgetBurn

- KubeAPIErrorBudgetBurn

- KubeAPITerminatedRequests

- KubeClientCertificateExpiration (less than 7 days)

- KubeClientCertificateExpiration (less than 1 day)

Kubernetes resource usage alerting rules

- CPUThrottlingHigh

- KubeCPUOvercommit

- KubeCPUQuotaOvercommit

- KubeMemoryOvercommit

- KubeMemoryQuotaOvercommit

- KubeQuotaAlmostFull

- KubeQuotaExceeded

- KubeQuotaFullyUsed

Kubernetes storage alerting rules

- KubePersistentVolumeErrors

- KubePersistentVolumeFillingUp (1 hour)

- KubePersistentVolumeFillingUp (1 minute)

- KubePersistentVolumeInodesFillingUp (1 hour)

- KubePersistentVolumeInodesFillingUp (1 minute)

Kubernetes system alerting rules

- KubeClientErrors

- KubeVersionMismatch

Metrics management and control

If your account is billed on billable series, you can control and manage your metrics in the following ways.

Identify unnecessary or duplicate metrics

To identify unnecessary or duplicate metrics generated from a Cluster, you can:

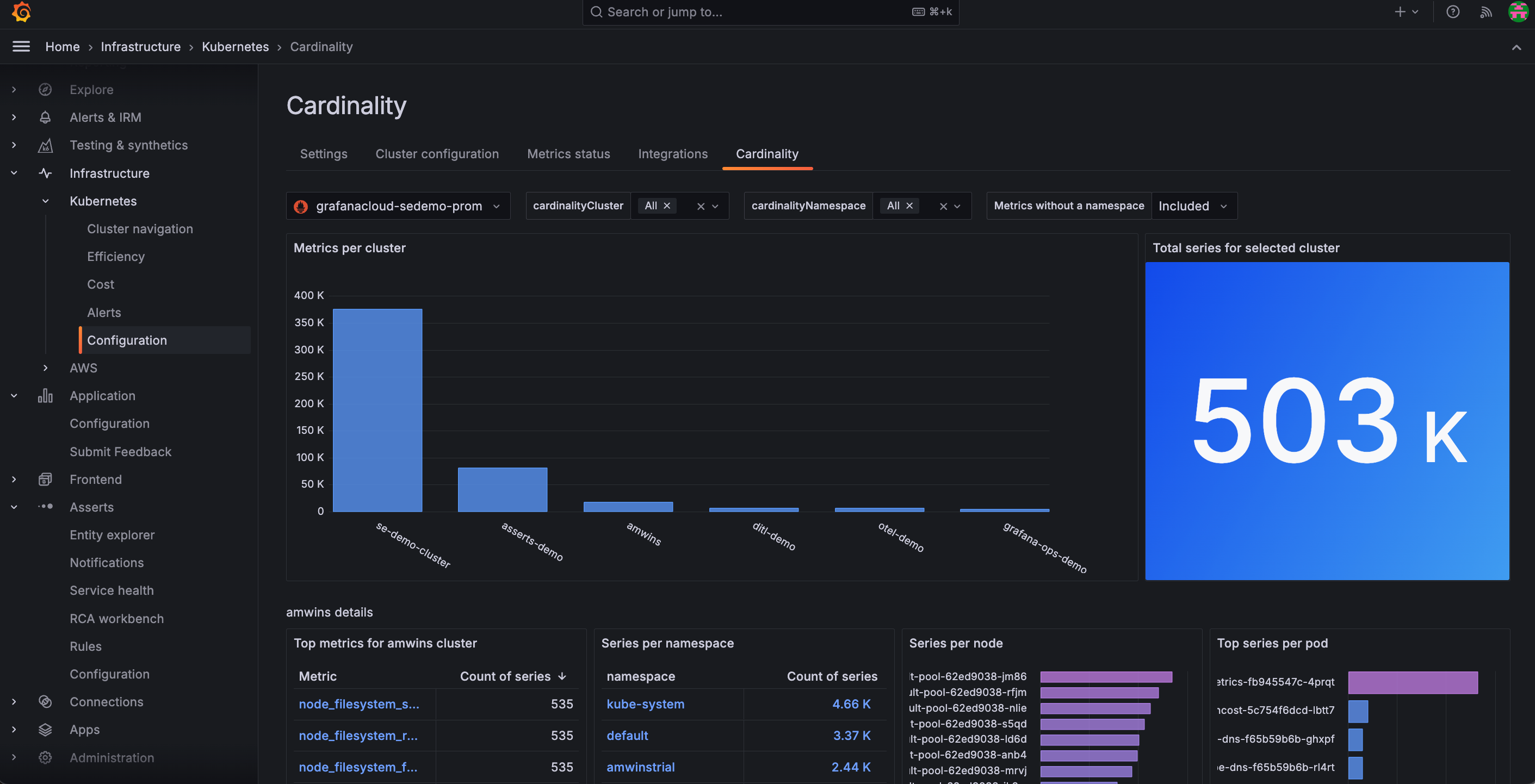

- Use the Cardinality page to discover on a Cluster-by-Cluster basis where all your active series are coming from. From the main menu, click Configuration and then the Cardinality tab.

![Cardinality page within the app Cardinality page within the app]()

Cardinality page within the app - Analyze current metrics usage and associated costs from the billing and usage dashboard located in your Grafana instance.
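You can think of the Cardinality page as answering one question: which metric names contribute the most series in a given Cluster? To reason about the same question offline, for example from an exported list of series, you can use the Python sketch below. It assumes each series is a dict of labels that includes __name__ and cluster keys. The helper series_per_metric is hypothetical.

```python
from collections import Counter


def series_per_metric(series, cluster):
    """Hypothetical helper: count active series per metric name in one Cluster.

    series is a list of label dicts, each including "__name__" and "cluster".
    Returns (metric name, series count) pairs, largest first.
    """
    counts = Counter(
        labels["__name__"] for labels in series if labels.get("cluster") == cluster
    )
    return counts.most_common()
```

Metric names near the top of the result are the best candidates for review when you refine an allowlist.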

Analyze usage

For techniques to analyze usage, refer to Analyze Prometheus metrics costs.

Reduce usage

Use and refine an allowlist to reduce metrics to only those you want to receive. An allowlist is a set of metrics and labels that you want to gather while all others are dropped. Out of the box, Kubernetes Monitoring has allowlists configured with Prometheus metric_relabel_configs blocks.

For more about Prometheus drop and keep relabeling options, refer to Relabeling rule fields.

You can remove or modify allowlists by editing the corresponding metric_relabel_configs blocks in your Alloy configuration. To learn more about relabeling to control the metrics you want, refer to Relabel Prometheus metrics to reduce usage.

You can also tune and refine the metrics of an existing allowlist or create a custom allowlist.
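An allowlist is a keep action: a series survives only if its label value matches the regular expression. A drop action is the inverse. The Python sketch below models those two actions, including the fact that relabel regexes must match the whole value. It is a simplified model and not Alloy's implementation. The helper relabel_filter and its parameters are hypothetical, and it reads a single source label rather than several joined labels.

```python
import re


def relabel_filter(series, source_label, regex, action):
    """Hypothetical, simplified keep/drop relabeling over a list of label dicts.

    Real relabel rules accept several source_labels joined by a separator;
    this sketch reads a single label only.
    """
    if action not in ("keep", "drop"):
        raise ValueError(f"unsupported action: {action}")
    pattern = re.compile(regex)
    kept = []
    for labels in series:
        # Relabel regexes are anchored, so use fullmatch rather than search.
        matched = pattern.fullmatch(labels.get(source_label, "")) is not None
        # keep: retain matches; drop: retain non-matches.
        if matched == (action == "keep"):
            kept.append(labels)
    return kept
```

For example, keeping __name__ values that match kube_pod_.+ retains kube_pod_info and kube_pod_status_phase but filters out go_gc_duration_seconds. A regex of just pod keeps nothing, because it must match the entire metric name.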

Billable series

If your account does not use host-hours pricing, pricing is based on billable series. To learn more about this pricing model, refer to Active series and DPM.

The number of active series produced by default telemetry collection varies with your Kubernetes Cluster size (number of Nodes) and running workloads (number of Pods, containers, Deployments, and so on).

When testing on a Cloud provider’s Kubernetes offering, the following active series usage was observed:

- 3-Node Cluster, 17 running Pods, 31 running containers: 3.8k active series

  - The only Pods deployed into the Cluster were Grafana Agent and kube-state-metrics. The rest were running in the kube-system Namespace and managed by the cloud provider.

- From this baseline, active series usage roughly increased by:

  - 1000 active series per additional Node

  - 75 active series per additional Pod (vanilla Nginx Pods were deployed into the Cluster)

These are very rough guidelines, and results may vary depending on your Cloud provider or Kubernetes version. These figures also cover only the default scrape targets, not additional targets such as application metrics, API server metrics, and scheduler metrics.
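Because the observed increases are roughly linear, you can turn them into a back-of-the-envelope estimate. The Python sketch below assumes that the per-Node and per-Pod increases simply add up. It also assumes that application, API server, and scheduler metrics are excluded. The helper estimate_active_series is hypothetical, and its result inherits all the caveats above.

```python
# Observed figures from the test described above (rough guidelines only).
BASELINE_SERIES = 3_800
BASELINE_NODES = 3
BASELINE_PODS = 17
SERIES_PER_EXTRA_NODE = 1_000
SERIES_PER_EXTRA_POD = 75


def estimate_active_series(nodes, pods):
    """Hypothetical helper: linear extrapolation from the observed baseline.

    Clusters at or below the baseline size simply return the baseline.
    """
    extra_nodes = max(nodes - BASELINE_NODES, 0)
    extra_pods = max(pods - BASELINE_PODS, 0)
    return (
        BASELINE_SERIES
        + extra_nodes * SERIES_PER_EXTRA_NODE
        + extra_pods * SERIES_PER_EXTRA_POD
    )
```

For a 5-Node Cluster running 50 Pods, this gives 3,800 + 2 × 1,000 + 33 × 75 = 8,275 active series, which is a ballpark figure only.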

Logs management

Control and manage logs by:

- Only collecting logs from Pods in certain namespaces

- Dropping logs based on content

For more on analyzing, customizing, and de-duplicating logs, refer to Logs in Explore.

Limit logs to only Pods from certain namespaces

By default, Kubernetes Monitoring gathers logs from Pods in every namespace. However, you may only want or need logs from Pods in certain namespaces.

In the Grafana Alloy configuration, you can add a relabel_configs block that either keeps Pods or drops Pods.

For example, the following gathers logs only from the production and staging namespaces:

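```alloy
// Keep only targets whose namespace label is exactly "production" or
// "staging"; targets from every other namespace are dropped.
rule {
  source_labels = ["namespace"]
  regex         = "production|staging"
  action        = "keep"
}
```

Drop logs based on content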

As with filtering by namespace, you can use Loki processing stages to further process and optionally drop log lines.
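Conceptually, a drop stage tests each log line against a regular expression and discards the lines that match. The Python sketch below models that behavior for the expression used in the stage.drop example in this section. It illustrates the filtering logic only and is not how Loki or Alloy implements the stage. The helper filter_log_lines is hypothetical.

```python
import re

# Expression taken from the stage.drop example in this section.
DROP_EXPRESSION = re.compile(r".*(debug|DEBUG).*")


def filter_log_lines(lines):
    """Hypothetical model of a drop stage: keep only non-matching lines."""
    # The leading and trailing .* let the expression match "debug" or
    # "DEBUG" anywhere in the line; search() finds such a match.
    return [line for line in lines if not DROP_EXPRESSION.search(line)]
```

The expression is case-sensitive, so a line that contains only Debug is kept.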

For example, this processing stage drops any log lines that contain the word debug:

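```alloy
// A stage inside a loki.process component: drop every log line that
// contains "debug" or "DEBUG".
stage.drop {
  expression = ".*(debug|DEBUG).*"
}
```

Get support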

To open a support ticket, navigate to your Grafana Cloud Portal, and click Open a Support Ticket.