Send Kubernetes metrics, logs, and events with Grafana Agent Operator

Note

Grafana Agent Operator is deprecated. The recommended way to configure Kubernetes Monitoring is with the Grafana Kubernetes Monitoring Helm chart.

When you set up Kubernetes Monitoring with Grafana Agent Operator, the operator deploys and configures Grafana Agent automatically from Kubernetes custom resources, which are defined by custom resource definitions (CRDs). This configuration method also provides preconfigured alerts.

The telemetry data collected includes:

- Kubernetes cluster metrics

- kubelet and cAdvisor metrics

- kube-state-metrics

- Container logs

- Kubernetes events

Before you begin

To deploy Kubernetes Monitoring, you need:

- A Kubernetes cluster, environment, or fleet that you want to monitor

- The kubectl command-line tool, and Helm if you choose to install Agent Operator with Helm

Note

Deploy all of the required resources in the same namespace. Resources spread across different namespaces can result in missing data.

Install CRDs and deploy Agent Operator

You can set up Agent Operator with or without Helm.

Set up with Helm

Run the following commands to deploy Grafana Agent Operator and its associated CRDs. Replace NAMESPACE with the namespace for Grafana Agent Operator:

helm repo add grafana https://grafana.github.io/helm-charts
helm repo update
helm install grafana-agent-operator --create-namespace grafana/grafana-agent-operator -n "NAMESPACE"

Set up without Helm

If you don’t want to use Helm, you must install the CRDs and Grafana Agent Operator separately.

Run the following command to deploy the CRDs:

kubectl create -f https://raw.githubusercontent.com/grafana/agent/main/production/operator/crds/monitoring.coreos.com_podmonitors.yaml \
  -f https://raw.githubusercontent.com/grafana/agent/main/production/operator/crds/monitoring.coreos.com_probes.yaml \
  -f https://raw.githubusercontent.com/grafana/agent/main/production/operator/crds/monitoring.coreos.com_servicemonitors.yaml \
  -f https://raw.githubusercontent.com/grafana/agent/main/production/operator/crds/monitoring.grafana.com_grafanaagents.yaml \
  -f https://raw.githubusercontent.com/grafana/agent/main/production/operator/crds/monitoring.grafana.com_integrations.yaml \
  -f https://raw.githubusercontent.com/grafana/agent/main/production/operator/crds/monitoring.grafana.com_logsinstances.yaml \
  -f https://raw.githubusercontent.com/grafana/agent/main/production/operator/crds/monitoring.grafana.com_metricsinstances.yaml \
  -f https://raw.githubusercontent.com/grafana/agent/main/production/operator/crds/monitoring.grafana.com_podlogs.yaml

Save the following to a file. In the file, replace NAMESPACE with the desired namespace for your Grafana Agent Operator. Use kubectl apply -f <file.yaml> to deploy the operator.

---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: grafana-agent-operator
  namespace: NAMESPACE
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: grafana-agent-operator
rules:
- apiGroups:
  - monitoring.grafana.com
  resources:
  - grafanaagents
  - metricsinstances
  - logsinstances
  - podlogs
  - integrations
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - monitoring.grafana.com
  resources:
  - grafanaagents/finalizers
  - metricsinstances/finalizers
  - logsinstances/finalizers
  - podlogs/finalizers
  - integrations/finalizers
  verbs:
  - get
  - list
  - watch
  - update
- apiGroups:
  - monitoring.coreos.com
  resources:
  - podmonitors
  - probes
  - servicemonitors
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - monitoring.coreos.com
  resources:
  - podmonitors/finalizers
  - probes/finalizers
  - servicemonitors/finalizers
  verbs:
  - get
  - list
  - watch
  - update
- apiGroups:
  - ""
  resources:
  - namespaces
  - nodes
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - secrets
  - services
  - configmaps
  - endpoints
  verbs:
  - get
  - list
  - watch
  - create
  - update
  - patch
  - delete
- apiGroups:
  - apps
  resources:
  - statefulsets
  - daemonsets
  - deployments
  verbs:
  - get
  - list
  - watch
  - create
  - update
  - patch
  - delete
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: grafana-agent-operator
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: grafana-agent-operator
subjects:
- kind: ServiceAccount
  name: grafana-agent-operator
  namespace: NAMESPACE
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: grafana-agent-operator
  namespace: NAMESPACE
spec:
  minReadySeconds: 10
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      name: grafana-agent-operator
  template:
    metadata:
      labels:
        name: grafana-agent-operator
    spec:
      containers:
      - args:
        - --kubelet-service=default/kubelet
        image: grafana/agent-operator:v0.35.3
        imagePullPolicy: IfNotPresent
        name: grafana-agent-operator
      serviceAccountName: grafana-agent-operator
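Each manifest in this guide uses uppercase placeholders such as NAMESPACE, CLUSTER_NAME, or METRICS_HOST that you replace by hand. If you prefer to script that substitution, the following is a minimal Python sketch. The render helper and the example values are hypothetical, and the sketch assumes that placeholders appear as whole tokens, as in NAMESPACE/grafana-agent-logs.

```python
import re


def render(template: str, values: dict[str, str]) -> str:
    """Replace whole-token placeholders (for example NAMESPACE) in a manifest.

    Word boundaries mean NAMESPACE is replaced in "NAMESPACE/grafana-agent-logs"
    but not inside a longer token such as "MY_NAMESPACE", because "_" counts
    as a word character.
    """
    if not values:
        return template
    pattern = re.compile(r"\b(" + "|".join(map(re.escape, values)) + r")\b")
    # A function replacement stops re from interpreting backslashes in values.
    return pattern.sub(lambda m: values[m.group(1)], template)


if __name__ == "__main__":
    # Hypothetical manifest excerpt and namespace value.
    manifest = "metadata:\n  namespace: NAMESPACE\n"
    print(render(manifest, {"NAMESPACE": "monitoring"}), end="")
```

Write the rendered text to a file and deploy it with kubectl apply -f <file.yaml>, as in the other steps. Using the same values dictionary for every manifest also helps you keep all resources in one namespace.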

Use a Grafana Cloud Access Policy Token

You can create a new access policy token or use an existing token. See Grafana Cloud Access Policies for more information.

You’ll use the token in future steps.
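In the metrics and logs steps, the token is stored in a Kubernetes Secret and referenced from a basicAuth block, so Grafana Agent sends it as the password of an HTTP basic-auth pair, together with the username for your Prometheus or Loki instance. To illustrate what that pair looks like on the wire, the following Python sketch builds a Basic Authorization header as defined in RFC 7617. The helper name is hypothetical, and the credentials are the RFC's example values, not real ones.

```python
import base64


def basic_auth_header(username: str, token: str) -> str:
    """Return the value of an HTTP Basic Authorization header (RFC 7617).

    The username and token are joined with ":", UTF-8 encoded, and base64
    encoded. This is an encoding, not encryption, so treat the header (and
    the Secret that holds the token) as sensitively as the token itself.
    """
    credentials = f"{username}:{token}".encode("utf-8")
    return "Basic " + base64.b64encode(credentials).decode("ascii")


if __name__ == "__main__":
    # Example credentials from RFC 7617; never hard-code a real token.
    print(basic_auth_header("Aladdin", "open sesame"))
```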

Deploy kube-state-metrics to your cluster

Save the following to a file. Replace NAMESPACE with the desired namespace for kube-state-metrics, and use kubectl apply -f <file.yaml> to deploy it.

---
apiVersion: v1
automountServiceAccountToken: false
kind: ServiceAccount
metadata:
  labels:
    app.kubernetes.io/component: exporter
    app.kubernetes.io/name: kube-state-metrics
    app.kubernetes.io/version: 2.8.2
  name: kube-state-metrics
  namespace: NAMESPACE
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    app.kubernetes.io/component: exporter
    app.kubernetes.io/name: kube-state-metrics
    app.kubernetes.io/version: 2.8.2
  name: kube-state-metrics
rules:
- apiGroups:
  - ""
  resources:
  - configmaps
  - secrets
  - nodes
  - pods
  - services
  - resourcequotas
  - replicationcontrollers
  - limitranges
  - persistentvolumeclaims
  - persistentvolumes
  - namespaces
  - endpoints
  verbs:
  - list
  - watch
- apiGroups:
  - apps
  resources:
  - statefulsets
  - daemonsets
  - deployments
  - replicasets
  verbs:
  - list
  - watch
- apiGroups:
  - batch
  resources:
  - cronjobs
  - jobs
  verbs:
  - list
  - watch
- apiGroups:
  - autoscaling
  resources:
  - horizontalpodautoscalers
  verbs:
  - list
  - watch
- apiGroups:
  - authentication.k8s.io
  resources:
  - tokenreviews
  verbs:
  - create
- apiGroups:
  - authorization.k8s.io
  resources:
  - subjectaccessreviews
  verbs:
  - create
- apiGroups:
  - policy
  resources:
  - poddisruptionbudgets
  verbs:
  - list
  - watch
- apiGroups:
  - certificates.k8s.io
  resources:
  - certificatesigningrequests
  verbs:
  - list
  - watch
- apiGroups:
  - storage.k8s.io
  resources:
  - storageclasses
  - volumeattachments
  verbs:
  - list
  - watch
- apiGroups:
  - admissionregistration.k8s.io
  resources:
  - mutatingwebhookconfigurations
  - validatingwebhookconfigurations
  verbs:
  - list
  - watch
- apiGroups:
  - networking.k8s.io
  resources:
  - networkpolicies
  - ingresses
  verbs:
  - list
  - watch
- apiGroups:
  - coordination.k8s.io
  resources:
  - leases
  verbs:
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    app.kubernetes.io/component: exporter
    app.kubernetes.io/name: kube-state-metrics
    app.kubernetes.io/version: 2.8.2
  name: kube-state-metrics
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: kube-state-metrics
subjects:
- kind: ServiceAccount
  name: kube-state-metrics
  namespace: NAMESPACE
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app.kubernetes.io/component: exporter
    app.kubernetes.io/name: kube-state-metrics
    app.kubernetes.io/version: 2.8.2
  name: kube-state-metrics
  namespace: NAMESPACE
spec:
  clusterIP: None
  ports:
  - name: http-metrics
    port: 8080
    targetPort: http-metrics
  - name: telemetry
    port: 8081
    targetPort: telemetry
  selector:
    app.kubernetes.io/name: kube-state-metrics
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app.kubernetes.io/component: exporter
    app.kubernetes.io/name: kube-state-metrics
    app.kubernetes.io/version: 2.8.2
  name: kube-state-metrics
  namespace: NAMESPACE
spec:
  replicas: 1
  selector:
    matchLabels:
      app.kubernetes.io/name: kube-state-metrics
  template:
    metadata:
      labels:
        app.kubernetes.io/component: exporter
        app.kubernetes.io/name: kube-state-metrics
        app.kubernetes.io/version: 2.8.2
    spec:
      automountServiceAccountToken: true
      containers:
      - image: registry.k8s.io/kube-state-metrics/kube-state-metrics:v2.8.2
        livenessProbe:
          httpGet:
            path: /healthz
            port: 8080
          initialDelaySeconds: 5
          timeoutSeconds: 5
        name: kube-state-metrics
        ports:
        - containerPort: 8080
          name: http-metrics
        - containerPort: 8081
          name: telemetry
        readinessProbe:
          httpGet:
            path: /
            port: 8081
          initialDelaySeconds: 5
          timeoutSeconds: 5
        securityContext:
          allowPrivilegeEscalation: false
          capabilities:
            drop:
            - ALL
          readOnlyRootFilesystem: true
          runAsUser: 65534
      nodeSelector:
        kubernetes.io/os: linux
      serviceAccountName: kube-state-metrics

---
apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  labels:
    instance: primary
  name: ksm-monitor
  namespace: NAMESPACE
spec:
  endpoints:
  - honorLabels: true
    interval: 60s
    metricRelabelings:
    - action: keep
      regex: kube_pod_container_status_waiting_reason|cluster:namespace:pod_cpu:active:kube_pod_container_resource_limits|kube_daemonset_status_desired_number_scheduled|kubelet_certificate_manager_client_ttl_seconds|kube_node_status_condition|kubelet_node_config_error|rest_client_requests_total|kube_horizontalpodautoscaler_status_current_replicas|node_namespace_pod_container:container_memory_cache|kubelet_cgroup_manager_duration_seconds_count|kube_horizontalpodautoscaler_status_desired_replicas|container_fs_writes_total|kube_daemonset_status_current_number_scheduled|kube_pod_info|kubelet_pod_worker_duration_seconds_count|kubelet_pleg_relist_interval_seconds_bucket|kube_job_failed|kube_replicaset_owner|namespace_workload_pod:kube_pod_owner:relabel|kubelet_runtime_operations_errors_total|volume_manager_total_volumes|kubelet_server_expiration_renew_errors|container_memory_rss|container_memory_working_set_bytes|kubelet_running_container_count|container_fs_writes_bytes_total|namespace_cpu:kube_pod_container_resource_requests:sum|kubelet_running_pod_count|kube_statefulset_status_replicas_updated|kube_job_status_active|kube_node_status_capacity|kubelet_volume_stats_inodes|kube_statefulset_status_replicas|kube_deployment_status_replicas_updated|kube_node_status_allocatable|kube_statefulset_status_replicas_ready|node_namespace_pod_container:container_memory_working_set_bytes|kubelet_pod_worker_duration_seconds_bucket|kubelet_runtime_operations_total|kube_horizontalpodautoscaler_spec_max_replicas|kube_statefulset_status_current_revision|node_namespace_pod_container:container_memory_rss|kubelet_pleg_relist_duration_seconds_count|kube_daemonset_status_updated_number_scheduled|kube_horizontalpodautoscaler_spec_min_replicas|container_cpu_usage_seconds_total|kubelet_node_name|kubelet_certificate_manager_client_expiration_renew_errors|kube_pod_owner|container_network_transmit_packets_dropped_total|node_quantile:kubelet_pleg_relist_duration_seconds:histogram_quantile|kube_persistentvolumeclaim_resource_requests_storage_bytes|storage_operation_errors_total|kubelet_cgroup_manager_duration_seconds_bucket|kubelet_pleg_relist_duration_seconds_bucket|go_goroutines|kube_statefulset_status_observed_generation|container_fs_reads_total|container_cpu_cfs_periods_total|kubelet_running_containers|kube_daemonset_status_number_misscheduled|container_network_receive_packets_total|kube_node_info|kube_namespace_status_phase|process_resident_memory_bytes|kube_pod_status_phase|container_network_transmit_packets_total|cluster:namespace:pod_cpu:active:kube_pod_container_resource_requests|container_memory_cache|kubelet_running_pods|kube_job_status_start_time|kube_node_spec_taint|container_network_receive_packets_dropped_total|kube_pod_container_resource_requests|kubelet_volume_stats_available_bytes|node_filesystem_avail_bytes|kube_statefulset_metadata_generation|node_namespace_pod_container:container_cpu_usage_seconds_total:sum_irate|cluster:namespace:pod_memory:active:kube_pod_container_resource_requests|cluster:namespace:pod_memory:active:kube_pod_container_resource_limits|kube_daemonset_status_number_available|namespace_cpu:kube_pod_container_resource_limits:sum|container_fs_reads_bytes_total|kube_pod_container_resource_limits|node_namespace_pod_container:container_memory_swap|process_cpu_seconds_total|container_network_receive_bytes_total|kubelet_volume_stats_capacity_bytes|kubelet_volume_stats_inodes_used|kube_statefulset_replicas|kube_statefulset_status_update_revision|kube_deployment_status_replicas_available|kube_deployment_metadata_generation|kubernetes_build_info|namespace_memory:kube_pod_container_resource_limits:sum|namespace_workload_pod|kube_pod_status_reason|kube_deployment_status_observed_generation|container_memory_swap|kube_deployment_spec_replicas|node_filesystem_size_bytes|kubelet_pod_start_duration_seconds_bucket|namespace_memory:kube_pod_container_resource_requests:sum|machine_memory_bytes|kube_resourcequota|container_cpu_cfs_throttled_periods_total|container_network_transmit_bytes_total|storage_operation_duration_seconds_count|kubelet_pod_start_duration_seconds_count|kubelet_certificate_manager_server_ttl_seconds|kube_namespace_status_phase|container_cpu_usage_seconds_total|kube_pod_status_phase|kube_pod_start_time|kube_pod_container_status_restarts_total|kube_pod_container_info|kube_pod_container_status_waiting_reason|kube_daemonset.*|kube_replicaset.*|kube_statefulset.*|kube_job.*|kube_node.*|node_namespace_pod_container:container_cpu_usage_seconds_total:sum_irate|cluster:namespace:pod_cpu:active:kube_pod_container_resource_requests|namespace_cpu:kube_pod_container_resource_requests:sum|node_cpu.*|node_memory.*|node_filesystem.*|node_network_transmit_bytes_total
      sourceLabels:
      - __name__
    path: /metrics
    port: http-metrics
    relabelings:
    - action: replace
      replacement: integrations/kubernetes/kube-state-metrics
      targetLabel: job
  namespaceSelector:
    matchNames:
    - NAMESPACE
  selector:
    matchLabels:
      app.kubernetes.io/name: kube-state-metrics

Deploy Grafana Agent to your cluster
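The GrafanaAgent resource in this section finds the MetricsInstance, LogsInstance, and Integration resources it should run through matchLabels selectors on agent: grafana-agent, which is why those resources carry that label in the later steps. A matchLabels selector matches an object when every key/value pair it lists appears in the object's labels; extra labels on the object are ignored, and an empty selector matches everything. The following Python sketch models only that rule. The matches function is hypothetical, not part of any Kubernetes client library, and it ignores matchExpressions.

```python
def matches(match_labels: dict[str, str], labels: dict[str, str]) -> bool:
    """Model a Kubernetes matchLabels selector.

    The selector matches when every key/value pair it lists is present in the
    object's labels; extra labels on the object do not matter. An empty
    selector matches every object. matchExpressions are not modeled.
    """
    return all(labels.get(key) == value for key, value in match_labels.items())


if __name__ == "__main__":
    grafana_agent_selector = {"agent": "grafana-agent"}
    metrics_instance_labels = {"agent": "grafana-agent"}
    unlabeled_instance = {"team": "platform"}
    print(matches(grafana_agent_selector, metrics_instance_labels))  # True
    print(matches(grafana_agent_selector, unlabeled_instance))  # False
```

If a MetricsInstance, LogsInstance, or Integration lacks the agent: grafana-agent label, the selector does not match it and Grafana Agent does not run it, which typically shows up as missing data rather than as an error.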

Save the following to a file and replace these placeholders in the file:

- NAMESPACE with the namespace for Grafana Agent

- CLUSTER_NAME with the name of your cluster

Then deploy the file using kubectl apply -f <file.yaml>.

---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: grafana-agent
  namespace: NAMESPACE
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: grafana-agent
rules:
- apiGroups:
  - ""
  resources:
  - nodes
  - nodes/proxy
  - nodes/metrics
  - services
  - endpoints
  - pods
  - events
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - networking.k8s.io
  resources:
  - ingresses
  verbs:
  - get
  - list
  - watch
- nonResourceURLs:
  - /metrics
  - /metrics/cadvisor
  verbs:
  - get
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: grafana-agent
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: grafana-agent
subjects:
- kind: ServiceAccount
  name: grafana-agent
  namespace: NAMESPACE
---
apiVersion: monitoring.grafana.com/v1alpha1
kind: GrafanaAgent
metadata:
  name: grafana-agent
  namespace: NAMESPACE
spec:
  image: grafana/agent:v0.35.3
  integrations:
    selector:
      matchLabels:
        agent: grafana-agent
  metrics:
    externalLabels:
      cluster: CLUSTER_NAME
    instanceSelector:
      matchLabels:
        agent: grafana-agent
    scrapeInterval: 60s
  logs:
    instanceSelector:
      matchLabels:
        agent: grafana-agent
  serviceAccountName: grafana-agent

Deploy custom resources to collect metrics
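Each ServiceMonitor deployed in this section has a metricRelabelings rule with action: keep on the __name__ label, so only metrics whose names match the long regex are sent to your Prometheus instance; everything else is dropped after scraping. Prometheus anchors relabeling regexes at both ends, so kube_node.* keeps kube_node_info, while the alternative kube_pod_info does not keep a longer name that merely starts with it. The following Python sketch mimics that filter with a short excerpt of the allowlist. The keep helper and the made-up metric name kube_pod_info_extra are illustrative only; Prometheus uses Go's RE2 syntax, which behaves the same as Python's re for simple alternations like these.

```python
import re

# Short excerpt of the allowlist; the full pattern is in the ServiceMonitors.
ALLOWLIST = "kube_pod_info|kube_node.*|container_cpu_usage_seconds_total"


def keep(metric_names: list[str], regex: str = ALLOWLIST) -> list[str]:
    """Mimic a Prometheus keep relabel rule applied to the __name__ label.

    Prometheus anchors relabel regexes at both ends, which is what
    re.fullmatch does here.
    """
    pattern = re.compile(regex)
    return [name for name in metric_names if pattern.fullmatch(name)]


if __name__ == "__main__":
    scraped = ["kube_pod_info", "kube_node_info", "kube_pod_info_extra", "go_gc_duration_seconds"]
    print(keep(scraped))  # ['kube_pod_info', 'kube_node_info']
```

Because the rule keeps only matching names, a metric that you want to use in your own dashboards or alerts has to be added to the regex as well, or it never reaches your Prometheus instance.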

Save the following to a file and replace these placeholders in the file:

- NAMESPACE with the namespace for Grafana Agent

- CLUSTER_NAME with the name of your cluster

- METRICS_HOST with the URL of your Prometheus instance, including the https:// prefix

- METRICS_USERNAME with the username for your Prometheus instance

- METRICS_PASSWORD with your Access Policy Token from earlier

Then deploy the file using kubectl apply -f <file.yaml>.

---
apiVersion: v1
kind: Secret
metadata:
  name: metrics-secret
  namespace: NAMESPACE
type: Opaque
stringData:
  password: "METRICS_PASSWORD"
  username: "METRICS_USERNAME"
---
apiVersion: monitoring.grafana.com/v1alpha1
kind: Integration
metadata:
  labels:
    agent: grafana-agent
  name: node-exporter
  namespace: NAMESPACE
spec:
  config:
    autoscrape:
      enable: true
      metrics_instance: NAMESPACE/grafana-agent-metrics
    procfs_path: /host/proc
    rootfs_path: /host/root
    sysfs_path: /host/sys
  name: node_exporter
  type:
    allNodes: true
    unique: true
  volumeMounts:
  - mountPath: /host/root
    name: rootfs
  - mountPath: /host/sys
    name: sysfs
  - mountPath: /host/proc
    name: procfs
  volumes:
  - hostPath:
      path: /
    name: rootfs
  - hostPath:
      path: /sys
    name: sysfs
  - hostPath:
      path: /proc
    name: procfs
---
apiVersion: monitoring.grafana.com/v1alpha1
kind: MetricsInstance
metadata:
  labels:
    agent: grafana-agent
  name: grafana-agent-metrics
  namespace: NAMESPACE
spec:
  podMonitorNamespaceSelector: {}
  podMonitorSelector:
    matchLabels:
      instance: primary
  remoteWrite:
  - basicAuth:
      password:
        key: password
        name: metrics-secret
      username:
        key: username
        name: metrics-secret
    url: METRICS_HOST/api/prom/push
  serviceMonitorNamespaceSelector: {}
  serviceMonitorSelector:
    matchLabels:
      instance: primary

---
apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  labels:
    instance: primary
  name: cadvisor-monitor
  namespace: NAMESPACE
spec:
  endpoints:
  - bearerTokenFile: /var/run/secrets/kubernetes.io/serviceaccount/token
    honorLabels: true
    interval: 60s
    metricRelabelings:
    - action: keep
      regex: kube_pod_container_status_waiting_reason|cluster:namespace:pod_cpu:active:kube_pod_container_resource_limits|kube_daemonset_status_desired_number_scheduled|kubelet_certificate_manager_client_ttl_seconds|kube_node_status_condition|kubelet_node_config_error|rest_client_requests_total|kube_horizontalpodautoscaler_status_current_replicas|node_namespace_pod_container:container_memory_cache|kubelet_cgroup_manager_duration_seconds_count|kube_horizontalpodautoscaler_status_desired_replicas|container_fs_writes_total|kube_daemonset_status_current_number_scheduled|kube_pod_info|kubelet_pod_worker_duration_seconds_count|kubelet_pleg_relist_interval_seconds_bucket|kube_job_failed|kube_replicaset_owner|namespace_workload_pod:kube_pod_owner:relabel|kubelet_runtime_operations_errors_total|volume_manager_total_volumes|kubelet_server_expiration_renew_errors|container_memory_rss|container_memory_working_set_bytes|kubelet_running_container_count|container_fs_writes_bytes_total|namespace_cpu:kube_pod_container_resource_requests:sum|kubelet_running_pod_count|kube_statefulset_status_replicas_updated|kube_job_status_active|kube_node_status_capacity|kubelet_volume_stats_inodes|kube_statefulset_status_replicas|kube_deployment_status_replicas_updated|kube_node_status_allocatable|kube_statefulset_status_replicas_ready|node_namespace_pod_container:container_memory_working_set_bytes|kubelet_pod_worker_duration_seconds_bucket|kubelet_runtime_operations_total|kube_horizontalpodautoscaler_spec_max_replicas|kube_statefulset_status_current_revision|node_namespace_pod_container:container_memory_rss|kubelet_pleg_relist_duration_seconds_count|kube_daemonset_status_updated_number_scheduled|kube_horizontalpodautoscaler_spec_min_replicas|container_cpu_usage_seconds_total|kubelet_node_name|kubelet_certificate_manager_client_expiration_renew_errors|kube_pod_owner|container_network_transmit_packets_dropped_total|node_quantile:kubelet_pleg_relist_duration_seconds:histogram_quantile|kube_persistentvolumeclaim_resource_requests_storage_bytes|storage_operation_errors_total|kubelet_cgroup_manager_duration_seconds_bucket|kubelet_pleg_relist_duration_seconds_bucket|go_goroutines|kube_statefulset_status_observed_generation|container_fs_reads_total|container_cpu_cfs_periods_total|kubelet_running_containers|kube_daemonset_status_number_misscheduled|container_network_receive_packets_total|kube_node_info|kube_namespace_status_phase|process_resident_memory_bytes|kube_pod_status_phase|container_network_transmit_packets_total|cluster:namespace:pod_cpu:active:kube_pod_container_resource_requests|container_memory_cache|kubelet_running_pods|kube_job_status_start_time|kube_node_spec_taint|container_network_receive_packets_dropped_total|kube_pod_container_resource_requests|kubelet_volume_stats_available_bytes|node_filesystem_avail_bytes|kube_statefulset_metadata_generation|node_namespace_pod_container:container_cpu_usage_seconds_total:sum_irate|cluster:namespace:pod_memory:active:kube_pod_container_resource_requests|cluster:namespace:pod_memory:active:kube_pod_container_resource_limits|kube_daemonset_status_number_available|namespace_cpu:kube_pod_container_resource_limits:sum|container_fs_reads_bytes_total|kube_pod_container_resource_limits|node_namespace_pod_container:container_memory_swap|process_cpu_seconds_total|container_network_receive_bytes_total|kubelet_volume_stats_capacity_bytes|kubelet_volume_stats_used_bytes|kubelet_volume_stats_inodes_used|kube_statefulset_replicas|kube_statefulset_status_update_revision|kube_deployment_status_replicas_available|kube_deployment_metadata_generation|kubernetes_build_info|namespace_memory:kube_pod_container_resource_limits:sum|namespace_workload_pod|kube_pod_status_reason|kube_deployment_status_observed_generation|container_memory_swap|kube_deployment_spec_replicas|node_filesystem_size_bytes|kubelet_pod_start_duration_seconds_bucket|namespace_memory:kube_pod_container_resource_requests:sum|machine_memory_bytes|kube_resourcequota|container_cpu_cfs_throttled_periods_total|container_network_transmit_bytes_total|storage_operation_duration_seconds_count|kubelet_pod_start_duration_seconds_count|kubelet_certificate_manager_server_ttl_seconds|kube_namespace_status_phase|container_cpu_usage_seconds_total|kube_pod_status_phase|kube_pod_start_time|kube_pod_container_status_restarts_total|kube_pod_container_info|kube_pod_container_status_waiting_reason|kube_daemonset.*|kube_replicaset.*|kube_statefulset.*|kube_job.*|kube_node.*|node_namespace_pod_container:container_cpu_usage_seconds_total:sum_irate|cluster:namespace:pod_cpu:active:kube_pod_container_resource_requests|namespace_cpu:kube_pod_container_resource_requests:sum|node_cpu.*|node_memory.*|node_filesystem.*|node_network_transmit_bytes_total
      sourceLabels:
      - __name__
    path: /metrics/cadvisor
    port: https-metrics
    relabelings:
    - sourceLabels:
      - __metrics_path__
      targetLabel: metrics_path
    - action: replace
      replacement: integrations/kubernetes/cadvisor
      targetLabel: job
    scheme: https
    tlsConfig:
      insecureSkipVerify: true
  namespaceSelector:
    any: true
  selector:
    matchLabels:
      app.kubernetes.io/name: kubelet

---
apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  labels:
    instance: primary
  name: kubelet-monitor
  namespace: NAMESPACE
spec:
  endpoints:
  - bearerTokenFile: /var/run/secrets/kubernetes.io/serviceaccount/token
    honorLabels: true
    interval: 60s
    metricRelabelings:
    - action: keep
      regex: kube_pod_container_status_waiting_reason|cluster:namespace:pod_cpu:active:kube_pod_container_resource_limits|kube_daemonset_status_desired_number_scheduled|kubelet_certificate_manager_client_ttl_seconds|kube_node_status_condition|kubelet_node_config_error|rest_client_requests_total|kube_horizontalpodautoscaler_status_current_replicas|node_namespace_pod_container:container_memory_cache|kubelet_cgroup_manager_duration_seconds_count|kube_horizontalpodautoscaler_status_desired_replicas|container_fs_writes_total|kube_daemonset_status_current_number_scheduled|kube_pod_info|kubelet_pod_worker_duration_seconds_count|kubelet_pleg_relist_interval_seconds_bucket|kube_job_failed|kube_replicaset_owner|namespace_workload_pod:kube_pod_owner:relabel|kubelet_runtime_operations_errors_total|volume_manager_total_volumes|kubelet_server_expiration_renew_errors|container_memory_rss|container_memory_working_set_bytes|kubelet_running_container_count|container_fs_writes_bytes_total|namespace_cpu:kube_pod_container_resource_requests:sum|kubelet_running_pod_count|kube_statefulset_status_replicas_updated|kube_job_status_active|kube_node_status_capacity|kubelet_volume_stats_inodes|kube_statefulset_status_replicas|kube_deployment_status_replicas_updated|kube_node_status_allocatable|kube_statefulset_status_replicas_ready|node_namespace_pod_container:container_memory_working_set_bytes|kubelet_pod_worker_duration_seconds_bucket|kubelet_runtime_operations_total|kube_horizontalpodautoscaler_spec_max_replicas|kube_statefulset_status_current_revision|node_namespace_pod_container:container_memory_rss|kubelet_pleg_relist_duration_seconds_count|kube_daemonset_status_updated_number_scheduled|kube_horizontalpodautoscaler_spec_min_replicas|container_cpu_usage_seconds_total|kubelet_node_name|kubelet_certificate_manager_client_expiration_renew_errors|kube_pod_owner|container_network_transmit_packets_dropped_total|node_quantile:kubelet_pleg_relist_duration_seconds:histogram_quantile|kube_persistentvolumeclaim_resource_requests_storage_bytes|storage_operation_errors_total|kubelet_cgroup_manager_duration_seconds_bucket|kubelet_pleg_relist_duration_seconds_bucket|go_goroutines|kube_statefulset_status_observed_generation|container_fs_reads_total|container_cpu_cfs_periods_total|kubelet_running_containers|kube_daemonset_status_number_misscheduled|container_network_receive_packets_total|kube_node_info|kube_namespace_status_phase|process_resident_memory_bytes|kube_pod_status_phase|container_network_transmit_packets_total|cluster:namespace:pod_cpu:active:kube_pod_container_resource_requests|container_memory_cache|kubelet_running_pods|kube_job_status_start_time|kube_node_spec_taint|container_network_receive_packets_dropped_total|kube_pod_container_resource_requests|kubelet_volume_stats_available_bytes|node_filesystem_avail_bytes|kube_statefulset_metadata_generation|node_namespace_pod_container:container_cpu_usage_seconds_total:sum_irate|cluster:namespace:pod_memory:active:kube_pod_container_resource_requests|cluster:namespace:pod_memory:active:kube_pod_container_resource_limits|kube_daemonset_status_number_available|namespace_cpu:kube_pod_container_resource_limits:sum|container_fs_reads_bytes_total|kube_pod_container_resource_limits|node_namespace_pod_container:container_memory_swap|process_cpu_seconds_total|container_network_receive_bytes_total|kubelet_volume_stats_capacity_bytes|kubelet_volume_stats_used_bytes|kubelet_volume_stats_inodes_used|kube_statefulset_replicas|kube_statefulset_status_update_revision|kube_deployment_status_replicas_available|kube_deployment_metadata_generation|kubernetes_build_info|namespace_memory:kube_pod_container_resource_limits:sum|namespace_workload_pod|kube_pod_status_reason|kube_deployment_status_observed_generation|container_memory_swap|kube_deployment_spec_replicas|node_filesystem_size_bytes|kubelet_pod_start_duration_seconds_bucket|namespace_memory:kube_pod_container_resource_requests:sum|machine_memory_bytes|kube_resourcequota|container_cpu_cfs_throttled_periods_total|container_network_transmit_bytes_total|storage_operation_duration_seconds_count|kubelet_pod_start_duration_seconds_count|kubelet_certificate_manager_server_ttl_seconds|kube_namespace_status_phase|container_cpu_usage_seconds_total|kube_pod_status_phase|kube_pod_start_time|kube_pod_container_status_restarts_total|kube_pod_container_info|kube_pod_container_status_waiting_reason|kube_daemonset.*|kube_replicaset.*|kube_statefulset.*|kube_job.*|kube_node.*|node_namespace_pod_container:container_cpu_usage_seconds_total:sum_irate|cluster:namespace:pod_cpu:active:kube_pod_container_resource_requests|namespace_cpu:kube_pod_container_resource_requests:sum|node_cpu.*|node_memory.*|node_filesystem.*|node_network_transmit_bytes_total
      sourceLabels:
      - __name__
    path: /metrics
    port: https-metrics
    relabelings:
    - sourceLabels:
      - __metrics_path__
      targetLabel: metrics_path
    - action: replace
      replacement: integrations/kubernetes/kubelet
      targetLabel: job
    scheme: https
    tlsConfig:
      insecureSkipVerify: true
  namespaceSelector:
    any: true
  selector:
    matchLabels:
      app.kubernetes.io/name: kubelet

Deploy custom resources to collect logs
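The PodLogs resource in this section builds each container's log file path with a relabel rule: the values of __meta_kubernetes_pod_uid and __meta_kubernetes_pod_container_name are joined with the / separator, matched against the default relabel regex (.*), and substituted for $1 in /var/log/pods/*$1/*.log. The kubelet typically writes container logs to /var/log/pods/<namespace>_<pod>_<uid>/<container>/<n>.log, which is why the pattern starts with a * glob before the UID. The following Python sketch reproduces that string manipulation; the function names and the pod UID are made up for illustration.

```python
import re
from fnmatch import fnmatchcase


def log_path_glob(pod_uid: str, container: str, separator: str = "/") -> str:
    """Reproduce the __path__ relabel rule from the PodLogs resource.

    The source label values are joined with `separator`, matched against the
    default relabel regex (.*), and the capture group replaces $1.
    """
    joined = separator.join([pod_uid, container])
    match = re.fullmatch(r"(.*)", joined)
    return "/var/log/pods/*$1/*.log".replace("$1", match.group(1))


def is_tailed(log_file: str, pod_uid: str, container: str) -> bool:
    """Check whether a log file path matches the glob built for a container."""
    return fnmatchcase(log_file, log_path_glob(pod_uid, container))


if __name__ == "__main__":
    # Made-up pod UID and pod name for illustration.
    uid = "3f2c6a1e-0000-4000-8000-000000000000"
    print(log_path_glob(uid, "nginx"))
    print(is_tailed(f"/var/log/pods/default_web_{uid}/nginx/0.log", uid, "nginx"))
```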

Save the following to a file and replace these placeholders in the file:

- NAMESPACE with the namespace for Grafana Agent

- CLUSTER_NAME with the name of your cluster

- LOGS_HOST with the URL of your Loki instance, including the https:// prefix

- LOGS_USERNAME with the username for your Loki instance

- LOGS_PASSWORD with your Access Policy Token from earlier

Then deploy the file using kubectl apply -f <file.yaml>.

---
apiVersion: v1
kind: Secret
metadata:
  name: logs-secret
  namespace: NAMESPACE
type: Opaque
stringData:
  password: "LOGS_PASSWORD"
  username: "LOGS_USERNAME"
---
apiVersion: monitoring.grafana.com/v1alpha1
kind: LogsInstance
metadata:
  labels:
    agent: grafana-agent
  name: grafana-agent-logs
  namespace: NAMESPACE
spec:
  clients:
  - basicAuth:
      password:
        key: password
        name: logs-secret
      username:
        key: username
        name: logs-secret
    externalLabels:
      cluster: CLUSTER_NAME
    url: LOGS_HOST/loki/api/v1/push
  podLogsNamespaceSelector: {}
  podLogsSelector:
    matchLabels:
      instance: primary
---
apiVersion: monitoring.grafana.com/v1alpha1
kind: PodLogs
metadata:
  labels:
    instance: primary
  name: kubernetes-logs
  namespace: NAMESPACE
spec:
  namespaceSelector:
    any: true
  pipelineStages:
  - cri: {}
  relabelings:
  - sourceLabels:
    - __meta_kubernetes_pod_node_name
    targetLabel: __host__
  - action: replace
    sourceLabels:
    - __meta_kubernetes_namespace
    targetLabel: namespace
  - action: replace
    sourceLabels:
    - __meta_kubernetes_pod_name
    targetLabel: pod
  - action: replace
    sourceLabels:
    - __meta_kubernetes_pod_container_name
    targetLabel: container
  - replacement: /var/log/pods/*$1/*.log
    separator: /
    sourceLabels:
    - __meta_kubernetes_pod_uid
    - __meta_kubernetes_pod_container_name
    targetLabel: __path__
  selector:
    matchLabels: {}

Deploy eventhandler to collect events
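The eventhandler Integration in this section writes a cache file at cache_path (/etc/eventhandler/eventhandler.cache), which it uses to keep track of the events it has already shipped so that it can resume after a restart. That file only survives a pod restart if it lives on the PersistentVolumeClaim, that is, under the mountPath of the agent-eventhandler volume. The following Python sketch shows that consistency check. The persists_on_volume helper is hypothetical, and it needs Python 3.9 or later for PurePath.is_relative_to.

```python
from pathlib import PurePosixPath


def persists_on_volume(cache_path: str, mount_path: str) -> bool:
    """Return True if cache_path is inside mount_path.

    Paths are compared component by component, so /etc/eventhandler2/x is
    not treated as being inside /etc/eventhandler.
    """
    return PurePosixPath(cache_path).is_relative_to(PurePosixPath(mount_path))


if __name__ == "__main__":
    # Paths taken from the agent-eventhandler Integration manifest.
    print(persists_on_volume("/etc/eventhandler/eventhandler.cache", "/etc/eventhandler"))  # True
    print(persists_on_volume("/tmp/eventhandler.cache", "/etc/eventhandler"))  # False
```

If you change either cache_path or the volume's mountPath, change the other to match; otherwise the cache is written to the container's ephemeral filesystem.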

Save the following to a file. Replace NAMESPACE with the namespace you used for Grafana Agent, and use kubectl apply -f <file.yaml> to deploy the file.

---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: agent-eventhandler
  namespace: NAMESPACE
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
---
apiVersion: monitoring.grafana.com/v1alpha1
kind: Integration
metadata:
  labels:
    agent: grafana-agent
  name: agent-eventhandler
  namespace: NAMESPACE
spec:
  config:
    cache_path: /etc/eventhandler/eventhandler.cache
    logs_instance: NAMESPACE/grafana-agent-logs
  name: eventhandler
  type:
    unique: true
  volumeMounts:
  - mountPath: /etc/eventhandler
    name: agent-eventhandler
  volumes:
  - name: agent-eventhandler
    persistentVolumeClaim:
      claimName: agent-eventhandler

Deploy custom resources to collect cost metrics
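The opencost Secret in this section, like metrics-secret and logs-secret, uses stringData, which lets you write credentials as plain strings. The API server stores them base64-encoded under data, and that encoded form is what kubectl get secret opencost -n NAMESPACE -o yaml shows. The following Python sketch performs the same encoding and decoding, which can help when you check that a stored token matches the one you created. The helper names are hypothetical and the credential values are placeholders.

```python
import base64


def to_data(string_data: dict[str, str]) -> dict[str, str]:
    """Encode stringData values as they appear under a Secret's data field."""
    return {
        key: base64.b64encode(value.encode("utf-8")).decode("ascii")
        for key, value in string_data.items()
    }


def from_data(data: dict[str, str]) -> dict[str, str]:
    """Decode a Secret's data field back into plain strings."""
    return {key: base64.b64decode(value).decode("utf-8") for key, value in data.items()}


if __name__ == "__main__":
    # Placeholder values; do not paste real credentials into scripts.
    encoded = to_data({"DB_BASIC_AUTH_USERNAME": "123456", "DB_BASIC_AUTH_PW": "example-token"})
    print(encoded)
    print(from_data(encoded))
```

Base64 is an encoding, not encryption, so anyone who can read the Secret can recover the token.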

Save the following to a file and replace these placeholders in the file:

- NAMESPACE with the namespace for Grafana Agent

- CLUSTER_NAME with the name of your cluster

- METRICS_HOST with the URL of your Prometheus instance, including the https:// prefix

- METRICS_USERNAME with the username for your Prometheus instance

- METRICS_PASSWORD with your Access Policy Token from earlier

Then deploy the file using kubectl apply -f <file.yaml>.

---
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    app.kubernetes.io/name: opencost
  name: opencost
  namespace: NAMESPACE
automountServiceAccountToken: true
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    app.kubernetes.io/name: opencost
  name: opencost
  namespace: NAMESPACE
stringData:
  DB_BASIC_AUTH_USERNAME: "METRICS_USERNAME"
  DB_BASIC_AUTH_PW: "METRICS_PASSWORD"
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    app.kubernetes.io/name: opencost
  name: opencost
  namespace: NAMESPACE
rules:
- apiGroups:
  - ""
  resources:
  - configmaps
  - deployments
  - nodes
  - pods
  - services
  - resourcequotas
  - replicationcontrollers
  - limitranges
  - persistentvolumeclaims
  - persistentvolumes
  - namespaces
  - endpoints
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - extensions
  resources:
  - daemonsets
  - deployments
  - replicasets
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - apps
  resources:
  - statefulsets
  - deployments
  - daemonsets
  - replicasets
  verbs:
  - list
  - watch
- apiGroups:
  - batch
  resources:
  - cronjobs
  - jobs
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - autoscaling
  resources:
  - horizontalpodautoscalers
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - policy
  resources:
  - poddisruptionbudgets
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - storage.k8s.io
  resources:
  - storageclasses
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    app.kubernetes.io/name: opencost
  name: opencost
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: opencost
subjects:
- kind: ServiceAccount
  name: opencost
  namespace: NAMESPACE

---

apiVersion: v1

kind: Service

metadata:

labels:

name: opencost

name: opencost

namespace: NAMESPACE

spec:

selector:

name: opencost

type: ClusterIP

ports:

- name: http

port: 9003

targetPort: 9003

---

apiVersion: apps/v1

kind: Deployment

metadata:

labels:

name: opencost

name: opencost

namespace: NAMESPACE

spec:

replicas: 1

selector:

matchLabels:

name: opencost

strategy:

rollingUpdate:

maxSurge: 1

maxUnavailable: 1

type: RollingUpdate

template:

metadata:

labels:

name: opencost

spec:

securityContext: {}

serviceAccountName: opencost

tolerations: []

containers:

- image: quay.io/kubecost1/kubecost-cost-model:prod-1.103.1

name: opencost

resources:

limits:

cpu: 999m

memory: 1Gi

requests:

cpu: 10m

memory: 55Mi

readinessProbe:

httpGet:

path: /healthz

port: 9003

initialDelaySeconds: 30

periodSeconds: 10

failureThreshold: 200

livenessProbe:

httpGet:

path: /healthz

port: 9003

initialDelaySeconds: 30

periodSeconds: 10

failureThreshold: 10

ports:

- containerPort: 9003

name: http

securityContext: {}

env:

- name: PROMETHEUS_SERVER_ENDPOINT

value: METRICS_HOST/api/prom

- name: CLUSTER_ID

value: CLUSTER_NAME

- name: DB_BASIC_AUTH_USERNAME

valueFrom:

secretKeyRef:

name: opencost

key: DB_BASIC_AUTH_USERNAME

- name: DB_BASIC_AUTH_PW

valueFrom:

secretKeyRef:

name: opencost

key: DB_BASIC_AUTH_PW

- name: CLOUD_PROVIDER_API_KEY

value: AIzaSyD29bGxmHAVEOBYtgd8sYM2gM2ekfxQX4U

- name: EMIT_KSM_V1_METRICS

value: "false"

- name: EMIT_KSM_V1_METRICS_ONLY

value: "true"

- name: PROM_CLUSTER_ID_LABEL

value: cluster

imagePullPolicy: Always

---

apiVersion: monitoring.coreos.com/v1

kind: ServiceMonitor

metadata:

labels:

instance: primary

name: opencost

namespace: NAMESPACE

spec:

endpoints:

- honorLabels: true

interval: 60s

path: /metrics

port: http

relabelings:

- action: replace

replacement: integrations/kubernetes/opencost

targetLabel: job

metricRelabelings:

- action: keep

regex: container_cpu_allocation|container_gpu_allocation|container_memory_allocation_bytes|deployment_match_labels|kubecost_cluster_info|kubecost_cluster_management_cost|kubecost_cluster_memory_working_set_bytes|kubecost_http_requests_total|kubecost_http_response_size_bytes|kubecost_http_response_time_seconds|kubecost_load_balancer_cost|kubecost_network_internet_egress_cost|kubecost_network_region_egress_cost|kubecost_network_zone_egress_cost|kubecost_node_is_spot|node_cpu_hourly_cost|node_gpu_count|node_gpu_hourly_cost|node_ram_hourly_cost|node_total_hourly_cost|opencost_build_info|pod_pvc_allocation|pv_hourly_cost|service_selector_labels|statefulSet_match_labels

sourceLabels:

- __name__

scheme: http

namespaceSelector:

matchNames:

- NAMESPACE

selector:

matchLabels:

name: opencostDone with setup

To finish up:

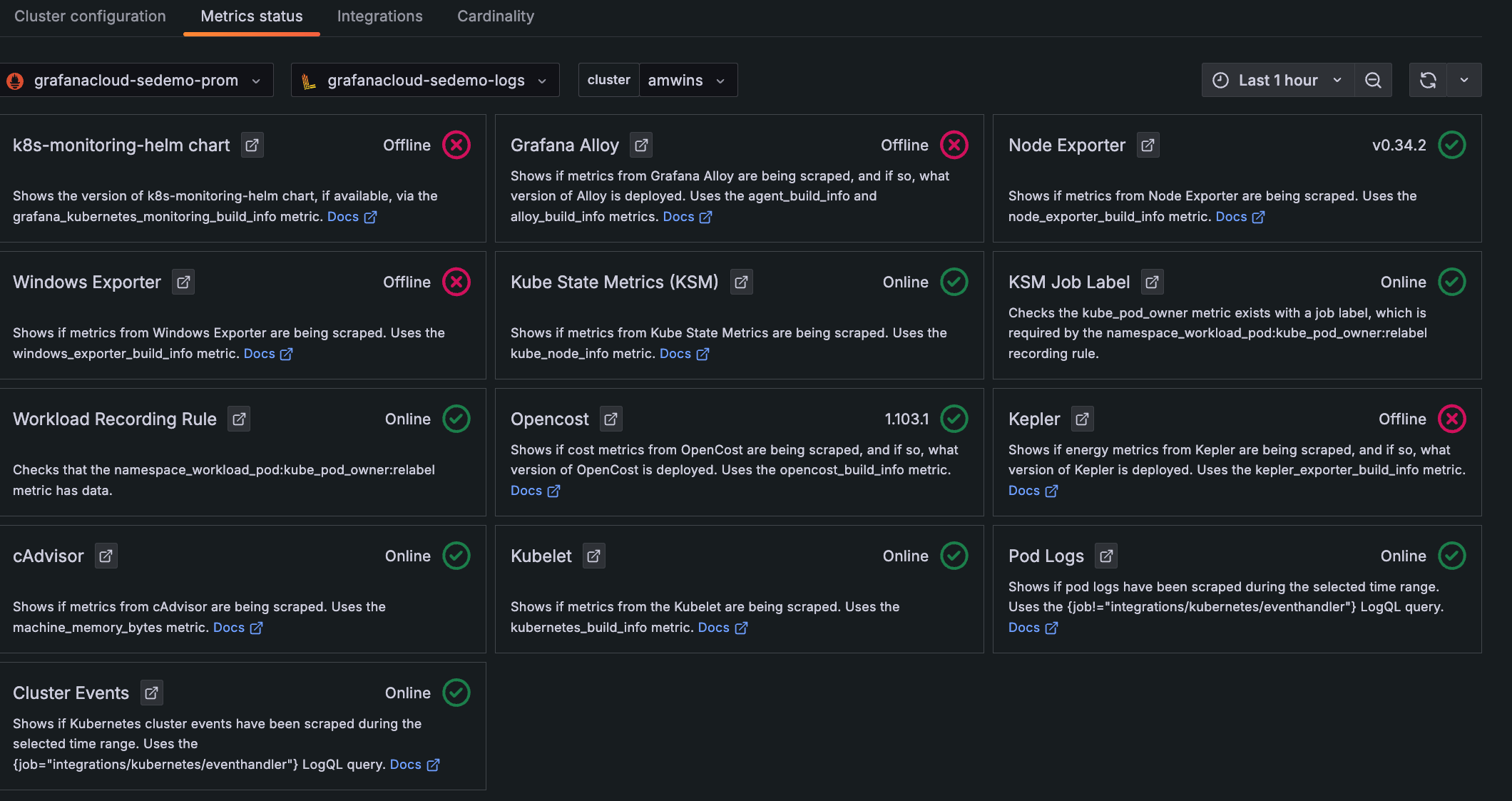

Navigate to Kubernetes Monitoring, and click Configuration on the main menu.

Click the Metrics status tab to view the data status. Data appears as the system components begin scraping and sending it to Grafana Cloud.

[Image: Metrics status tab with status indicators for one Cluster, showing the description and status of each configured item and whether it is online.]

Click Kubernetes Monitoring on the main menu to view the home page and see any issues currently highlighted. You can drill into the data from here.
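If cost metrics do not show up, it can help to check which OpenCost series survive the ServiceMonitor's `metricRelabelings` rule, which uses `action: keep` on `__name__` and drops every series whose metric name does not match the regex. Prometheus anchors relabeling regexes at both ends, so `grep -E -x` (whole-line match) approximates the filter locally. This is a sketch: `kept_opencost_metrics` is a hypothetical helper that expects Prometheus exposition text on stdin, and `grep` uses POSIX extended regexes rather than Prometheus's RE2, which behave the same for this plain alternation of names.

```shell
# Regex copied verbatim from the opencost ServiceMonitor's keep rule.
OPENCOST_KEEP_REGEX='container_cpu_allocation|container_gpu_allocation|container_memory_allocation_bytes|deployment_match_labels|kubecost_cluster_info|kubecost_cluster_management_cost|kubecost_cluster_memory_working_set_bytes|kubecost_http_requests_total|kubecost_http_response_size_bytes|kubecost_http_response_time_seconds|kubecost_load_balancer_cost|kubecost_network_internet_egress_cost|kubecost_network_region_egress_cost|kubecost_network_zone_egress_cost|kubecost_node_is_spot|node_cpu_hourly_cost|node_gpu_count|node_gpu_hourly_cost|node_ram_hourly_cost|node_total_hourly_cost|opencost_build_info|pod_pvc_allocation|pv_hourly_cost|service_selector_labels|statefulSet_match_labels'

# kept_opencost_metrics (hypothetical helper): print the distinct metric
# names from stdin that the keep rule would retain.
kept_opencost_metrics() {
  # 1. Drop "# HELP" and "# TYPE" comment lines.
  # 2. Strip labels and sample values, leaving only the metric name.
  # 3. De-duplicate, then keep whole-line matches only, mirroring
  #    Prometheus's implicit anchoring of relabel regexes.
  grep -v '^#' | sed -e 's/[{ ].*$//' | sort -u | grep -E -x "$OPENCOST_KEEP_REGEX"
}

# Example usage (assumes the opencost Service from the manifest above):
#   kubectl port-forward -n NAMESPACE svc/opencost 9003:9003 &
#   curl -s http://localhost:9003/metrics | kept_opencost_metrics
```

An empty result means OpenCost is running but exposing none of the names the ServiceMonitor keeps, which points at the exporter rather than at Grafana Cloud.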

Explore your Kubernetes infrastructure:

- Click Cluster navigation in the menu, then click your namespace to view the grafana-agent StatefulSet, the grafana-agent-logs DaemonSet, and the ksm-kube-state-metrics Deployment. Click the kube-system namespace to see Kubernetes-specific resources.

- Click the Nodes tab, then click a node in your cluster to view its condition, utilization, and pod density.
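If you prefer the command line, you can list the same workloads with `kubectl`. This is a minimal sketch, assuming the resource names from the list above and the namespace you used throughout. `show_agent_workloads` and `show_nodes` are hypothetical helpers, and the `KUBECTL` variable exists only so you can swap in another binary, or `echo` for a dry run.

```shell
# Allow overriding the kubectl binary, e.g. KUBECTL=echo for a dry run.
KUBECTL="${KUBECTL:-kubectl}"

# show_agent_workloads NAMESPACE (hypothetical helper): list the Agent
# StatefulSet, the logs DaemonSet, and the kube-state-metrics Deployment
# in a single `kubectl get` call using TYPE/NAME arguments.
show_agent_workloads() {
  "$KUBECTL" get \
    statefulset/grafana-agent \
    daemonset/grafana-agent-logs \
    deployment/ksm-kube-state-metrics \
    --namespace "$1"
}

# show_nodes (hypothetical helper): node status, roles, and addresses.
show_nodes() {
  "$KUBECTL" get nodes -o wide
}

# Example usage:
#   show_agent_workloads monitoring
#   show_nodes
```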