Configure Logs with Lambda

Send logs to Grafana Cloud Logs from multiple AWS services using a lambda-promtail function. Complete the following steps to create the lambda-promtail function.

Navigate to Logs with Lambda

Navigate to your Grafana Cloud portal.

In your Grafana Cloud stack, expand Infrastructure in the main menu.

Click AWS, then click Add your AWS services.

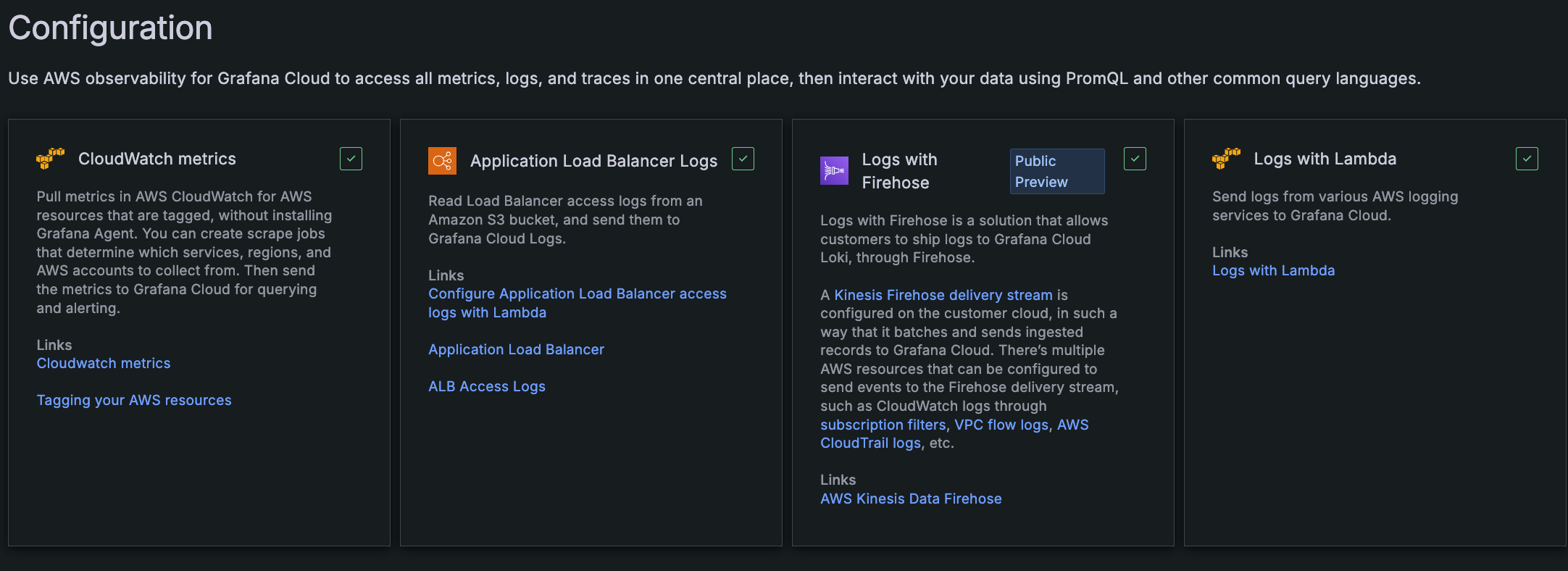

At the Configuration page, find and click the Logs with Lambda tile.

Click Configure.

Perform the subsequent steps to configure with either CloudFormation or Terraform.

Configure with CloudFormation

Complete the following process to configure with CloudFormation.

Choose method for creating AWS resources

Click Use CloudFormation.

Upload the lambda-promtail zip file to S3

- Upload the compressed lambda-promtail binary to an S3 bucket in the region you want to pull logs from. The bucket must be in the same AWS region as the Lambda function.

- Copy and run the command.

Create a Grafana.com API key

Create a Grafana.com API key with the MetricsPublisher role to authenticate with Grafana Cloud Logs.

- In the API token name box, enter your API token name.

- Click Create token to generate the token.

- Copy the generated API key.

Launch CloudFormation stack

- Click Launch CloudFormation stack.

- In AWS, paste the generated API key into the Password field.

- For the LogGroup you want to send to Grafana Cloud, specify the SubscriptionFilter.

Explore logs

- At the Grafana Configuration Details page, click Go to Explore.

- At the Explore page, select a log stream based on __aws_cloudwatch_log_group to verify logs are being forwarded correctly.

Configure with Terraform

Complete the following process to configure with Terraform.

Choose method for creating AWS resources

Click Use Terraform.

Create a Grafana.com API key

- In the API token name box, enter your API token name.

- Click Create token to generate the token.

- Copy the generated API key.

Terraform setup

Configure the AWS CLI. Remember to set the correct AWS region where lambda-promtail should run and pull logs from.
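If you prefer to pin the region in Terraform rather than rely on the AWS CLI default, a provider block along these lines is one option. This is a minimal sketch: the region value is a placeholder and is not part of the original snippets.

```hcl
# Hypothetical example: pin the AWS provider to a single region.
# Replace "us-east-1" with the region that holds your log groups,
# the Lambda function, and the S3 bucket for lambda-promtail.zip.
provider "aws" {
  region = "us-east-1"
}
```

With the region set here, the aws_region data source used in the main snippet resolves to the same region.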

Copy and paste the following snippet into a Terraform file.

```hcl
terraform {
  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 4.0"
    }
  }
}

data "aws_region" "current" {}

resource "aws_s3_object_copy" "lambda_promtail_zipfile" {
  bucket = var.s3_bucket
  key    = var.s3_key
  source = "grafanalabs-cf-templates/lambda-promtail/lambda-promtail.zip"
}

resource "aws_iam_role" "lambda_promtail_role" {
  name = "GrafanaLabsCloudWatchLogsIntegration"

  assume_role_policy = jsonencode({
    "Version" : "2012-10-17",
    "Statement" : [
      {
        "Action" : "sts:AssumeRole",
        "Principal" : {
          "Service" : "lambda.amazonaws.com"
        },
        "Effect" : "Allow",
      }
    ]
  })
}

resource "aws_iam_role_policy" "lambda_promtail_policy_logs" {
  name = "lambda-logs"
  role = aws_iam_role.lambda_promtail_role.name

  policy = jsonencode({
    "Statement" : [
      {
        "Action" : [
          "logs:CreateLogGroup",
          "logs:CreateLogStream",
          "logs:PutLogEvents",
        ],
        "Effect" : "Allow",
        "Resource" : "arn:aws:logs:*:*:*",
      }
    ]
  })
}

resource "aws_cloudwatch_log_group" "lambda_promtail_log_group" {
  name              = "/aws/lambda/GrafanaCloudLambdaPromtail"
  retention_in_days = 14
}

resource "aws_lambda_function" "lambda_promtail" {
  function_name = "GrafanaCloudLambdaPromtail"
  role          = aws_iam_role.lambda_promtail_role.arn

  timeout     = 60
  memory_size = 128

  handler = "main"
  runtime = "provided.al2023"

  s3_bucket = var.s3_bucket
  s3_key    = var.s3_key

  environment {
    variables = {
      WRITE_ADDRESS = var.write_address
      USERNAME      = var.username
      PASSWORD      = var.password
      KEEP_STREAM   = var.keep_stream
      BATCH_SIZE    = var.batch_size
      EXTRA_LABELS  = var.extra_labels
    }
  }

  depends_on = [
    aws_s3_object_copy.lambda_promtail_zipfile,
    aws_iam_role_policy.lambda_promtail_policy_logs,
    aws_cloudwatch_log_group.lambda_promtail_log_group,
  ]
}

resource "aws_lambda_function_event_invoke_config" "lambda_promtail_invoke_config" {
  function_name          = aws_lambda_function.lambda_promtail.function_name
  maximum_retry_attempts = 2
}

resource "aws_lambda_permission" "lambda_promtail_allow_cloudwatch" {
  statement_id  = "lambda-promtail-allow-cloudwatch"
  action        = "lambda:InvokeFunction"
  function_name = aws_lambda_function.lambda_promtail.function_name
  principal     = "logs.${data.aws_region.current.name}.amazonaws.com"
}

# This block allows for easily subscribing to multiple log groups via the `log_group_names` var.
# However, if you need to provide an actual filter_pattern for a specific log group you should
# copy this block and modify it accordingly.
resource "aws_cloudwatch_log_subscription_filter" "lambda_promtail_logfilter" {
  for_each        = toset(var.log_group_names)
  name            = "lambda_promtail_logfilter_${each.value}"
  log_group_name  = each.value
  destination_arn = aws_lambda_function.lambda_promtail.arn
  # required but can be empty string
  filter_pattern = ""
  depends_on     = [aws_iam_role_policy.lambda_promtail_policy_logs]
}

output "role_arn" {
  value       = aws_lambda_function.lambda_promtail.arn
  description = "The ARN of the Lambda function that runs lambda-promtail."
}
```

Copy and paste the following snippet into a variables.tf file.

```hcl
variable "write_address" {
  type        = string
  description = "This is the Grafana Cloud Loki URL that logs will be forwarded to."
  default     = ""
}

variable "username" {
  type        = string
  description = "The basic auth username for Grafana Cloud Loki."
  default     = ""
}

variable "password" {
  type        = string
  description = "The basic auth password for Grafana Cloud Loki (your Grafana.com API Key)."
  sensitive   = true
  default     = ""
}

variable "s3_bucket" {
  type        = string
  description = "The name of the bucket where to upload the 'lambda-promtail.zip' file."
  default     = ""
}

variable "s3_key" {
  type        = string
  description = "The desired path where to upload the 'lambda-promtail.zip' file (defaults to the root folder)."
  default     = "lambda-promtail.zip"
}

variable "log_group_names" {
  type        = list(string)
  description = "List of CloudWatch Log Group names to create Subscription Filters for (ex. /aws/lambda/my-log-group)."
  default     = []
}

variable "keep_stream" {
  type        = string
  description = "Determines whether to keep the CloudWatch Log Stream value as a Loki label when writing logs from lambda-promtail."
  default     = "false"
}

variable "extra_labels" {
  type        = string
  description = "Comma separated list of extra labels, in the format 'name1,value1,name2,value2,...,nameN,valueN' to add to entries forwarded by lambda-promtail."
  default     = ""
}

variable "batch_size" {
  type        = string
  description = "Determines when to flush the batch of logs (bytes)."
  default     = ""
}
```

Return to the Grafana Configuration Details page.

Copy and paste the write_address, username, and password into the appropriate places in the variables.tf file.

In the variables.tf file, configure variables according to their descriptions. All resources must be in the same AWS region (Log Group, Lambda function, and S3 bucket for lambda-promtail.zip).

Run the Terraform apply command:

```
terraform apply -var-file="variables.tf"
```

After the Terraform apply command has finished creating the resources, it outputs the role_arn of the Lambda function that runs lambda-promtail.
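The comment in the main snippet suggests copying the subscription filter block when one log group needs its own filter_pattern. A minimal sketch of such a copy might look like the following. The resource name, log group name, and the "ERROR" pattern are hypothetical placeholders, not values from the original configuration.

```hcl
# Hypothetical example: forward only events containing the term "ERROR"
# from a single log group. The names below are placeholders.
resource "aws_cloudwatch_log_subscription_filter" "lambda_promtail_logfilter_errors" {
  name            = "lambda_promtail_logfilter_errors"
  log_group_name  = "/aws/lambda/my-log-group"
  destination_arn = aws_lambda_function.lambda_promtail.arn
  filter_pattern  = "ERROR"
  depends_on      = [aws_iam_role_policy.lambda_promtail_policy_logs]
}
```

If you add a block like this, consider leaving that log group out of log_group_names so the same group is not subscribed twice.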

The previous Terraform snippets provide a basic configuration for lambda-promtail. For additional setup (specifically, VPC subnets and security groups), refer to this extended example Terraform file.
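As a rough illustration of what the VPC setup involves, the fragment below sketches the extra pieces, assuming two hypothetical variables (lambda_vpc_subnets and lambda_vpc_security_groups) that are not defined in the snippets above. It is not a copy of the extended example file. The vpc_config block belongs inside the existing aws_lambda_function resource rather than in a new one.

```hcl
# Hypothetical variables; names are illustrative only.
variable "lambda_vpc_subnets" {
  type        = list(string)
  description = "Subnet IDs the Lambda function should attach to."
  default     = []
}

variable "lambda_vpc_security_groups" {
  type        = list(string)
  description = "Security group IDs for the Lambda function."
  default     = []
}

# Add this block inside the existing
# resource "aws_lambda_function" "lambda_promtail" { ... } definition:
#
#   vpc_config {
#     subnet_ids         = var.lambda_vpc_subnets
#     security_group_ids = var.lambda_vpc_security_groups
#   }

# A VPC-attached function needs permission to manage network interfaces.
# Attaching the AWS managed policy is one way to grant it.
resource "aws_iam_role_policy_attachment" "lambda_promtail_vpc_access" {
  role       = aws_iam_role.lambda_promtail_role.name
  policy_arn = "arn:aws:iam::aws:policy/service-role/AWSLambdaVPCAccessExecutionRole"
}
```

A function attached to a VPC also needs a network route to Grafana Cloud, for example through a NAT gateway, or it cannot reach the write address.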

Explore logs

- At the Grafana Configuration Details page, click Go to Explore.

- At the Explore page, select a log stream based on __aws_cloudwatch_log_group to verify logs are being forwarded correctly.

Labels

CloudWatch logs sent to Grafana Cloud Logs include the following special labels:

- __aws_cloudwatch_log_group: Associated CloudWatch log group for this log

- __aws_cloudwatch_owner: AWS ID of the owner of this log

- __aws_cloudwatch_log_stream: Associated CloudWatch log stream for this log (if KEEP_STREAM is set to true)

You can specify extra labels (as key-value pairs) to be added to logs streamed by lambda-promtail. Each extra label takes the form __extra_<name>=<value>.
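For the Terraform setup, the label-related settings map to the keep_stream and extra_labels variables. The sketch below shows how the defaults in variables.tf might be filled in. The label names and values (env, prod, team, platform) are made up for illustration.

```hcl
# Hypothetical values: keep the log stream label and add two extra labels.
variable "keep_stream" {
  type        = string
  description = "Determines whether to keep the CloudWatch Log Stream value as a Loki label when writing logs from lambda-promtail."
  default     = "true"
}

variable "extra_labels" {
  type        = string
  description = "Comma separated list of extra labels, in the format 'name1,value1,name2,value2,...,nameN,valueN' to add to entries forwarded by lambda-promtail."
  default     = "env,prod,team,platform"
}
```

With these values, and following the format described above, forwarded logs should carry __extra_env=prod and __extra_team=platform, along with the __aws_cloudwatch_log_stream label.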