Key Prometheus concepts every Grafana user should know

Prometheus has become an essential technology in the world of monitoring and observability. I’ve been aware of its importance for some time, but as a performance engineer, my experience with Prometheus had been limited to using it to store some metrics and visualize them in Grafana. Being a Grafanista, I felt I should dig deeper into Prometheus, knowing it had much more to offer than just being a place to throw performance test results.

In seeking out great learning resources, nearly everyone—including Richard “RichiH” Hartmann, one of our many internal experts on the subject—recommended the book “Prometheus Up & Running” by Prometheus server maintainer Julien Pivotto and core developer Brian Brazil.

The book, which was first published in 2018 and re-released as a second edition last year, opened my eyes to the rich ecosystem that is Prometheus, revealing capabilities that go far beyond those of a simple database. These insights are crucial for anyone looking to deepen their understanding of Prometheus, particularly engineers like myself who initially had only a cursory knowledge. The lessons are especially important for Grafana users working with Prometheus data and Kubernetes, as they will benefit considerably from everything the book has to offer.

In this blog post, I’ll share some of my key takeaways from the book that are particularly relevant to engineers working with Grafana. I hope this will spark your interest in reading the book, as this post is no substitute for the depth and detail it provides. So, let’s explore how the invaluable lessons from “Prometheus Up & Running” can enhance the way you manage and visualize your metrics.

Prometheus is more than just a database

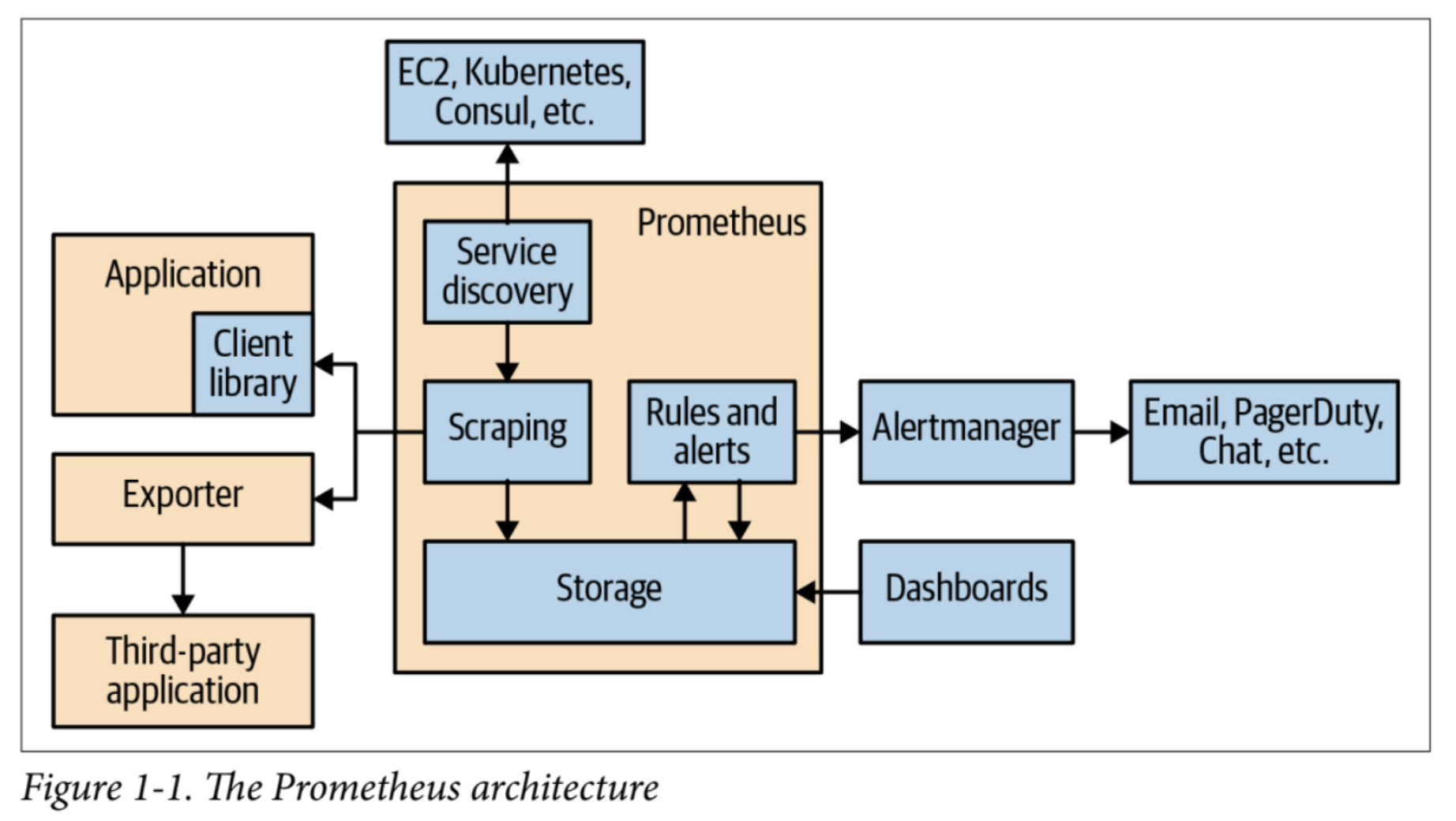

Before reading the book, I thought of Prometheus as just a time series database. But “Prometheus Up & Running” shattered that misconception, revealing that Prometheus is a robust monitoring framework. Yes, it excels at metric storage, but it also provides many more capabilities, including:

- Mechanisms to scrape and retrieve metrics from diverse sources

- Powerful querying capabilities

- The ability to create rules and generate alerts from them

- Dynamic service discovery

We need to think of Prometheus as a multifunctional framework rather than just a database. This shift in perspective allows us to fully utilize it and exploit its comprehensive range of components and features to enhance our observability practices.

Let’s explore some of these components and features packed into Prometheus.

Prometheus components you should know

Of course, the time series database component in Prometheus is powerful and best known for efficiently storing and retrieving time series data. Built to handle large numbers of series, the database is designed to perform well under heavy load and large volumes of data, making it ideal for real-time monitoring and observability.

But as you can see from the diagram above, there’s more to Prometheus. Next, let’s get a quick overview of Prometheus’s other elements.

Instrumentation and client libraries

A key aspect of Prometheus is instrumentation—the mechanisms to extract metrics. Prometheus provides several ways to do this. It has client libraries available for several programming languages, allowing you to seamlessly instrument your code. This simplifies the process of exposing metrics so Prometheus can easily scrape them.
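
To make "exposing metrics" a little more concrete, here is a minimal, standard-library-only Python sketch of the plain-text format that a scrape target serves. It is an illustration, not how the official client libraries are implemented: the helper `render_metric` and the metric name `http_requests_total` are made up for this example, and label-value escaping is deliberately left out. In real code you would use a client library rather than build these strings yourself.

```python
def render_metric(name, metric_type, help_text, samples):
    """Render one metric family as Prometheus-style exposition text.

    `samples` is a list of (labels_dict, value) pairs.
    Simplification: label values are not escaped, so this sketch is only
    safe for values without quotes, backslashes, or newlines.
    """
    lines = [
        f"# HELP {name} {help_text}",
        f"# TYPE {name} {metric_type}",
    ]
    for labels, value in samples:
        if labels:
            # Sort labels so the output is stable between runs.
            label_str = ",".join(f'{k}="{v}"' for k, v in sorted(labels.items()))
            lines.append(f"{name}{{{label_str}}} {value}")
        else:
            lines.append(f"{name} {value}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical counter with two labels.
    print(render_metric(
        "http_requests_total",
        "counter",
        "Total HTTP requests handled.",
        [({"method": "GET", "status": "200"}, 1027)],
    ))
```

The point of the sketch is that a target only has to publish a small, human-readable text page; everything else (collection, storage, querying) happens on the Prometheus side.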

Node exporter

Need OS-level metrics? Prometheus comes to the rescue with the node exporter, offering visibility into CPU, memory, and disk usage. It’s a go-to resource for hardware-level monitoring and ensures you have a clear view of system health.

Service discovery

In today’s dynamic cloud environments, whether you’re using Kubernetes, Consul, or plain DNS, service discovery helps automatically detect and monitor new instances as they come online. This makes Prometheus highly adaptable to ever-changing environments.

General exporters

We’ve seen easy instrumentation with client libraries and the node exporter, but for everything else, general exporters come to the rescue. When direct instrumentation isn’t possible, general exporters expose metrics from diverse sources like databases and message brokers. There is also the blackbox exporter, which allows you to monitor services from an external vantage point.

PromQL

PromQL is at the core of Prometheus’s querying prowess. It is a powerful querying language that allows you to retrieve, manipulate, and analyze your metrics using functions, operators, and aggregations. It provides a versatile solution for your most complex querying needs.

Recording rules

Recording rules were one of the greatest features I discovered while reading the book. These rules precompute expensive or frequently needed queries and store the results as new time series. Using recording rules can speed up querying, pre-process data, and return smaller result sets, especially during peak usage.

Alerts

Prometheus comes with powerful alerting capabilities. By using alerting rules, much like recording rules, you can set conditions to trigger notifications. These alerts can be routed via Alertmanager to whatever alerting system you may have, such as email, Slack, or PagerDuty.
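
One detail worth picturing is how an alert moves from "condition is true" to "notification sent". Alerting rules can require the condition to hold for a given duration before firing, and until then the alert is only pending. The Python sketch below models that idea under simplifying assumptions: the function `alert_states`, the threshold, and the sample values are all hypothetical, and time is counted in rule evaluations rather than real durations.

```python
def alert_states(values, threshold, for_evals):
    """Return the alert state after each rule evaluation.

    values     -- the evaluated expression result at each evaluation
    threshold  -- the alert condition is `value > threshold`
    for_evals  -- how many evaluation intervals the condition must keep
                  holding before the alert moves from pending to firing
    """
    states = []
    consecutive = 0  # evaluations in a row where the condition was true
    for value in values:
        if value > threshold:
            consecutive += 1
            # The first true evaluation starts the clock, so the alert
            # fires only after `for_evals` further true evaluations.
            states.append("firing" if consecutive > for_evals else "pending")
        else:
            consecutive = 0  # any false evaluation resets the alert
            states.append("inactive")
    return states


if __name__ == "__main__":
    print(alert_states([1, 5, 5, 5, 1, 5], threshold=3, for_evals=2))
```

A short spike that clears before the waiting period ends never reaches the firing state, which is what keeps brief blips from paging anyone.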

Grafana: the default recommendation!

Prometheus has its own visualization functionalities in a web frontend that you can use to both perform queries in PromQL and visualize their results. However, there’s an interesting observation in the book on this subject: Even though Prometheus supports visualizations, the capabilities are not outstanding.

This is where Grafana comes into the picture. In my admittedly biased opinion, one of the standout recommendations in “Prometheus Up & Running” is the use of Grafana as the premier visualization platform for Prometheus metrics. The book praises Grafana’s versatility in connecting to Prometheus, the ease of creating and executing PromQL queries, and its ability to transform complex, raw data into intuitive and beautiful dashboards.

Push vs. pull

A surprising discovery for me while reading the book was learning about the two data collection models in monitoring systems: push vs. pull. Throughout my career, I had always worked with databases where you submit data, queries, commands, and so on. When storing metrics in particular, all I ever did was send them to the database.

But Prometheus is different. Prometheus is designed to pull data from the targets it monitors.

Think about it: if you are monitoring many targets and each one pushes data whenever it likes, it would be easy to overload the database. However, if you let the database pull the information at a manageable pace, you get enhanced reliability and flexibility!

A key element is that the monitored entities must publish their metrics in a way that Prometheus can discover, access, and pull them. This is achieved through mechanisms like client libraries, node exporter, service discovery, and general exporters, as described earlier. By leveraging these components, Prometheus efficiently collects data without overwhelming the system, maintaining its robust performance.
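
Here is a rough Python sketch of the pull idea, assuming each target is represented by a plain function that returns its metrics as text. The names `parse_samples` and `scrape_all` are invented for this illustration, the parser ignores optional timestamps and other details of the real format, and a real server fetches over HTTP on a schedule. The one detail borrowed from Prometheus itself is the `up` series, which records whether a scrape succeeded.

```python
def parse_samples(text):
    """Parse 'series value' lines, skipping blank lines and # comments.

    Simplification: assumes no trailing timestamp after the value.
    """
    samples = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        # The value is the last space-separated token on the line.
        series, _, value = line.rpartition(" ")
        samples[series] = float(value)
    return samples


def scrape_all(targets):
    """Pull from every target once, at the collector's own pace.

    `targets` maps a target name to a zero-argument callable that returns
    exposition text. A failing target is recorded as up=0 and does not
    stop the other targets from being scraped.
    """
    results = {}
    for name, fetch in targets.items():
        try:
            samples = parse_samples(fetch())
            samples["up"] = 1.0
        except Exception:  # broad on purpose: any failure means "target down"
            samples = {"up": 0.0}
        results[name] = samples
    return results
```

Because the collector initiates every request, it decides how often each target is read, and a target that stops answering shows up immediately as `up` equal to 0 instead of silently going quiet.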

A final note here is that Prometheus can also work with the traditional push model. For this, you have to tweak a few configuration settings. Although it can work that way, Prometheus was not designed for this, and the book advises against it.

PromQL: more than just queries

While PromQL is recognized for its strong (and for some, complex) querying capabilities, there is much more to it than just queries. PromQL includes extensive functions and operators that simplify complex calculations, aggregations, data transformations, and much more.

Moreover, PromQL supports recording rules, which enable the precomputation of frequently used queries, thus optimizing performance. This also opens up solutions to several common problems related to storing and displaying metrics. More on that in the next section.

Finally, PromQL is crucial for the alerting system in Prometheus. You must define the rules that trigger alerts and notifications using PromQL queries. The possibilities are endless!

Cool solutions to common issues

As mentioned earlier, discovering PromQL’s support for recording rules opened up all sorts of solutions for problems I have faced in the past.

First, when the granularity of your data becomes less important over time, keeping every single metric can be expensive. With recording rules, you can create a PromQL query to run regularly and consolidate the data. For example, you might not care about the minute-by-minute temperature for last summer—you may just want to store daily maximum, minimum, average, or variance metrics. Recording rules can aggregate these metrics into a new time series, allowing you to discard the excessively granular data from older periods.
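
To show the kind of consolidation I mean, here is a small Python sketch that rolls minute-level samples up into one minimum, maximum, and average per day. It only illustrates the arithmetic: in Prometheus you would express this in a recording rule with PromQL functions such as `min_over_time`, `max_over_time`, and `avg_over_time`, and the function `daily_rollup` and the temperature readings below are hypothetical.

```python
from collections import defaultdict
from statistics import mean

SECONDS_PER_DAY = 86_400


def daily_rollup(samples):
    """Collapse (unix_timestamp, value) samples into per-day statistics.

    Days are UTC days counted from the Unix epoch. Returns a dict mapping
    the day number to its min, max, and average value.
    """
    buckets = defaultdict(list)
    for timestamp, value in samples:
        buckets[timestamp // SECONDS_PER_DAY].append(value)
    return {
        day: {"min": min(values), "max": max(values), "avg": mean(values)}
        for day, values in sorted(buckets.items())
    }


if __name__ == "__main__":
    # Three readings on day 0 and one reading on day 1.
    readings = [(0, 20.0), (60, 22.0), (120, 24.0), (86_400, 30.0)]
    for day, stats in daily_rollup(readings).items():
        print(day, stats)
```

However many raw samples a day contains, the rollup keeps only three numbers for it, which is why the consolidated series is so much cheaper to store and to draw.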

Another common issue I’ve faced while visualizing Prometheus data with Grafana is dealing with too many hyper-granular metrics. This can overload Grafana’s frontend, increase data loading times, or even make the user’s browser unresponsive due to the large volume of data points. By using recording rules, you can create a parallel time series with reduced granularity. This allows you to load, refresh, and display data more efficiently in Grafana, reserving the hyper-granular data for detailed zoom-ins.

Since I learned about recording rules, they have become a particularly useful recommendation for many Grafana users I’ve met with who are struggling with poor performance due to multitudes of metrics. They’re trying to find optimal ways to avoid having Grafana crash or become unresponsive from so much data being displayed, and recording rules have been a big help!

Read the book—seriously

If you weren’t already well-versed in Prometheus, I hope this post has given you some insights into its real powers and depth. It truly is a great observability platform that integrates beautifully with Grafana.

Knowing and understanding all its strengths will make you a super observability engineer! I highly recommend that you check out the book “Prometheus Up & Running.” While this post’s objective was to shed light on these amazing details, to really learn and master Prometheus, there’s nothing better than the notes, exercises, and examples the book provides. It’s a great addition to any Grafana enthusiast’s library. Check it out!