How to integrate Okta logs with Grafana Loki for enhanced SIEM capabilities

Identity providers (IdPs) such as Okta play a crucial role in enterprise environments by providing seamless authentication and authorization experiences for users accessing organizational resources. These interactions generate a massive volume of event logs, containing valuable information like user details, geographical locations, IP addresses, and more.

These logs are essential for security teams, especially those in security operations, who rely on them to detect and respond to incidents. However, integrating these logs into logging and SIEM platforms can be challenging.

In this blog post, we’ll show you how to effectively set up and run our Okta logs collector to send logs to Grafana Loki instances on-premises or in the cloud. By configuring your environment variables, running the Okta logs collector Docker container, and utilizing the latest versions of Loki and Grafana Alloy, you can monitor, analyze, and set alerts on your Okta logs for deeper insights and improved system security and operational capabilities.

Why we developed the Okta logs collector

Many IdPs offer REST APIs for fetching event logs, with Okta’s System Log API providing logs in JSON format, as shown below. However, integrating these logs into existing systems often involves a complex and time-consuming process, typically requiring custom code to access the logs via an HTTP client library in programming languages like Go.

To simplify and streamline this process, we developed the Okta logs collector. This tool automates the retrieval of logs from the Okta System Log API, enriches the data, and sends it to STDOUT, making it easy to scrape and forward the logs to observability platforms like Loki using agents such as Alloy or Promtail.

To further illustrate why we built the Okta logs collector, let's briefly look at the challenges developers face when fetching event logs and how we address them.

JSON log event example

Here’s an example of a JSON-formatted log event generated by Okta’s System Log API:

```json
{
  "version": "0",
  "severity": "INFO",
  "client": {
    "zone": "OFF_NETWORK",
    "device": "Unknown",
    "userAgent": {
      "os": "Unknown",
      "browser": "UNKNOWN",
      "rawUserAgent": "UNKNOWN-DOWNLOAD"
    },
    "ipAddress": "12.97.85.90"
  },
  "device": {
    "id": "guob5wtu7rBggkg9G1d7",
    "name": "MacBookPro16,1",
    "os_platform": "OSX",
    "os_version": "14.3.0",
    "managed": false,
    "registered": true,
    "device_integrator": null,
    "disk_encryption_type": "ALL_INTERNAL_VOLUMES",
    "screen_lock_type": "BIOMETRIC",
    "jailbreak": null,
    "secure_hardware_present": true
  },
  "actor": {
    "id": "00u1qw1mqitPHM8AJ0g7",
    "type": "User",
    "alternateId": "admin@example.com",
    "displayName": "John Doe"
  },
  "outcome": {
    "result": "SUCCESS"
  },
  "uuid": "f790999f-fe87-467a-9880-6982a583986c",
  "published": "2017-09-31T22:23:07.777Z",
  "eventType": "user.session.start",
  "displayMessage": "User login to Okta",
  "transaction": {
    "type": "WEB",
    "id": "V04Oy4ubUOc5UuG6s9DyNQAABtc"
  },
  "debugContext": {
    "debugData": {
      "requestUri": "/login/do-login"
    }
  },
  "legacyEventType": "core.user_auth.login_success",
  "authenticationContext": {
    "authenticationStep": 0,
    "externalSessionId": "1013FfF-DKQSvCI4RVXChzX-w"
  }
}
```

Challenges with log retrieval

To retrieve these logs, you need an API token. You can test the API using curl or similar HTTP clients:

```shell
curl -v -X GET \
  -H "Accept: application/json" \
  -H "Content-Type: application/json" \
  -H "Authorization: SSWS ${api_token}" \
  "https://${yourOktaDomain}/api/v1/logs"
```

Although Okta provides a Go SDK for easier integration, developers still need to write code to fetch and handle the logs, which can be cumbersome and error-prone. This is where the Okta logs collector shines: it automates the entire process.

The Okta logs collector solution

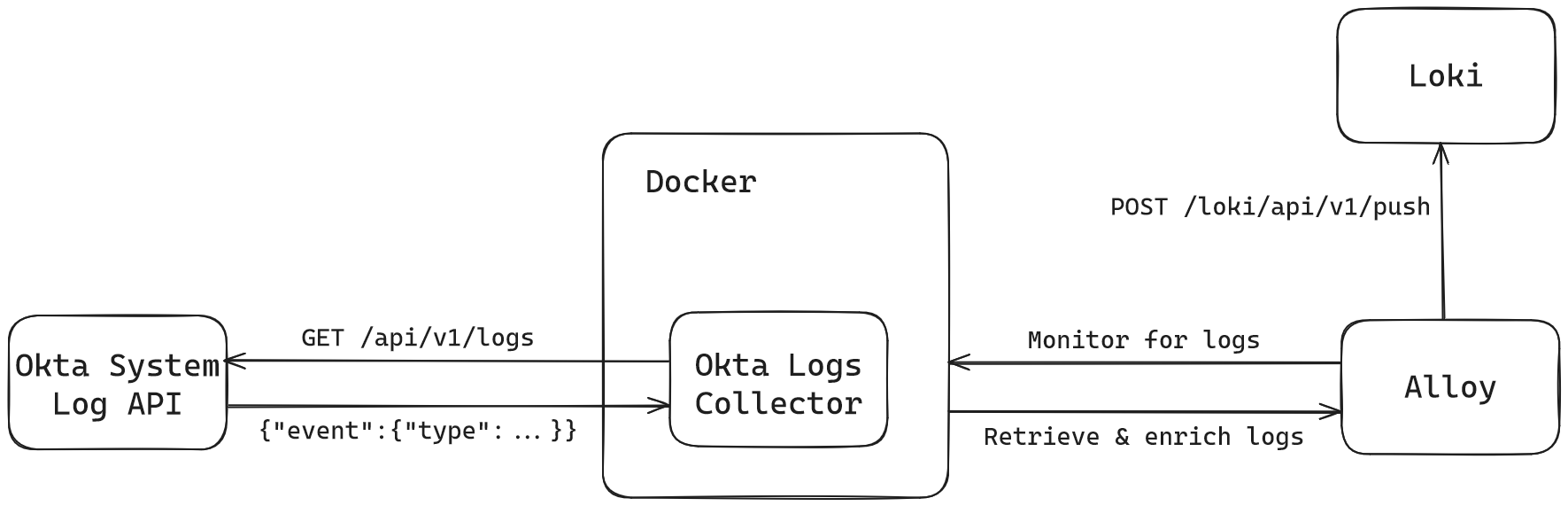

Our okta-logs-collector application periodically fetches logs from Okta's System Log API and sends them to STDOUT. The solution works in a containerized environment: Alloy discovers the containers through Docker Engine, receives their logs, and forwards them to Loki. Alternatively, you can pipe the application's output to a file on disk. Alloy can then monitor and read that file with the loki.source.file component and send the logs to Loki. The diagram above shows the containerized solution.

How to use the Okta logs collector with Alloy

To set up and run the application effectively, it's important to understand the example Alloy configuration file. Components are the building blocks of Alloy. Each one handles a specific task, such as retrieving logs or sending them to Loki. The example configuration file uses several components, which are explained below. The Alloy reference documentation describes each component in detail.

Component 1: discovery.docker

The discovery.docker component discovers Docker Engine containers and exposes them as targets. This assumes that you’re running Docker Engine locally and you have access to the docker.sock Unix domain socket, which means that you are a member of the docker group on Linux or you’re running as root.

```alloy
discovery.docker "containers" {
  host = "unix:///var/run/docker.sock"
}
```

Component 2: loki.source.docker

The loki.source.docker component reads log entries from Docker containers and forwards them to other loki.* components. Each component can read from a single Docker daemon. In the following example, host points to the same docker.sock used in Component 1, and targets takes the containers discovered by that component. Through the forward_to argument, the collected logs go to the next component in the chain, loki.process.grafanacloud.receiver. The component also applies a single label (job = "okta-logs-collector") to every log line it reads. It sets refresh_interval to 10 seconds instead of the default 60 seconds.

```alloy
loki.source.docker "default" {
  host       = "unix:///var/run/docker.sock"
  targets    = discovery.docker.containers.targets
  forward_to = [loki.process.grafanacloud.receiver]

  labels = {
    job = "okta-logs-collector",
  }

  refresh_interval = "10s"
}
```

Component 3: loki.process

The loki.process component receives log entries from other Loki components. It then processes them through various “stages” and forwards the processed logs to specified receivers. Each stage within a loki.process block can parse, transform, and filter log entries. The stages in this pipeline perform the following actions:

- Filter logs to only include those from the okta-logs-collector job.
- Extract fields (eventType, level, timestamp) from JSON-formatted log lines.
- Set the log timestamp using the extracted timestamp field, formatted in RFC3339.
- Assign extracted fields (eventType and level) as Loki labels for indexing and querying. The level label effectively replaces the Loki log level.

```alloy
loki.process "grafanacloud" {
  forward_to = [loki.write.grafanacloud.receiver]

  stage.match {
    // Match only logs from the okta-logs-collector job
    selector = "{job=\"okta-logs-collector\"}"

    // Extract important labels and the timestamp from the log line
    // and map them to Loki labels
    stage.json {
      expressions = {
        eventType = "event.eventType",
        level     = "event.severity",
        timestamp = "time",
      }
    }

    // Use the timestamp from the log line as the Loki timestamp
    stage.timestamp {
      source = "timestamp"
      format = "RFC3339"
    }

    // Use the extracted labels as the Loki labels for indexing.
    // These labels can be used as stream selectors in LogQL.
    stage.labels {
      values = {
        eventType = "",
        level     = "",
      }
    }
  }
}
```

Component 4: loki.write

The loki.write component receives log entries from other loki.* components and sends them to Loki using the logproto format. In this pipeline, its input comes from loki.process. The example uses a Grafana Cloud endpoint with basic authentication.

```alloy
loki.write "grafanacloud" {
  endpoint {
    url = "https://<subdomain>.grafana.net/loki/api/v1/push"

    basic_auth {
      username = "<Your Grafana.com User ID>"
      password = "<Your Grafana.com API Token>"
    }
  }
}
```

Environment variables can also be used to set the username and password (or even the URL):

```alloy
basic_auth {
  username = env("GRAFANA_CLOUD_USER_ID")
  password = env("GRAFANA_CLOUD_API_KEY")
}
```

This configuration sets up a pipeline that discovers Docker containers, collects and processes their logs, and sends the processed logs to Grafana Cloud Logs, which is powered by Loki.

Set up and run Okta logs collector

Having grasped the Alloy configuration, you’re ready to set up and run the Okta logs collector.

- Sign in to Okta. Log in to your Okta account as an administrator.

- Create API Token. Create a new API token for accessing the System Log API.

- Set Environment Variables. Configure the necessary environment variables in your shell:

```shell
export OKTA_URL="https://<account>.okta.com"
export OKTA_API_TOKEN="your-api-token"
```

- Run Okta logs collector. Use Docker to run the Okta logs collector container. You can also run it in Kubernetes.

```shell
# LOOKBACK_INTERVAL defaults to 1h if not set
docker run --name okta-logs-collector \
  -e OKTA_URL="${OKTA_URL}" \
  -e API_KEY="${OKTA_API_TOKEN}" \
  -e LOOKBACK_INTERVAL=24h \
  -e POLL_INTERVAL=10s \
  -e LOG_LEVEL=info \
  grafana/okta-logs-collector:latest poll
```

- Download configuration files. Obtain the example configuration files and place them in a convenient location. Note that the Alloy configuration sends all Docker logs to Loki, so consider filtering the logs by container name.

- Use Loki on Grafana Cloud or run Loki on-premises. Locate your Grafana Cloud stack and find your Loki instance endpoint. (If you don't already use Grafana Cloud, you can sign up for a forever-free account today.) Alternatively, an example configuration file is provided for Loki if you want to run Loki on-premises.

- Configure Alloy. If you're using Grafana Cloud Logs, follow these steps:
  - Use the provided example configuration and replace these placeholders with actual values: <subdomain>, <Your Grafana.com User ID>, and <Your Grafana.com API Token>.
  - Generate an API token to access and push logs to your Loki instance.

- Run Alloy. Download, unpack, and run the latest version of Alloy:

```shell
./alloy-linux-amd64 run /absolute/path/to/config.alloy
```

If you're using Grafana Cloud Logs, use cloud-config.alloy instead of config.alloy. The previous section explains how the example configuration files are structured.

How to observe logs in Loki and Grafana

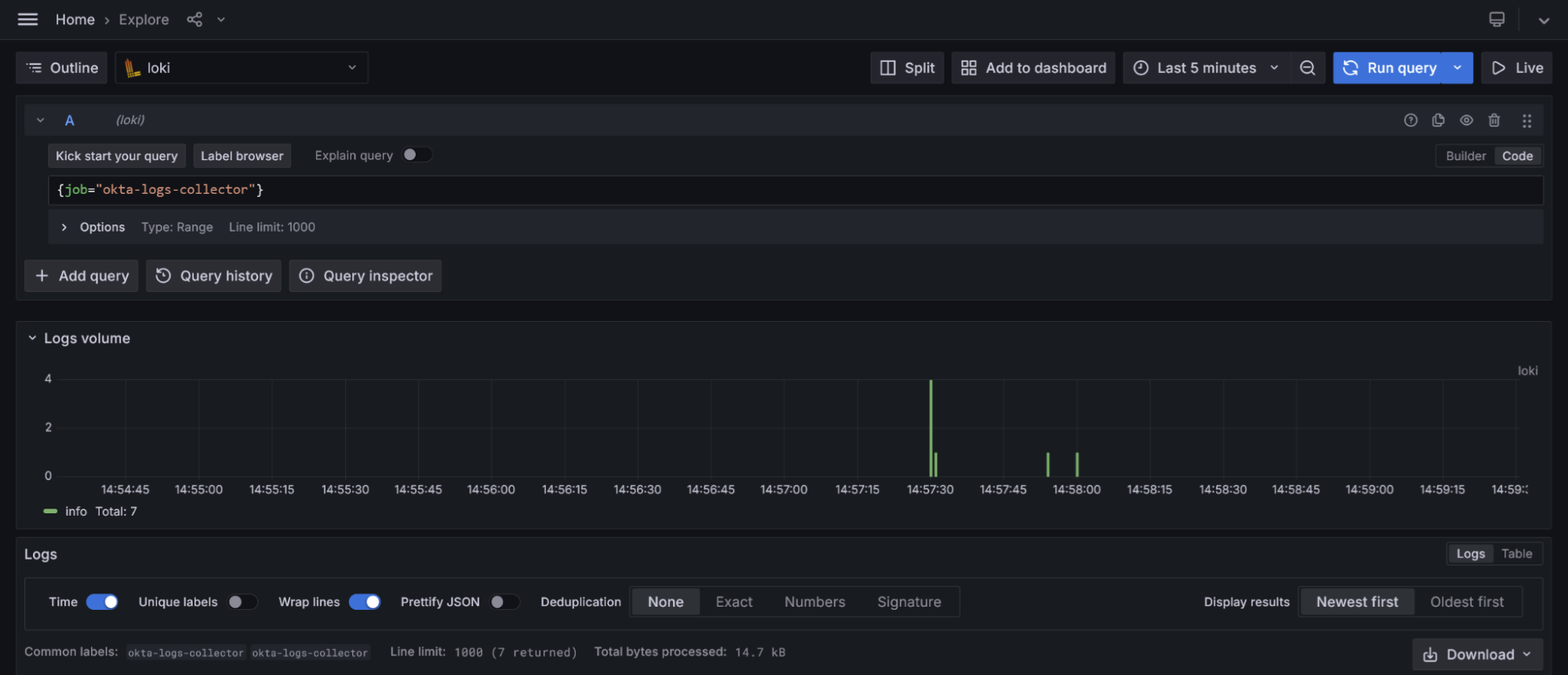

After a few moments, you should see logs appearing in Loki. Use LogCLI or the Loki data source in Grafana to view and analyze the logs. If you're using Grafana Cloud, go to the Grafana Cloud portal and launch Grafana. From the main menu, navigate to Connections > Data sources, where you'll see that Loki is already added as a data source. Then open the Explore page and start querying your Okta logs with the {job="okta-logs-collector"} stream selector. Because eventType is a label, it's indexed, so you can filter on specific event types. Okta publishes a complete list of event types (more than 930 at the time of writing) in its event types reference.

How to use Sigma rules for detecting critical log lines

Ingesting logs into Loki is only half the equation; the other half is detecting critical log lines. These log lines provide crucial evidence, such as identifying whether corporate accounts have been compromised. While you can always write your own LogQL queries to detect specific issues, there are more efficient methods. One such method is leveraging the Sigma ecosystem, which offers an abundant set of declarative rules for discovering these pieces of evidence.

Fortunately, our security operations team has developed a backend for Sigma that converts Sigma rules into LogQL queries. You can use existing Sigma rules for Okta to detect critical log lines and set up alerts on these events. Follow the instructions provided in this blog post to install Sigma CLI and its plugins and to convert Sigma rules into LogQL queries.

Also, you can continuously validate your Sigma rules against the Sigma JSON Schema by utilizing the sigma-rules-validator GitHub Action. Follow the instructions in this blog post to learn more about the action and how to use it.

What’s next

We hope this project can help you monitor your Okta logs more easily, but we’re not done yet. Stay tuned for version 2 of the Okta logs collector, which we’re developing as an Alloy component. This new version will offer advanced capabilities and seamless integration with Alloy components to better meet your logging needs.
