How we upgraded to MySQL 8 in Grafana Cloud

Starting around June this year, we upgraded our Grafana databases in Grafana Cloud from MySQL 5.7 to MySQL 8, due to MySQL 5.7 reaching end-of-life in October. This project involved tens of thousands of customer databases across dozens of MySQL database servers, multiple cloud providers, and many Kubernetes clusters.

Ultimately, the upgrade resulted in a change to how we provision database server storage capacity, better automation for configuring new databases, and new processes for performing database upgrades and batch migrations. The database server disk bottlenecks we ran into prompted us to take a closer look at our disk performance. As a result, we changed to a storage type with more performance, which cut query execution times in half for our busier database servers.

In this post, we’ll take a closer look at the upgrade approach we took, the challenges we faced, and the Grafana dashboards we used to track our progress.

Note: I always specify in this post whether I’m talking about databases or database servers, since the term “database” is often used to refer to both.

Grafana Cloud’s database architecture 🥞

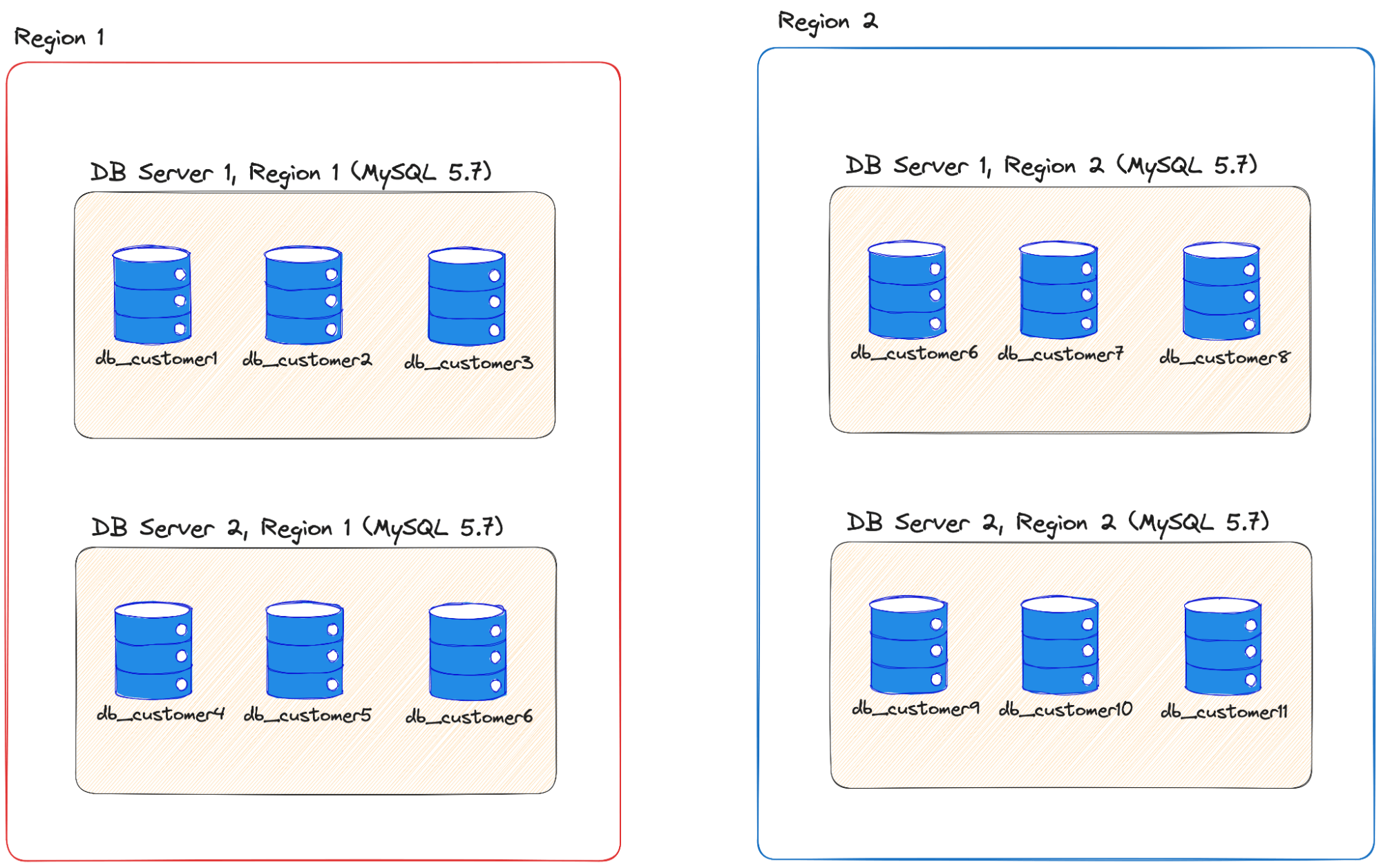

In Grafana Cloud, our database servers run on multiple cloud providers, are distributed globally, and host single-tenant databases. This means that every customer has their own database within the database server they are assigned to. As some Grafana operators may have noticed, Grafana databases are generally quite small. The vast majority of databases in Grafana Cloud are less than 100 MB. A single database server in Grafana Cloud will have between a few hundred to upwards of 1,000 databases.

Upgrade process requirements

When deciding how to perform the upgrades to MySQL 8, the things most important to our engineering team were:

- Keeping customer downtime to a minimum — ideally, less than 1 minute.

- Maintaining a small blast radius. If something was to go wrong, we wanted it to affect as few customers as possible.

- Being able to quickly switch a customer back to MySQL 5.7 if there were incompatibility issues with MySQL 8. Grafana instances are highly configurable, which increased the likelihood we’d encounter some instances with incompatibilities.

- Selectively migrating databases. Some customers were on older versions of Grafana that may not be compatible with MySQL8 and need extra time to upgrade.

Picking a MySQL upgrade process

Database upgrades are nothing new, and existing best practices are well documented. There are generally three ways to approach it:

- Upgrade in-place. This is the easiest approach, by far. However, it comes with too much downtime (usually about 10 minutes), affects every customer on the database server if something goes wrong, and is time-consuming to revert. To make matters worse, if you have a database server with a high total table count (>10K), it can take a long time. As a quick test, we cloned one of our bigger database servers (~70K tables total) and tried an in-place upgrade to see how long it would take. It ran for 24 hours and then timed out 😬.

- Replication. This is a common migration approach, as it results in almost zero downtime. Unfortunately, with this approach, you need to replicate the entire database server, then cutover to the new one all at once. Some cloud providers offer the ability to replicate individual databases through their data migration services, but not all. This means that while we could achieve low downtimes, we would have a large blast radius if something went wrong, since we would have to migrate the whole database server at once.

- Use

mysqldump. The last approach is to use themysqldumputility to take a dump of the current database, then import that dump into the new database. The downside of this is that it can be very slow for large databases, and the source database can’t be written to during the process. Thankfully, Grafana databases are small, so these issues weren’t much of a concern. Usingmysqldump, we could migrate individual databases between database servers with little downtime (less than 1 minute) and maintain a very small blast radius if something was to go wrong. It would also let us migrate individual databases, allowing us to be selective about which databases we migrate and when.

The consensus was to use the third approach 🚀since it satisfied all of our requirements.

Internal tooling to support the MySQL upgrade

We have an internal API endpoint that allows us to migrate a single Grafana database to another target database. During the migration process, the Grafana instance was put into a “migrating” state and was inaccessible. This endpoint uses mysqldump to take a dump of the database, store it in a bucket, then import that dump into the target database. If anything goes wrong during the migration, it exits and keeps the Grafana instance pointing at the source database. If the instance is successfully migrated, but an incompatibility is noticed afterwards, we use the same endpoint to migrate them back to the original database.

Creating a rollout plan

The critical piece of any database upgrade is creating a plan to roll out the upgrade in stages of increasing risk. This way, once we reach production, we’ll have fixed any incompatibilities or bugs throughout the process.

In Grafana Cloud, we have several environments: development, staging, canary, and production. We also have different tiers of customers: Free, Pro, and Advanced. We needed to roll the upgrades out to those environments and customer tiers in that order.

Our plan included following stages:

- Upgrade our development environment.

- Upgrade our staging environment.

- Upgrade our canary environment, while prioritizing our Free tier.

- Upgrade the production environment one cluster at a time, starting with smaller clusters. All clusters would be upgraded outside of typical business hours for their geographical region.

Using a Grafana dashboard to monitor progress 📈

We created a Grafana dashboard to monitor the rollout of the migrations for each cluster. This dashboard tracked how many instances were running on the source and target database servers, instances with a high database error rate, and database server resource utilization. It was important to keep an eye on CPU usage, since MySQL 8 uses a bit more CPU than MySQL 5.7 (about 5-10% more, from what we saw).

Performing the database upgrades

Like most database upgrades, performing them was the easy part. The process was:

- Set up the target database server and migrate a few test instances over to it to ensure it’s working as expected.

- Start migrating the Grafana databases in batches, while monitoring the migrations closely.

- When we have evidence the migrations are rolling out well, prevent the creation of new Grafana instances on the source database server, and instead create them on the target database server.

- For some of the larger clusters, perform the migration batches in parallel, since there were so many databases to migrate.

Challenges during the upgrades

We encountered various issues at different stages of the rollout. Thankfully, due to our decision to roll out incrementally, the blast radius from these was small. The issues encountered were:

- A deprecated MySQL system variable. Grafana can be configured to use different levels of transaction isolation. The system variable for this,

tx_isolation, was renamed in MySQL 8 totransaction_isolation. Any instances using this configuration failed to make queries. This was caught early in our development stage rollout and was a simple bug fix. - Disk performance. We discovered a disk I/O bottleneck when upgrading one cluster. For most cloud provider database servers, disk performance (IOPS) scales with storage capacity. We had a few database servers with low (but plenty of free) storage, which is understandable, since Grafana databases don’t take up much space. During one of the upgrades, we noticed degraded performance due to 100% disk utilization on the database server a few minutes after the upgrade process started. Because of our flexible upgrade process, we were able to put the upgrades on pause and provision all our database servers with more storage capacity before continuing.

- Unusually large databases. This issue was caught proactively, but not until later on. Prior to performing an upgrade, we checked to see if there were any large databases. Restoring dumps of large databases can take a while, so it was important to confirm database sizes before starting the upgrade. Out of tens of thousands of Grafana Cloud customers, there were about five databases that were unusually large for our upgrade process; they ranged from about 3-10GB in size. Our process was still able to handle them, but the downtimes were longer — about 5-10 minutes each. We ended up scheduling these at specific times that worked best for our customers. One improvement we could have explored was downloading the compressed database archives, and uncompressing them ourselves, effectively trading CPU for I/O.

Lessons learned

The upgrade to MySQL 8 was successful, but it may not have been if we had chosen one of the first two approaches discussed. This project highlighted the importance of being able to roll out software changes incrementally with a small blast radius and being able to roll back quickly.

Grafana Cloud is the easiest way to get started with metrics, logs, traces, and dashboards. We have a generous forever-free tier and plans for every use case. Sign up for free today!