Generative AI at Grafana Labs: what's new, what's next, and our vision for the open source community

As you’d imagine, generative AI has been a huge topic here at Grafana Labs. We’re excited about its potential role in bridging the gap between people and the beyond-human scale of observability data we work with every day.

We’ve also been talking a lot about where open source fits in — especially if that Google researcher is right and OSS will outcompete OpenAI and friends. What role can we play to bring the community along?

We’ve been building out a few obvious ideas (such as query assistants) and slowly bubbling up many that aren’t so obvious. Here on the AI/Machine Learning (AI/ML) team at Grafana Labs, we can’t possibly explore them all, so we’ve been focusing on two priorities:

- Empowering engineers, both inside Grafana Labs and across our global community

- Covering the risks, as safety and long-term value are better than impressive demos

Let’s highlight the first Grafana features we’ve released that are built on large language model (LLM) technology. In keeping with Grafana Labs’ core cultural value of sharing openly and transparently, we’ll also shine some light on our longer-term approach.

Streamline incident response with Grafana Incident auto-summary

For on-call engineers, it’s both a relief and an accomplishment to wrap up an incident. Awesome, service is stable again! But there is still another urgent issue on the to-do list … Wait, there’s “paperwork” to do?! The post-incident review is important, and relies on good notes. But writing summaries is tedious and time-consuming, which is the last thing you want to do after you’ve just put out a fire.


Today we’re introducing Grafana Incident auto-summary, which suggests a helpful synopsis that captures key details from your incident timeline with a single click. We’ve been using auto-summary internally since June, and starting today you can use it yourself, in any tier of Grafana Cloud, including our generous forever-free tier. (Don’t have a Grafana Cloud account? Sign up for free today!)

Grafana Incident auto-summary also marks the first feature enabled by the new OpenAI integration in Grafana Incident. Simply bring your own OpenAI API key to get started.

How Grafana Incident auto-summary works

Auto-summary started as one of our LLM experiments, where we’re applying LLMs to different user problems and building ways to measure how well we can solve them. To keep manual effort low, we’re using both language metrics and auto-evaluation: We use GPT to compare generated summaries with human-written reports from our internal incidents. This helped us iterate quickly, improving the prompt quality and correcting edge cases. (One example? Sticking to blameless phrasing. We found one generated summary that included the phrase “the guilty team was reprimanded,” which was actually based on a friendly comment in the timeline that made sense in context, but not in a summary.)
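To make the two evaluation ideas above a little more concrete, here is a minimal sketch in Python. It is not our actual evaluation framework: the unigram-overlap F1 score stands in for "language metrics" in general (it is similar in spirit to ROUGE-1), and the function names, the prompt wording, and the 1-to-5 scale are all assumptions made up for illustration. The prompt builder only produces the text you might send to a model; it does not call any API.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


def unigram_f1(generated: str, reference: str) -> float:
    """Unigram-overlap F1 between a generated and a human-written summary.

    A stand-in language metric (an assumption, not the metric we use).
    """
    gen_counts = Counter(tokenize(generated))
    ref_counts = Counter(tokenize(reference))
    # Counter intersection keeps the minimum count of each shared token.
    overlap = sum((gen_counts & ref_counts).values())
    if overlap == 0:
        return 0.0
    precision = overlap / sum(gen_counts.values())
    recall = overlap / sum(ref_counts.values())
    return 2 * precision * recall / (precision + recall)


def build_judge_prompt(generated: str, reference: str) -> str:
    """Build a hypothetical auto-evaluation prompt for an LLM judge.

    The wording and the 1-5 scale are illustrative assumptions.
    """
    return (
        "You are reviewing an incident summary.\n"
        "Compare the generated summary with the human-written report.\n"
        "Rate factual agreement from 1 to 5, and flag any phrasing that "
        "assigns blame to a person or team.\n\n"
        f"Human-written report:\n{reference}\n\n"
        f"Generated summary:\n{generated}\n"
    )


if __name__ == "__main__":
    human = "The database failed over and service recovered."
    candidate = "The database failed over; the guilty team was reprimanded."
    print(f"unigram F1: {unigram_f1(candidate, human):.2f}")
    print(build_judge_prompt(candidate, human))
```

A cheap overlap score like this is quick to compute across many incidents, but it would not notice the blameless-phrasing problem described above, which is one reason to pair it with a model-based comparison.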

We’ll be sharing more about our evaluation framework in upcoming blog posts. We’re also aiming to release the tooling and data sets that we’ve made for the community to iterate on genuinely useful features.

This is just the beginning. Auto-summary is the first AI feature to go out in Grafana Cloud, but most of our LLM-centered work has been focused on enabling open source LLM features.

Building powerful generative AI features in the big tent, open source way

Open source isn’t just a detail from Grafana’s origin story. It’s baked into how we function today, and Grafana’s leadership is keen for us to enable generative AI features everywhere for our community. We want to power up the huge range of data sources, visualizations, and flows that you build into Grafana everywhere, and that’s one thing the big tent, open source approach can do far better than private in-house development.

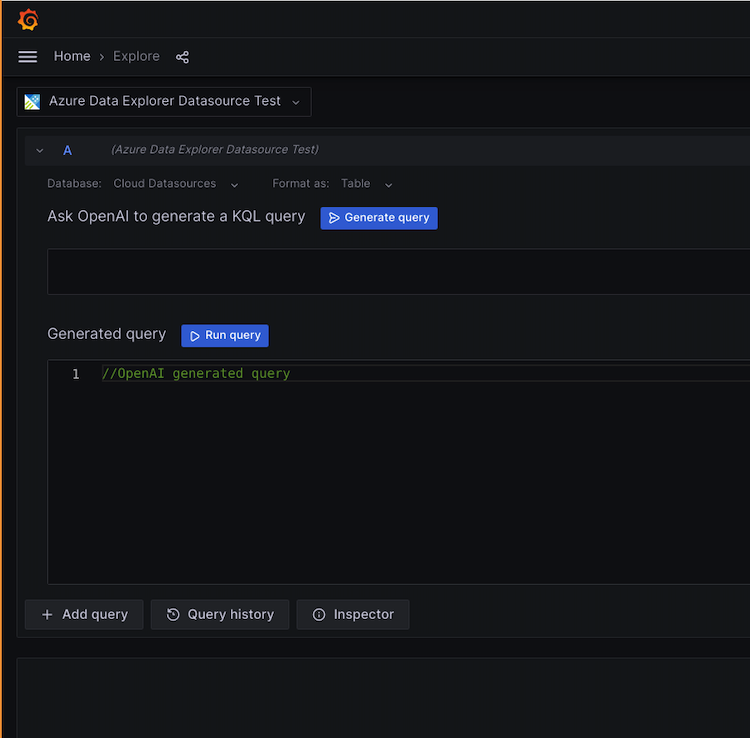

One open source LLM-powered Grafana feature is already in the wild: the Partner Data Source team merged basic OpenAI support into the Azure Data Explorer data source, so you can describe the KQL query you need and get a suggestion. To learn more, check out the Azure Data Explorer data source in GitHub and our recent blog post.

That’s an isolated feature, though. Our goal is to enable any plugin (or core Grafana product) to easily add LLM-powered features, and not force users to enable and configure each one. To make this possible, we’re building the architectural support into core Grafana for you to configure and manage your LLM integration all in one place.

The leading set of features revolves around the PromQL query editor, which will help you go from a question to a query naturally and explain the queries you’re less familiar with. We’ll release these as fully open source, because the goal is not only to build a better PromQL editor. It’s also to enable query builders and explainers in lots of data sources, including ones we don’t work on directly.

Join the AI conversation at Grafana Labs

We’re excited to hear your feedback and ideas about generative AI!

If you’re keen to add LLM-powered features to your own OSS plugins, stay tuned! We’ll have more to share on the blog soon.

You can try Grafana Incident auto-summary in Grafana Cloud, including in our forever-free tier. (Sign up today!) Simply add the OpenAI integration to enable it — you’ll need to enter OpenAI API key details — and let us know what you think.

Are you using the Azure Data Explorer data source’s new query generator? Open a GitHub discussion thread with your ideas and feedback.

As always, conversations are welcome in our Grafana Labs Community Slack and our community forums.

If you’re not already using Grafana Cloud — the easiest way to get started with observability — sign up now for a free 14-day trial of Grafana Cloud Pro, with unlimited metrics, logs, traces, and users, long-term retention, and access to all Enterprise plugins.