How we reduced flaky tests using Grafana, Prometheus, Grafana Loki, and Drone CI

Flaky tests are a problem found in almost every codebase. By definition, a flaky test is a test that both succeeds and fails without any changes to the code. For example, a flaky test may pass when someone runs it locally but then fail on continuous integration (CI). Or a flaky test may pass on CI, and then fail after someone pushes a commit that doesn’t touch anything related to it. The worst-case scenario is when building the same commit on the same infrastructure multiple times makes the test pass and fail sporadically.

Why are flaky tests bad?

Flaky tests reduce the robustness and efficiency of a system. In fact, having a flaky test in a codebase is worse than having no test at all, because it can fail randomly and the nature of the failure may vary from run to run. This introduces confusion, especially for developers who are new to the codebase and can lose valuable time trying to understand how their change broke an unrelated test.

Tests that fail randomly and add fuzziness to the expected results cannot really be considered tests. A test assesses the functionality of a program, which means we expect the same result every time as long as nothing in the code under test has changed. If a test’s behavior changes between two consecutive runs of the same code, it shouldn’t be regarded as a test, and it should be fixed immediately.

Another example of why it is better to disable flaky tests than to keep restarting them until they pass is the release process. Imagine that a flaky test fails on CI during a release. Usually there’s no time to take a step back, triage the failure, and then re-trigger the CI build. Even if the release is not time sensitive, the failure puts more pressure on developers to fix the issue immediately. What usually happens in these cases is that the build is restarted until it passes. Of course, that’s not optimal either: it requires either manual intervention or automation around restarting certain parts of the CI build, which is an anti-pattern.

Using Drone CI

At Grafana Labs, we use Drone CI as our continuous integration tool. Drone CI is a highly customizable CI platform that lets you take ownership of your pipelines relatively easily. Since Drone CI is a container-native continuous integration system, your CI pipelines are built and run in containers, which also makes it quick and easy to run them locally.

Each Drone CI pipeline exposes a set of environment variables that are specific to each build and pipeline. A brief overview can be found in the Drone documentation. These environment variables are useful for making decisions during a build, customizing your pipelines by checking the event that triggered a build (pull request, tag, etc.), and logging meaningful messages in the Drone logs. There are other useful things we can do with the remaining environment variables, too!
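As an illustration of the kind of decisions these variables enable, here is a minimal Python sketch of a helper that a pipeline step could run. It only reads variables that Drone documents (DRONE_BUILD_EVENT, DRONE_BUILD_NUMBER, DRONE_BRANCH, DRONE_TARGET_BRANCH, and DRONE_TAG); the function names and the "publish only for tag builds" rule are hypothetical and not taken from our pipelines.

```python
import os
from typing import Mapping


def describe_build(env: Mapping[str, str]) -> str:
    """Build a short log message from Drone's build variables.

    The variables are documented by Drone; the message format is hypothetical.
    """
    event = env.get("DRONE_BUILD_EVENT", "unknown")
    number = env.get("DRONE_BUILD_NUMBER", "?")
    if event == "pull_request":
        return f"build #{number}: pull request into {env.get('DRONE_TARGET_BRANCH', '?')}"
    if event == "tag":
        return f"build #{number}: release tag {env.get('DRONE_TAG', '?')}"
    if event == "push":
        return f"build #{number}: push to {env.get('DRONE_BRANCH', '?')}"
    return f"build #{number}: event {event}"


def should_publish(env: Mapping[str, str]) -> bool:
    """HYPOTHETICAL rule: only tag builds publish artifacts."""
    return env.get("DRONE_BUILD_EVENT") == "tag"


if __name__ == "__main__":
    # Inside a Drone step, os.environ holds the DRONE_* variables.
    print(describe_build(os.environ))
    print("publish:", should_publish(os.environ))
```

Passing the environment in as a plain mapping, rather than reading os.environ inside the functions, keeps the logic easy to test outside of Drone.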

Using a Prometheus exporter for Drone environment variables

Flaky tests became a problem we needed to address because they were failing not only on pull requests and on the main branch, but also in our release pipeline, which could delay the releases of our products. The release pipeline runs on the scheduled release day, so that Grafana can be built, packaged, and published into the wild. If a flaky test fails in the middle of the release, we have to take a step back, evaluate the pipeline failure, triage the issue to the appropriate team, and start again, all on release day. What we needed was a mechanism to monitor our builds, check the health of our release branches, and create meaningful alerts on CI-related failures.

By checking environment variables such as DRONE_BUILD_STATUS, DRONE_STAGE_STATUS, and DRONE_STEP_NAME, we can get valuable information about the status of a build. This information needs to be exported and stored so we can query it and create meaningful dashboards and alerts for our CI builds.

We configured the Drone server to send build information as webhooks to a service that listens for them. When the listener receives a webhook, it exports the information as metrics through a custom Prometheus exporter we created, and those metrics are stored in Grafana Mimir.

Using PromQL to query Prometheus

We created a metric called drone_webhook_build_count to export information about our CI builds. It is updated every time a Drone CI build completes, regardless of whether the build succeeded.

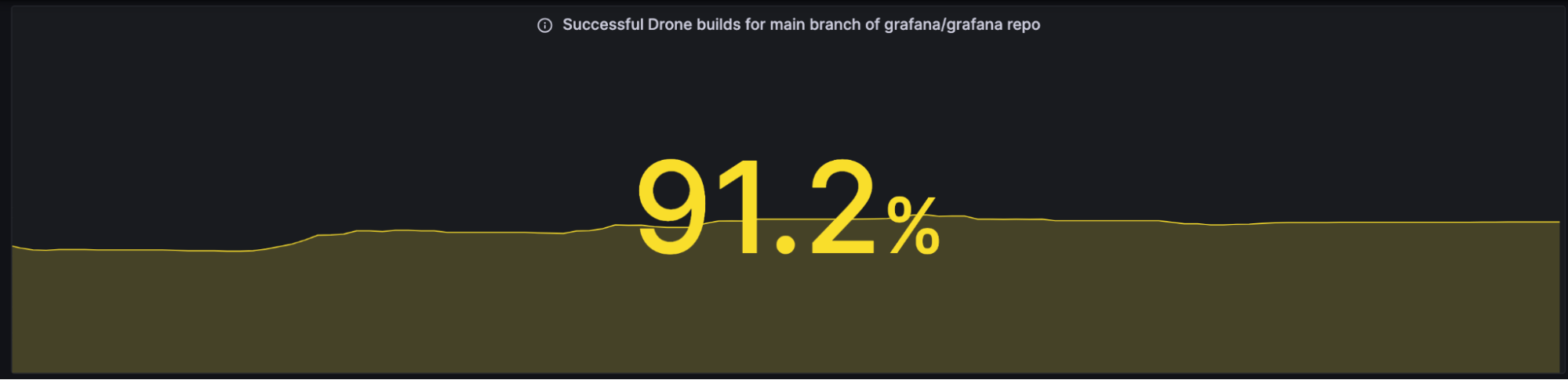

In the following example, we calculate the share of successful push builds on the main branch with this query:

sum(increase(drone_webhook_build_count{repo="grafana/grafana",target_branch="main",event="push",status="success"}[$__range]))

/

sum(increase(drone_webhook_build_count{repo="grafana/grafana",target_branch="main",event="push"}[$__range]))

The query divides the number of successful builds by the total number of builds, whatever their status (successful, failed, skipped, and so on), over the dashboard’s time range ($__range). The result is a ratio between 0 and 1, which the panel can display as a percentage.
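To show what each half of the query computes, here is a simplified Python sketch of increase() and the resulting ratio. It is an approximation, not Prometheus’s implementation: it handles counter resets (a drop in value means the counter restarted from zero), but it does not extrapolate to the edges of the range the way Prometheus does, and it assumes a single counter series per status.

```python
from typing import Dict, List, Sequence


def simple_increase(samples: Sequence[float]) -> float:
    """Increase of a counter over a window, WITHOUT Prometheus's extrapolation.

    A drop in value is treated as a counter reset (e.g. the exporter
    restarted), so the post-reset value counts as new increments.
    """
    total = 0.0
    for prev, cur in zip(samples, samples[1:]):
        total += cur - prev if cur >= prev else cur
    return total


def success_ratio(series_by_status: Dict[str, List[float]]) -> float:
    """sum(increase(status="success")) / sum(increase(any status)).

    ASSUMPTION: one counter series per status value.
    """
    increases = {status: simple_increase(v) for status, v in series_by_status.items()}
    total = sum(increases.values())
    if total == 0:
        return float("nan")  # 0/0 is NaN in PromQL as well
    return increases.get("success", 0.0) / total
```

For example, if the success counter went from 10 to 19 over the range (an increase of 9) and the failure and skipped counters increased by 1 in total, the ratio is 9 / 10 = 0.9, shown as 90%.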

Another example is drone_webhook_stage_count, which counts stages rather than whole builds. In Drone CI, a build is a set of stages, and a stage is a set of steps.

sum(increase(drone_webhook_stage_count{stage_status="success", repo="grafana/grafana"}[$__range])) by(stage)

/

sum(increase(drone_webhook_stage_count{stage_status=~"failure|success", repo="grafana/grafana"}[$__range])) by(stage)

Similar to the build count query, this query divides the number of successful stage runs by the number of stage runs that either succeeded or failed; stages with any other status are left out of the denominator. Because of by(stage), the ratio is calculated separately for each stage. A build may contain several stages, some successful and some not.

This lets us see the success ratio of each type of stage (pipeline) and act accordingly.
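The two details that differ from the build query can be sketched in Python: the by(stage) grouping, and the denominator restricted to stage_status=~"failure|success". The input is assumed to be precomputed (stage, stage_status, increase) rows, such as per-series increase() values. The function name is hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def stage_success_ratios(rows: Iterable[Tuple[str, str, float]]) -> Dict[str, float]:
    """Per-stage success ratio from (stage, stage_status, increase) rows.

    Mirrors `... by(stage)` with the denominator limited to
    stage_status=~"failure|success"; other statuses are ignored.
    """
    success: Dict[str, float] = defaultdict(float)
    decided: Dict[str, float] = defaultdict(float)
    for stage, status, inc in rows:
        if status not in ("success", "failure"):
            continue  # e.g. skipped stages count in neither numerator nor denominator
        decided[stage] += inc
        if status == "success":
            success[stage] += inc
    return {stage: success[stage] / total for stage, total in decided.items() if total > 0}
```

One difference from PromQL: if a stage has no success series at all, vector matching drops it from the query result, while this sketch reports 0.0 for it.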

Using Grafana Loki to query Drone logs

In addition to using Prometheus and PromQL to export and query valuable metrics, we also use Grafana Loki and LogQL. Instead of looking at metrics, we search through the logs produced by Drone CI. We store all the logs of each Drone build in Google Cloud Storage (GCS) buckets and query them afterward.

Since every Drone CI step runs in a Docker container, the logs include fields that are easy to query and that return information about the builds; these fields can be found in GitHub.

Loki can also detect fields that you can use in queries (see detected fields in our Grafana documentation for reference).
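To make field extraction and filtering concrete, here is a rough Python emulation of the build-times LogQL query shown later in this section. It assumes each log line is a JSON object with msg, repo, status, pipeline, and duration fields (the names come from that query) and that duration is in seconds, which is what the query’s / 60 suggests. It is a simplification: LogQL averages within [1m] windows first, whereas this sketch averages over all input lines at once. The function name is hypothetical.

```python
import json
from collections import defaultdict
from typing import Dict, Iterable


def avg_pipeline_minutes(lines: Iterable[str], repo: str = "grafana/grafana") -> Dict[str, float]:
    """Average duration in minutes of successful pipelines, per pipeline name.

    Roughly `| json | msg="Pipeline" | repo=... | status="success"
    | unwrap duration`, averaged by pipeline and divided by 60.
    """
    total: Dict[str, float] = defaultdict(float)
    count: Dict[str, int] = defaultdict(int)
    for line in lines:
        try:
            fields = json.loads(line)  # like `| json`: extract fields from the line
        except json.JSONDecodeError:
            continue  # LogQL would flag a parse error; the sketch just skips the line
        if not isinstance(fields, dict) or "duration" not in fields:
            continue
        if fields.get("msg") != "Pipeline" or fields.get("repo") != repo:
            continue
        if fields.get("status") != "success":
            continue
        pipeline = fields.get("pipeline", "")
        total[pipeline] += float(fields["duration"])  # like `| unwrap duration` (ASSUMED seconds)
        count[pipeline] += 1
    return {p: total[p] / count[p] / 60 for p in total}
```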

One of our panels shows the build times per stage, so we can see how long each stage takes to run. This shows us where there is room to speed things up. And if we spot an abnormal increase, we know that code introduced by a recently merged PR has made our stages run slower.

Here is the query that produces this panel:

avg by(pipeline) (avg_over_time({app="drone-webhook-listener"} | json | msg="Pipeline" | repo=~ "grafana/grafana" | status="success" | pipeline=~"$main-" | unwrap duration [1m])) / 60

Identifying and fixing flaky tests

After setting up Prometheus and Loki to visualize metrics and logs, the next step was to identify the flaky tests. Our approach was to trigger a build of the same commit every 15 minutes with a cron job, for a whole day, which gave us 96 builds (4 per hour × 24 hours). From those builds we gathered the build success ratio. Alongside the ratio, we listed the steps that failed, using either Loki or Prometheus, and then used Loki to query the names of the failed tests from the Drone logs, using the detected fields.
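The classification rule behind this approach is simple: across repeated builds of the same commit, a test that passed at least once and failed at least once is flaky, while a test that always fails is simply broken. Here is a minimal Python sketch of that rule and of the build success ratio. The input format, (build_number, test_name, passed) tuples, and the function names are assumptions for illustration; in our case, the failed test names came from Loki queries over the Drone logs.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Set, Tuple

# One build every 15 minutes for 24 hours.
BUILDS_PER_DAY = 24 * 60 // 15  # 96

Result = Tuple[int, str, bool]  # (build_number, test_name, passed): ASSUMED format


def find_flaky(results: Iterable[Result]) -> List[str]:
    """Tests that both passed and failed across builds of the same commit."""
    outcomes: Dict[str, Set[bool]] = defaultdict(set)
    for _build, test, passed in results:
        outcomes[test].add(passed)
    # A test that only ever failed is broken, not flaky.
    return sorted(test for test, seen in outcomes.items() if seen == {True, False})


def build_success_ratio(results: Iterable[Result]) -> float:
    """Fraction of builds in which every recorded test passed."""
    builds: Dict[int, bool] = {}
    for build, _test, passed in results:
        builds[build] = builds.get(build, True) and passed
    if not builds:
        raise ValueError("no results")
    return sum(builds.values()) / len(builds)
```

Because every run uses the same commit, any test that lands in both outcome sets failed for reasons other than a code change.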

We observed that a few tests sometimes passed and other times failed: in other words, they were flaky. We assigned the different test failures to the teams that owned them by creating issues and asking them to fix or remove the flaky tests. After the teams addressed all the issues, we ran our builds again on the same commits plus the flaky test fixes. That way, the fixes were the only change between runs: our CI configuration stayed the same, and no other code was touched unless it was necessary.

Repeating this process raised our build success ratio to a level we considered satisfactory. Once we reached it, we used Grafana Alerting to set up alerts that notify us when the ratio falls below our acceptable build success rate threshold. When new flaky tests appear, our build success rate is likely to start dropping again. When it drops below the threshold, we get an alert, and we create issues and assign them to the appropriate teams to fix.
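Grafana Alerting evaluates an alert rule periodically and only fires after the condition has held for the rule’s pending period, which keeps a single bad build from triggering an alert. Here is a minimal Python sketch of that logic applied to the build success ratio. The function name, and the threshold and number of evaluations in the test values, are hypothetical, not the ones we use.

```python
from typing import Sequence


def should_alert(ratios: Sequence[float], threshold: float, pending_evals: int) -> bool:
    """Fire when the ratio was below `threshold` for the last `pending_evals` evaluations.

    `ratios` holds one build success ratio per evaluation, oldest first.
    """
    if pending_evals <= 0:
        raise ValueError("pending_evals must be positive")
    recent = ratios[-pending_evals:]
    return len(recent) == pending_evals and all(r < threshold for r in recent)
```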

Flaky tests no more!

Well … that’s not true. Flaky tests can show up anywhere, particularly in large projects. The observability layer we have added on top of our CI builds helps us identify those tests and alerts us every time new ones are introduced. Most of the time, it’s difficult to tell whether a test will be flaky just by reviewing the code, which is why we need to monitor every test run in our CI flow.

Using tools as powerful as Grafana, its related data sources, and Drone CI gives us both a high-level and a low-level overview of our builds and flows. Getting the most out of our CI tool lets us take full ownership of the whole flow and act immediately, including when other teams and divisions need to be involved. That way, we can ensure that our pipelines are always healthy, Grafana is built and packaged properly, and we release the best quality products into the wild!

Grafana Cloud is the easiest way to get started with metrics, logs, traces, and dashboards. We have a generous free forever tier and plans for every use case. Sign up for free now!