How to autoscale Grafana Loki queries using KEDA

Grafana Loki is Grafana Labs’ open source log aggregation system inspired by Prometheus. Loki is horizontally scalable, highly available, and multi-tenant. In addition, Grafana Cloud Logs is our fully managed, lightweight, and cost-effective log aggregation system based on Grafana Loki, with free and paid options for individuals, teams, and large enterprises.

We operate Grafana Cloud Logs at a massive scale, with clusters distributed across different regions and cloud platforms such as AWS, Microsoft Azure, and Google Cloud. Our cloud offering also ingests hundreds of terabytes of data and queries petabytes of data every day across thousands of tenants. On top of that, there are large swings in resource consumption over the course of any given day, which makes it exceedingly difficult to manually scale clusters in a way that’s responsive for users and cost-effective for us.

In this blog post, we’ll describe how Grafana Labs’ engineers used Kubernetes Event-driven Autoscaling (KEDA) to better handle Loki queries on the backend.

Why we need autoscaling

One of the Loki read-path components in charge of processing queries for Grafana Cloud Logs is the querier, which is the component that matches LogQL queries to logs. As you can imagine, we need many queriers to achieve such a high level of throughput. However, that need fluctuates due to significant changes in our clusters’ workload throughout the day. Until recently, we manually scaled the queriers according to the workload, but this approach had three main problems.

- It creates delays in properly scaling up queriers in response to workload increases.

- We may over-provision clusters and leave queriers idle for sustained periods.

- It leads to operational toil because we have to manually scale queriers up and down.

To overcome these problems, we decided to add autoscaling capabilities to our querier deployments in Kubernetes.

Why we chose KEDA

Kubernetes comes with a built-in solution to horizontally autoscale pods: HorizontalPodAutoscaler (HPA). You can use HPA to configure autoscaling for workloads such as StatefulSets and Deployments based on metrics from Kubernetes’ metrics server. The metrics server exposes CPU and memory usage for pods out of the box, and other metrics can be made available to HPA through additional metrics APIs if needed.

CPU and memory usage metrics are often enough to decide when to scale up or down, but there may be other metrics or events to consider. In our case, we wanted to scale based on Prometheus metrics. HPA supports external metrics (the autoscaling/v2 API became stable in Kubernetes 1.23), so we could have used the Prometheus Adapter to scale based on Prometheus metrics. However, KEDA makes this even easier.

KEDA is an open source project initially developed by Microsoft and Red Hat, and we were already using it internally for a similar use case with Grafana Mimir. Beyond that familiarity, we chose it because we found it more mature and because it can scale workloads based on events and metrics from many sources, including Prometheus. KEDA builds on top of HPA: it runs its own Kubernetes metrics server, which serves these metrics to the HPAs that KEDA creates.

How we use KEDA on queriers

Queriers pull queries from the query scheduler queue and process them on the querier workers. Therefore, it makes sense to scale based on:

- The scheduler queue size

- The queries running in the queriers

On top of that, we want to avoid scaling up for short workload spikes. In those situations, the existing queriers would likely process the workload faster than it would take to scale up.

With this in mind, we scale based on the number of enqueued queries plus the number of running queries. We call these inflight requests. The query scheduler component exposes a new metric, cortex_query_scheduler_inflight_requests, which tracks inflight requests as a summary so that we can query percentiles. Using a percentile instead of the raw value lets us avoid scaling up when the metric spikes only briefly.
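The short Python sketch below illustrates why a percentile damps short spikes. It assumes the inflight-request values are observed at a regular interval, and it applies a simple nearest-rank quantile to a fixed set of hypothetical samples. A Prometheus summary actually estimates quantiles over a sliding time window, so this shows the idea rather than Loki's implementation.

```python
import math


def nearest_rank_quantile(samples, q):
    """Return the q-quantile (0 < q <= 1) of samples using the nearest-rank method."""
    if not samples:
        raise ValueError("samples must not be empty")
    ordered = sorted(samples)
    # Nearest rank: the smallest value with at least q * N samples at or below it.
    rank = math.ceil(q * len(ordered))
    return ordered[max(rank, 1) - 1]


# Hypothetical inflight-request observations: a steady load of 8 with a
# short spike to 40 that lasts 2 of 20 observations.
steady = [8] * 18
spiky = steady[:9] + [40, 40] + steady[9:]

print(max(spiky))                          # 40: the raw peak follows the spike
print(nearest_rank_quantile(spiky, 0.75))  # 8: p75 ignores the short spike
print(nearest_rank_quantile(spiky, 0.95))  # 40: a higher Q starts to see it
```

In this sample, the spike covers only 10% of the observations. It therefore sits above the 75th percentile, which stays at the steady level, while the 95th percentile picks it up.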

With the resulting metric, we can use the quantile (Q) and range (R) parameters in the query to fine-tune our scaling. The higher Q is, the more sensitive the metric is to short-lived spikes. As R increases, we reduce the metric variation over time. A higher value for R helps prevent the autoscaler from modifying the number of replicas too frequently.
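The effect of the range R can be illustrated with a short Python sketch. The `max_over_time` function below is a simplified, hypothetical stand-in for the PromQL function of the same name: each point becomes the maximum of the last `window` samples. The samples are made up. Counting how often the smoothed series changes value shows that a longer window produces fewer changes.

```python
def max_over_time(series, window):
    """Simplified stand-in for PromQL max_over_time: point i becomes the
    maximum of the last `window` samples (fewer at the start of the series)."""
    return [max(series[max(0, i - window + 1): i + 1]) for i in range(len(series))]


def value_changes(series):
    """Count how many times consecutive values differ."""
    return sum(1 for prev, cur in zip(series, series[1:]) if prev != cur)


# Hypothetical p75 samples that oscillate around a plateau.
p75 = [8, 12, 9, 13, 8, 12, 10, 14, 9, 12, 8, 13]

for window in (1, 2, 4):
    smoothed = max_over_time(p75, window)
    print(window, value_changes(smoothed), smoothed)
# window 1 -> 11 changes, window 2 -> 6 changes, window 4 -> 4 changes
```

The trade-off is that a longer window also holds peaks for longer. As a result, the autoscaler reacts later when load drops.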

```promql
sum(
  max_over_time(
    cortex_query_scheduler_inflight_requests{namespace="%s", quantile="<Q>"}[<R>]
  )
)
```

We then need to set a threshold so we can calculate the desired number of replicas from the value of the metric. Querier workers process queries from the queue, and each querier is configured to run six workers. We want to leave some headroom for spikes, so we aim to use 75% of those workers. Therefore, our threshold is the floor of 75% of six workers per querier (floor(4.5)), or four workers.

This equation defines the desired number of replicas from the current replica(s), the value of the metric, and the threshold we configured:

desiredReplicas = ceil[currentReplicas * ( currentMetricValue / threshold )]

For example, if we have one replica and 20 inflight requests, and we aim to use 75% of the six workers available per querier (four), the new desired number of replicas would be five.

desiredReplicas = ceil[1 * (20 / 4)] = 5
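The threshold and the scaling rule above fit in a few lines of Python. This sketch covers only the arithmetic, and the function names are hypothetical. The clamping step reflects the fact that the autoscaler never goes below its minimum or above its maximum replica count. The real HPA controller also applies a tolerance and stabilization rules, which are left out here.

```python
import math


def querier_threshold(workers_per_querier: int, target_utilization: float) -> int:
    """Target busy workers per querier: floor(workers * utilization)."""
    return math.floor(workers_per_querier * target_utilization)


def desired_replicas(current_replicas: int, current_metric_value: float, threshold: float) -> int:
    """desiredReplicas = ceil(currentReplicas * (currentMetricValue / threshold))."""
    return math.ceil(current_replicas * (current_metric_value / threshold))


def clamp_replicas(desired: int, min_replicas: int, max_replicas: int) -> int:
    """Keep a recommendation inside the configured replica bounds."""
    return max(min_replicas, min(desired, max_replicas))


threshold = querier_threshold(6, 0.75)    # floor(4.5) -> 4
print(threshold)                          # 4
print(desired_replicas(1, 20, threshold)) # ceil(1 * 20 / 4) -> 5
print(clamp_replicas(5, 10, 50))          # 10: raised to a minimum of 10
```

With a minimum of 10 replicas, such as the one in the ScaledObject below, the five replicas from the worked example would be raised to 10.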

With this in mind, we can now create our KEDA ScaledObject to control the queriers’ autoscaling. The following resource definition configures KEDA to pull metrics from http://prometheus.default:9090/prometheus and poll them every 30 seconds. It keeps the querier deployment between 10 and 50 replicas, uses the 75th percentile of the inflight requests metric, and takes its maximum value over two minutes. The scaling threshold remains four workers per querier.

```yaml
apiVersion: keda.sh/v1alpha1
kind: ScaledObject
metadata:
  name: querier
  namespace: <REDACTED INTERNAL DEV ENV>
spec:
  maxReplicaCount: 50
  minReplicaCount: 10
  pollingInterval: 30
  scaleTargetRef:
    kind: Deployment
    name: querier
  triggers:
  - metadata:
      metricName: querier_autoscaling_metric
      query: sum(max_over_time(cortex_query_scheduler_inflight_requests{namespace=~"REDACTED", quantile="0.75"}[2m]))
      serverAddress: http://prometheus.default:9090/prometheus
      threshold: "4"
    type: prometheus
```

Testing with Grafana k6

We performed several experiments and benchmarks to validate our proposal before deploying it to our internal and production environments. And to carry out those benchmarks, what better way than with Grafana k6?

Using k6’s Grafana Loki extension, we created a k6 test that iteratively sends different types of queries to a Loki development cluster from several virtual users (VUs; effectively runner threads). We tried to simulate real-world variable traffic using the following sequence:

- For two minutes, increase from 5 VUs to 50.

- Stay constant with 50 VUs for one minute.

- Increase from 50 VUs to 100 VUs in 30 seconds and back to 50 in another 30 seconds to create a workload spike.

- Repeat the previous spike.

- Finally, go from 50 VUs to zero within two minutes.
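The traffic profile above can be written down as a list of stages. The following Python sketch is a hypothetical encoding, not our actual k6 script. Each stage is a (duration, target VUs) pair, and the sketch assumes the number of VUs moves linearly toward each target, as with k6's `stages` option.

```python
# Hypothetical encoding of the k6 test profile as (duration_seconds, target_vus)
# stages; VUs move linearly from the previous target to the next one.
START_VUS = 5
STAGES = [
    (120, 50),   # ramp from 5 to 50 VUs over two minutes
    (60, 50),    # hold 50 VUs for one minute
    (30, 100),   # spike up to 100 VUs ...
    (30, 50),    # ... and back down to 50
    (30, 100),   # repeat the spike
    (30, 50),
    (120, 0),    # ramp down to zero over two minutes
]


def target_vus(t: float) -> float:
    """Target number of VUs t seconds into the test."""
    start = START_VUS
    elapsed = 0
    for duration, target in STAGES:
        if t <= elapsed + duration:
            fraction = (t - elapsed) / duration
            return start + (target - start) * fraction
        elapsed += duration
        start = target
    return float(STAGES[-1][1])  # past the last stage: stay at the final target


total_seconds = sum(duration for duration, _ in STAGES)
print(total_seconds)  # 420
for t in (0, 60, 120, 195, 210, 300, 360, 420):
    print(t, target_vus(t))
```

Under these assumptions, the whole profile lasts seven minutes. The two spikes peak at 100 VUs at the 3.5-minute and 4.5-minute marks.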

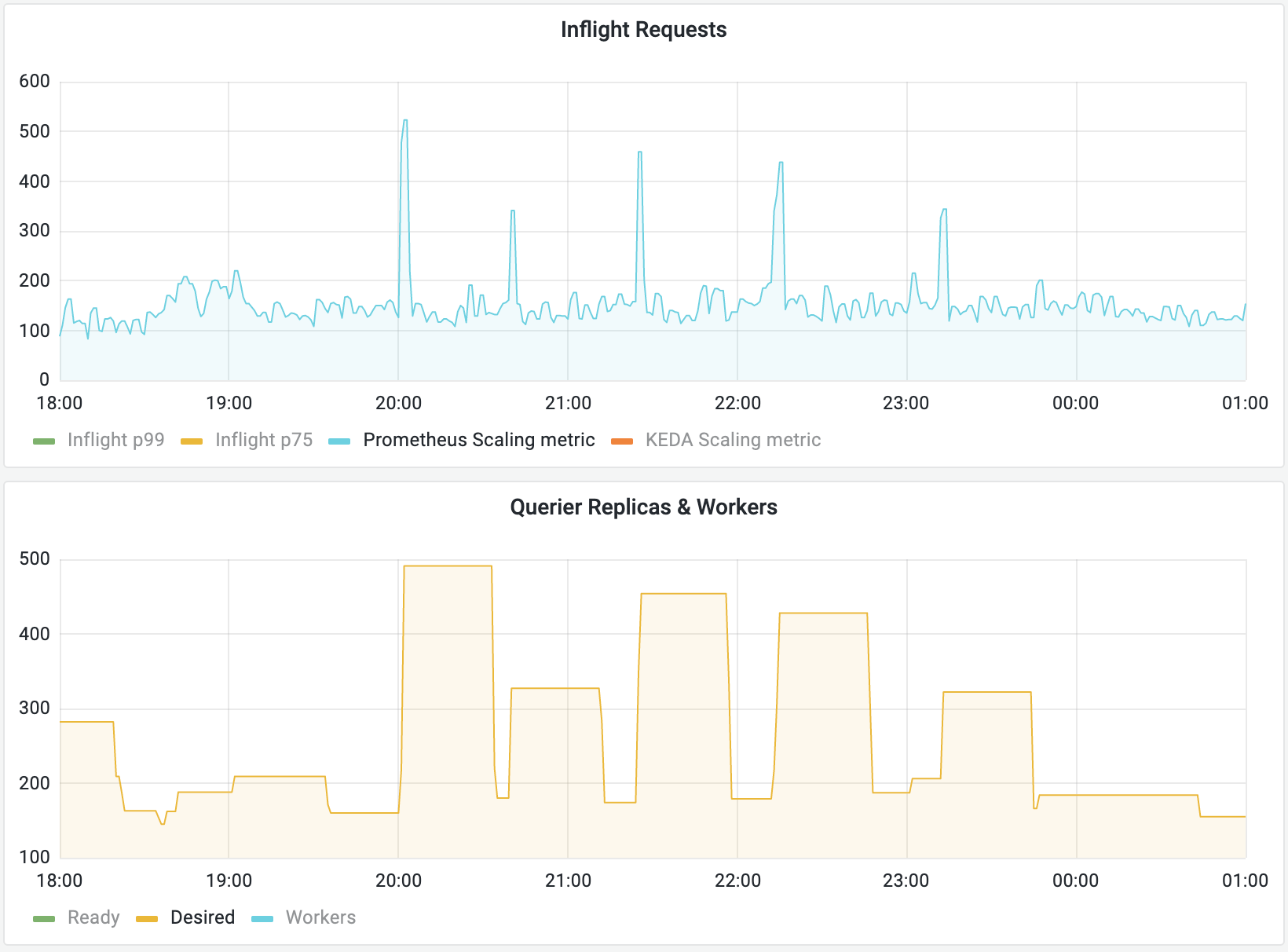

In the images below, we can see how the test looked in the cloud service, as well as how the number of inflight requests and querier replicas changed during the test. First, queriers scale up as the workload increases, and finally, queriers go back down a few minutes after the test finished.

Once we validated that our approach worked as expected, the next step was to try it with some real-world workloads.

Deploying and fine-tuning

To validate that our implementation met our needs in a real-world scenario, we deployed the autoscaler in our internal environments. Until this point, we had run our experiments in isolated environments where k6 generated all of the workload. Before deploying, we had to estimate appropriate values for the minimum and maximum number of replicas.

For the minimum number of replicas, we took the average of the 75th percentile of inflight requests over the previous seven days. We then divided it by four, the number of busy workers per querier at our 75% utilization target, and applied a lower bound of two replicas.
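The minimum-replica rule boils down to a short calculation. In the Python sketch below, the seven-day average of the 75th-percentile inflight requests is passed in as a plain number, and the input values are hypothetical. The lower bound of two replicas mirrors the `clamp_min(..., 2)` in the query.

```python
import math


def min_replicas(avg_p75_inflight: float,
                 workers_per_querier: int = 6,
                 target_utilization: float = 0.75,
                 lower_bound: int = 2) -> int:
    """ceil(avg p75 inflight / floor(workers * utilization)), never below lower_bound."""
    busy_workers_per_querier = math.floor(workers_per_querier * target_utilization)  # 4
    return max(lower_bound, math.ceil(avg_p75_inflight / busy_workers_per_querier))


print(min_replicas(37.0))  # ceil(37 / 4) = 10
print(min_replicas(3.0))   # ceil(3 / 4) = 1, raised to the lower bound of 2
```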

```promql
clamp_min(ceil(
  avg(
    avg_over_time(cortex_query_scheduler_inflight_requests{namespace=~"REDACTED", quantile="0.75"}[7d])
  ) / scalar(floor(vector(6 * 0.75)))
), 2)
```

For the maximum number of replicas, we took the larger of two values. The first is the current number of replicas. The second is the number of queriers needed to process the peak 50th percentile of inflight requests during the previous seven days. Since each querier runs six workers, we divide the inflight requests by six. The result has a lower bound of 20 replicas.
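The maximum-replica query also reduces to simple arithmetic. In PromQL, `A > B or B` returns A when it exceeds B and B otherwise, so the expression is effectively the larger of the two values. The Python sketch below makes that explicit. Its inputs are hypothetical, and the lower bound of 20 mirrors the `clamp_min(..., 20)` in the query.

```python
import math


def max_replicas(peak_p50_inflight: float,
                 current_replicas: int,
                 workers_per_querier: int = 6,
                 lower_bound: int = 20) -> int:
    """Larger of (queriers needed for the peak) and current replicas, never below lower_bound."""
    needed = peak_p50_inflight / workers_per_querier
    # PromQL `needed > current or current` keeps `needed` only when it is larger.
    candidate = needed if needed > current_replicas else current_replicas
    return max(lower_bound, math.ceil(candidate))


print(max_replicas(300.0, 30))  # 300 / 6 = 50 > 30 -> 50
print(max_replicas(90.0, 30))   # 90 / 6 = 15 <= 30 -> keep 30
print(max_replicas(60.0, 12))   # max(10, 12) = 12, raised to the lower bound of 20
```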

clamp_min(ceil(

(

max(

max_over_time(cortex_query_scheduler_inflight_requests{namespace=~"REDACTED", quantile="0.5"}[7d])

) / 6

>

max (kube_deployment_spec_replicas{namespace=~"REDACTED", deployment="querier"})

)

or

max(

kube_deployment_spec_replicas{namespace=~"REDACTED", deployment="querier"}

)

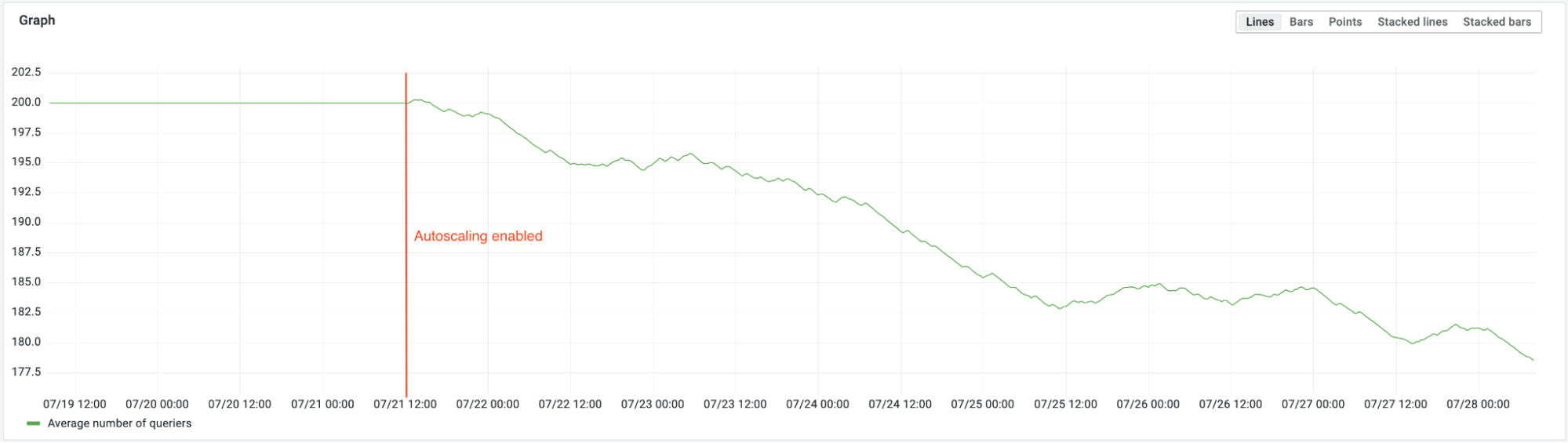

), 20)Having estimated some good numbers for minimum and max replicas, we deployed the autoscaler on our internal environment. As you can see in the following image, we achieved the desired outcome: The queriers scaled up as the workload increased and scaled down after the workload decreased.

You may have noticed frequent fluctuations in the number of replicas, also known as flapping, caused by constant changes in the scaling metric's value. If you scale down many pods too soon after scaling them up, Kubernetes spends a lot of resources scheduling pods. This can also hurt query latency, because new queriers must be brought up too often to handle queries. Fortunately, HPA provides a stabilization window to mitigate these frequent fluctuations, and as you may have guessed, so does KEDA.
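The scale-down stabilization rule can be modeled in a few lines of Python. The class below is a hypothetical, simplified model. It remembers each replica recommendation with its timestamp and returns the highest one still inside the window. The real HPA controller also handles scale-up policies and rate limits, which this sketch leaves out.

```python
from collections import deque


class ScaleDownStabilizer:
    """Hypothetical model of HPA scale-down stabilization.

    Returns the highest replica recommendation seen within the last
    `window_seconds`, so replicas only drop once every higher
    recommendation has left the window.
    """

    def __init__(self, window_seconds: float) -> None:
        self.window_seconds = window_seconds
        self._history: deque = deque()  # (timestamp, recommendation) pairs, oldest first

    def recommend(self, now: float, recommendation: int) -> int:
        self._history.append((now, recommendation))
        cutoff = now - self.window_seconds
        # Forget recommendations that are older than the window.
        while self._history and self._history[0][0] < cutoff:
            self._history.popleft()
        return max(r for _, r in self._history)


stabilizer = ScaleDownStabilizer(window_seconds=1800)  # a 30-minute window
samples = [(0, 20), (600, 12), (1200, 30), (1500, 10), (3000, 10), (3100, 10)]
applied = [stabilizer.recommend(t, r) for t, r in samples]
print(applied)  # [20, 20, 30, 30, 30, 10]
```

The newest recommendation is always inside the window, so a higher value takes effect immediately. A lower value only wins once every higher recommendation has aged out of the window.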

The spec.advanced.horizontalPodAutoscalerConfig.behavior.scaleDown.stabilizationWindowSeconds parameter lets you configure a stabilization window between zero seconds (scale immediately) and 3,600 seconds (one hour), with a default value of 300 seconds (five minutes). The algorithm is simple: HPA uses a sliding window that spans the configured time and sets the number of replicas to the highest recommendation computed within that window. In our case, we configured a stabilization window of 30 minutes:

```yaml
spec:
  advanced:
    horizontalPodAutoscalerConfig:
      behavior:
        scaleDown:
          stabilizationWindowSeconds: 1800
```

The image below shows that the number of replicas is now much more uniform. There are still some cases where the queriers scaled up shortly after scaling down, but there will always be edge cases where this happens. We still need to find a value for this parameter that suits the shape of our workloads, but overall, there are noticeably fewer spikes.

And even though we configured a considerably higher number of maximum replicas than we did when we manually scaled Loki, the average number of replicas is lower after adding the autoscaler. A lower average number of replicas translates to a lower cost of ownership for the querier deployment.

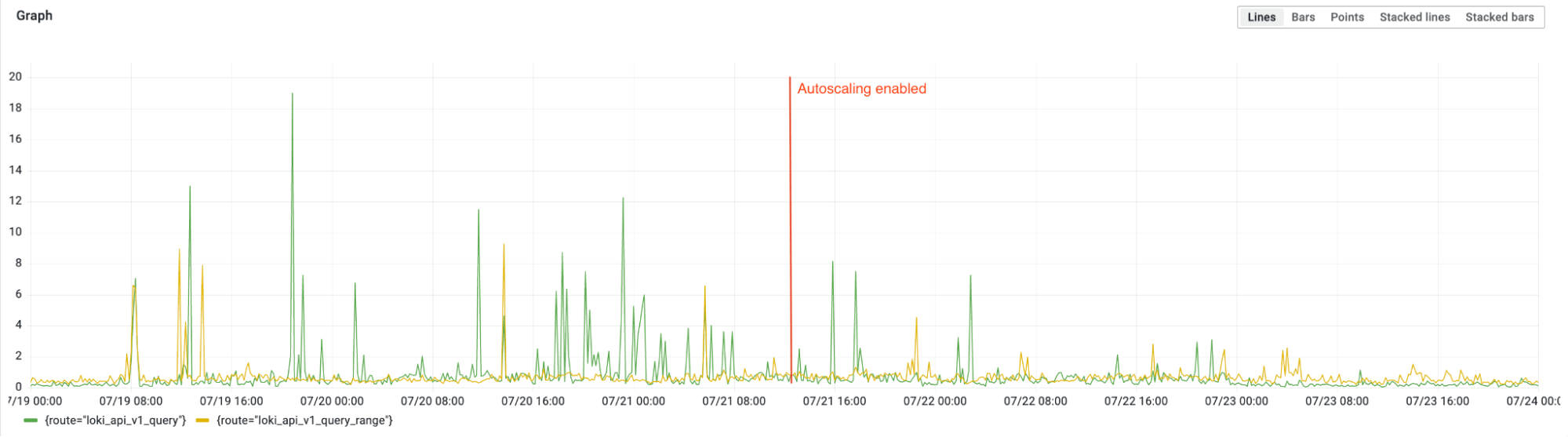

Moreover, we saw no signs of query latency degradation caused by the autoscaler. The following image shows the p90 latency, in seconds, of queries and range queries before and after we enabled autoscaling on July 21 at 12:00 UTC.

Finally, we needed a way to know when to increase our maximum number of replicas. For that, we created an alert that fires when the autoscaler runs at the maximum configured number of replicas for an extended time. The following alerting rule fires if its expression evaluates to true for at least three hours.

```yaml
- alert: LokiAutoscalerMaxedOut
  expr: kube_horizontalpodautoscaler_status_current_replicas{namespace=~"REDACTED"} == kube_horizontalpodautoscaler_spec_max_replicas{namespace=~"REDACTED"}
  for: 3h
  labels:
    severity: warning
  annotations:
    description: HPA {{ $labels.namespace }}/{{ $labels.horizontalpodautoscaler }} has been running at max replicas for longer than 3h; this can indicate underprovisioning.
    summary: HPA has been running at max replicas for an extended time
```

Learn more about autoscaling Grafana Loki

A new operational guide for autoscaling is available in the Loki documentation. We look forward to improving Loki’s ease of use and making Loki even more cost-efficient. We hope you found this blog post useful; be sure to check out more of our content about Loki.

Grafana Cloud is the easiest way to get started with metrics, logs, traces, and dashboards. We have a generous free forever tier and plans for every use case. Sign up for free now!