Grafana alerts as code: Get started with Terraform and Grafana Alerting

Alerting infrastructure is often complex, with many pieces of the pipeline living in different places. Scaling it across many teams and organizations is especially challenging.

As organizations grow in size, their observability footprint tends to grow along with them. For example, you may have many components, each of which needs a different set of alerts. You may have several teams, each with a different channel where notifications should be delivered. You might have several deployment pipelines, which all need to keep your alerts in sync with your code. Keeping track of all this manually is time-consuming and prone to errors.

As-code workflows help you tame the complexity of observability at scale. With Infrastructure as Code (IaC), you can define your observability tooling as part of the components that need it. You can store your entire configuration in version control. You can even deploy it right alongside the rest of your infrastructure, no manual tasks required!

That’s why we are happy to announce the general availability of Terraform provider support for Grafana Alerting. This provider makes it easy to create, manage, and maintain your entire Grafana Alerting stack as code.

Join us for a tour of the new functionality and get started managing your Grafana Alerting infrastructure as code today.

Connect Terraform to Grafana

In order to provision alerts in Grafana, you’ll need Grafana version 9.1 or later, and Terraform provider version 1.27.0 or later.

Create an API key for provisioning

You can create a normal Grafana API key to authenticate Terraform with Grafana. Most existing tooling using API keys should automatically work with the new Grafana Alerting support.

We also provide dedicated RBAC roles for alert provisioning. This lets you easily authenticate as a service account with the minimum permissions needed to provision your Grafana Alerting infrastructure. Or, if you prefer basic auth, that works too!
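Whichever credential you choose, it is passed to the provider through its auth argument, which accepts either an API key or basic auth credentials in username:password form. As a minimal sketch, assuming two hypothetical input variables named grafana_url and grafana_auth (the names are illustrative, not something the provider requires), you could keep the secret out of version control like this:

```hcl
// Hypothetical input variables; the names are illustrative only.
variable "grafana_url" {
  type        = string
  description = "Base URL of the Grafana instance"
}

variable "grafana_auth" {
  type        = string
  sensitive   = true // keeps the value out of plan and apply output
  description = "API key, or basic auth credentials as username:password"
}

provider "grafana" {
  url  = var.grafana_url
  auth = var.grafana_auth
}
```

With this layout, the values can be supplied at apply time, for example through the TF_VAR_grafana_url and TF_VAR_grafana_auth environment variables, so that no credential needs to be committed alongside the configuration.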

Configure the Terraform provider

The Grafana Alerting support is included as part of the Grafana Terraform provider. You can see an example of how to configure it below.

    terraform {
      required_providers {
        grafana = {
          source  = "grafana/grafana"
          version = ">= 1.28.2"
        }
      }
    }

    provider "grafana" {
      url  = "<YOUR_GRAFANA_URL>"
      auth = "<YOUR_GRAFANA_API_KEY>"
    }

Provision your Grafana Alerting stack

Now let’s get started with building our Grafana Alerting stack using Terraform!

Contact points and templates

Contact points are what connect an alerting stack to the outside world. They tell Grafana how to connect to your external systems and where to deliver notifications. We provide over 15 different integrations to choose from.

Let’s look at an example of how to create a contact point that sends alert notifications to Slack.

    resource "grafana_contact_point" "my_slack_contact_point" {
      name = "Send to My Slack Channel"

      slack {
        url  = "<YOUR_SLACK_WEBHOOK_URL>"
        text = <<EOT
    {{ len .Alerts.Firing }} alerts are firing!

    Alert summaries:
    {{ range .Alerts.Firing }}
    {{ template "Alert Instance Template" . }}
    {{ end }}
    EOT
      }
    }

After running terraform apply, you can go to the Grafana UI and check the details of our contact point. Since the resource was provisioned through Terraform, it will appear locked in the UI. This helps you make sure that your Alerting stack always stays in sync with your code.

We can also use the Test button to verify that the contact point is working correctly.

The text field, representing the content of the message that is sent, supports Go-style templating. This allows you to manage your Grafana Alerting message templates directly in Terraform.
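The same templating approach carries over to other integrations. The sketch below is an illustration rather than part of the original walkthrough: it assumes the email integration's addresses, subject, and message arguments, and the resource name, contact point name, and address are placeholders.

```hcl
// A sketch of a second contact point that delivers notifications by email.
// The resource name, display name, and address are placeholders.
resource "grafana_contact_point" "my_email_contact_point" {
  name = "Send to My Team Inbox"

  email {
    addresses = ["<YOUR_TEAM_EMAIL_ADDRESS>"]

    // Go-style templating is used here in the same way as in the Slack text field.
    subject = "{{ len .Alerts.Firing }} alerts are firing"
    message = <<EOT
{{ range .Alerts.Firing }}
Firing: {{ .Labels.alertname }}
{{ end }}
EOT
  }
}
```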

You can also re-use the same templates across many contact points. In the example above, we embed a shared template using the statement {{ template "Alert Instance Template" . }}. This fragment can then be managed separately in Terraform:

    resource "grafana_message_template" "my_alert_template" {
      name = "Alert Instance Template"

      template = <<EOT
    {{ define "Alert Instance Template" }}
    Firing: {{ .Labels.alertname }}
    Silence: {{ .SilenceURL }}
    {{ end }}
    EOT
    }

Notification policies and routing

Notification policies tell Grafana how to route alert instances to our contact points.

Let’s look at a notification policy that routes all alerts to the contact point we just created. We’ll also group the alerts by alertname, which means that any notifications coming from alerts which share the same name will be grouped into the same Slack message.

    resource "grafana_notification_policy" "my_policy" {
      group_by      = ["alertname"]
      contact_point = grafana_contact_point.my_slack_contact_point.name
    }

Mute timings

Mute timings provide the ability to mute alert notifications for defined time periods.

Let’s look at an example that mutes alert notifications on weekends.

    resource "grafana_mute_timing" "my_mute_timing" {
      name = "My Mute Timing"

      intervals {
        weekdays = ["saturday", "sunday"]
      }
    }

You can apply mute timings to specific notifications by referencing them in your notification policy. Let’s look at an example of how we might mute all notifications with the label a=b on weekends. Note that this is an updated version of the my_policy resource from the previous section, so it replaces that definition rather than sitting alongside it.

    resource "grafana_notification_policy" "my_policy" {
      group_by      = ["alertname"]
      contact_point = grafana_contact_point.my_slack_contact_point.name

      policy {
        matcher {
          label = "a"
          match = "="
          value = "b"
        }
        group_by      = ["alertname"]
        contact_point = grafana_contact_point.my_slack_contact_point.name
        mute_timings  = [grafana_mute_timing.my_mute_timing.name]
      }
    }

Alert rules

Finally, alert rules enable you to alert against any Grafana data source. This can be a data source that you already have configured, or you can even define your data sources in Terraform right next to your alert rules.

First, let’s create a data source to query and a folder to store our rules in. We’ll just use the built-in TestData data source for now.

    resource "grafana_data_source" "testdata_datasource" {
      name = "TestData"
      type = "testdata"
    }

    resource "grafana_folder" "rule_folder" {
      title = "My Rule Folder"
    }

Then, let’s define an alert rule. To learn more about what you can do with alert rules, check out our guide on how to create Grafana-managed alerts.

Rules are always organized into groups, so let’s wrap our rule using the grafana_rule_group resource.

    resource "grafana_rule_group" "my_rule_group" {
      name             = "My Alert Rules"
      folder_uid       = grafana_folder.rule_folder.uid
      interval_seconds = 60
      org_id           = 1

      rule {
        name      = "My Random Walk Alert"
        condition = "C"
        for       = "0s"

        // Query the datasource.
        data {
          ref_id = "A"
          relative_time_range {
            from = 600
            to   = 0
          }
          datasource_uid = grafana_data_source.testdata_datasource.uid
          // `model` is a JSON blob that sends datasource-specific data.
          // It's different for every datasource. The alert's query is defined here.
          model = jsonencode({
            intervalMs    = 1000
            maxDataPoints = 43200
            refId         = "A"
          })
        }

        // The query was configured to obtain data from the last 600 seconds.
        // Let's collapse that series into a single value using a Reduce stage.
        // The model below uses the `last` reducer.
        data {
          datasource_uid = "-100"
          // You can also create a rule in the UI, then GET that rule to obtain the JSON.
          // This can be helpful when using more complex reduce expressions.
          model = <<EOT
    {"conditions":[{"evaluator":{"params":[0,0],"type":"gt"},"operator":{"type":"and"},"query":{"params":["A"]},"reducer":{"params":[],"type":"last"},"type":"avg"}],"datasource":{"name":"Expression","type":"__expr__","uid":"__expr__"},"expression":"A","hide":false,"intervalMs":1000,"maxDataPoints":43200,"reducer":"last","refId":"B","type":"reduce"}
    EOT
          ref_id = "B"
          relative_time_range {
            from = 0
            to   = 0
          }
        }

        // Now, let's use a math expression as our threshold.
        // We want to alert when the value of stage "B" above exceeds 70.
        data {
          datasource_uid = "-100"
          ref_id         = "C"
          relative_time_range {
            from = 0
            to   = 0
          }
          model = jsonencode({
            expression = "$B > 70"
            type       = "math"
            refId      = "C"
          })
        }
      }
    }

The alert will then appear under the alert rules panel. Here, you can see whether or not the rule is firing. You can also see a visualization of each of the rule’s query stages.

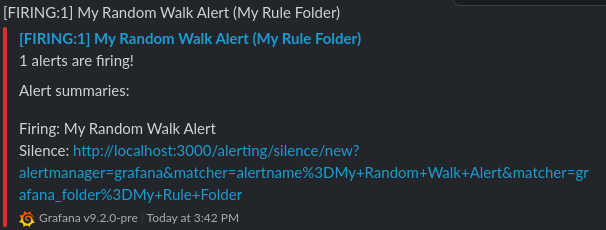

When our alert fires, Grafana will route a notification through the policy we defined earlier.

Since we configured that policy to send alert notifications to our Slack contact point, Grafana’s embedded Alertmanager will automatically post a message to our Slack channel!
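Routing decisions are driven by the labels on each alert instance, so a rule can opt in to a nested policy by carrying a matching label. The following is a sketch under stated assumptions, not a definitive configuration: it reuses the folder and TestData data source defined earlier, adds a second rule group whose names are illustrative, and assumes that the rule block's labels and annotations arguments and a pared-down reduce model behave as shown.

```hcl
// A sketch of a second rule group whose rule carries the label a=b.
// Resource, group, and rule names are illustrative.
resource "grafana_rule_group" "my_labelled_rule_group" {
  name             = "My Labelled Alert Rules"
  folder_uid       = grafana_folder.rule_folder.uid
  interval_seconds = 60

  rule {
    name      = "My Labelled Random Walk Alert"
    condition = "C"
    for       = "0s"

    // Labels are what notification policy matchers are compared against.
    labels = {
      a = "b"
    }

    // Annotations carry free-form context; the key used here is an example.
    annotations = {
      summary = "Random walk value is above 70"
    }

    // Stage A: query the last 600 seconds from the TestData data source.
    data {
      ref_id         = "A"
      datasource_uid = grafana_data_source.testdata_datasource.uid
      relative_time_range {
        from = 600
        to   = 0
      }
      model = jsonencode({
        intervalMs    = 1000
        maxDataPoints = 43200
        refId         = "A"
      })
    }

    // Stage B: reduce the series to its last value.
    // Assumption: this pared-down model is enough for a reduce expression.
    data {
      ref_id         = "B"
      datasource_uid = "-100"
      relative_time_range {
        from = 0
        to   = 0
      }
      model = jsonencode({
        expression = "A"
        reducer    = "last"
        type       = "reduce"
        refId      = "B"
      })
    }

    // Stage C: fire when the reduced value exceeds 70.
    data {
      ref_id         = "C"
      datasource_uid = "-100"
      relative_time_range {
        from = 0
        to   = 0
      }
      model = jsonencode({
        expression = "$B > 70"
        type       = "math"
        refId      = "C"
      })
    }
  }
}
```

With the nested policy from the mute timings section in place, notifications from this rule would match the a=b matcher and therefore be muted on weekends, while the rule without that label would continue to notify through the root policy.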

Learn more about Grafana Alerting

Since rolling out the new Grafana Alerting experience in Grafana 9.0, we’ve added lots of new features and improvements. To learn more, you can watch our deep dive on Grafana Alerting, or read our Grafana Alerting documentation.

If you’re looking for more ways to provision your alerting stack, check out our blog post on file provisioning.

Finally, if you don’t see a feature that you want, or if you have any feedback about either the new Terraform support or Grafana Alerting in general, please let us know! You can do this by opening an issue in the provider GitHub repository, the Grafana GitHub repository, or by asking in the #alerting channel in the Grafana Labs Community Slack.
