How Grafana Mimir helped Pipedrive overcome Prometheus scalability limits

Karl-Martin Karlson has been working on Pipedrive’s observability team for more than four years, implementing and supporting several observability platforms such as Grafana, Prometheus, Graylog, and New Relic.

In sales, as in life, you can’t control your results — but you can control your actions.

With that in mind, a team of sales professionals set out in 2010 to build a customer relationship management (CRM) tool that helps users visualize their sales processes and get more done. So, they created Pipedrive, the first CRM platform made for salespeople, by salespeople. Pipedrive is based around activity-based selling, a proven approach that’s all about scheduling, completing, and tracking activities.

Pipedrive, however, quickly had another revelation: You can’t control your actions (or scale a business) if you don’t have control over your observability. So we implemented Prometheus more than five years ago, and it quickly became the company standard for how a service should export monitoring metrics.

In our stack, when a new microservice is deployed, all a developer needs to do is add the prometheus_exporter annotation and include the correct libraries in their service. The metrics are then picked up automatically and can be queried through Grafana within one Prometheus scrape interval.

The problem: Scaling a federated Prometheus deployment

Our monitoring setup has grown along with the company, expanding from one physical location to a federated setup with three physical data centers in addition to AWS deployed in five product regions and five test/dev regions. Grafana is used across our entire company, with roughly 500 active users leveraging Grafana for visualization and alerting.

Up until this year, we used a federated deployment model: a Prometheus federator in each data center scraped metrics from all services, VMs, and hosts, and two main Prometheis (test and live) scraped metrics from these federators into a central aggregated location.

But around eight months ago, we started noticing problems with our live Prometheus instance, which began to crash and run out of memory for no obvious reason. Increasing resources only helped up to 32 vCPUs and 256 GB of memory; beyond that, adding more resources did not fix our issues. Each Prometheus restart also took up to 15 minutes to replay the write-ahead log (WAL). These were not delays we could afford, as our entire observability and alerting strategy depended on Prometheus being available.

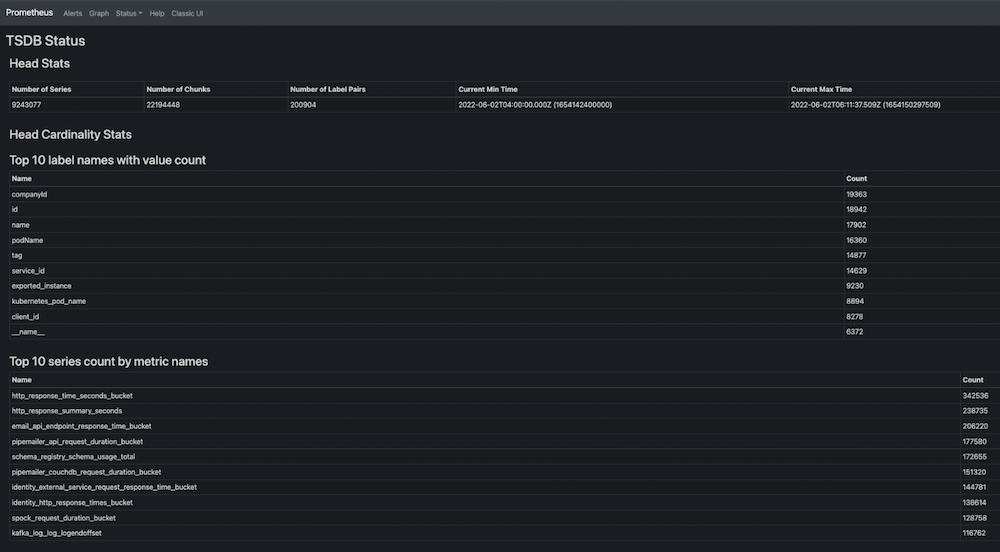

For the aggregated Prometheus instance, problems started when we hit ~8 million active series, ~20 million chunks, and ~200k label pairs.

Around the same time we started looking into replacing our aggregated Prometheus instances, Grafana Labs rolled out Grafana Mimir. Taking into account all the features Mimir had introduced, such as fast query performance and enhanced compactors, we decided to jump right in and implement Grafana Mimir into our stack.

Configuring Grafana Mimir at Pipedrive

It took a team of two engineers roughly two to three months to fully implement, scale, and optimize Grafana Mimir in our setup. We rolled Mimir out gradually while our entire Prometheus stack remained operational, replicating all the data to Mimir without any changes or service disruption.

To gracefully migrate from Prometheus to Mimir, we configured our Prometheus federators to remote write to Mimir and made Mimir available as a new data source in Grafana for everyone to test out during the PoC period.

After Mimir data was synced with all of our Prometheis, we transparently started to redirect query requests going to our aggregated Prometheus instances to Mimir, and we gradually optimized the Mimir configuration and deployment for all users and services using Prometheus metrics. In particular, we spent some time fine-tuning the Mimir per-tenant limits as well as CPU and memory requirements for each Mimir microservice, tailoring the configuration to our usage patterns.

The migration was fully transparent for our users — and a huge win for our observability team.

Grafana Mimir at Pipedrive: By the numbers

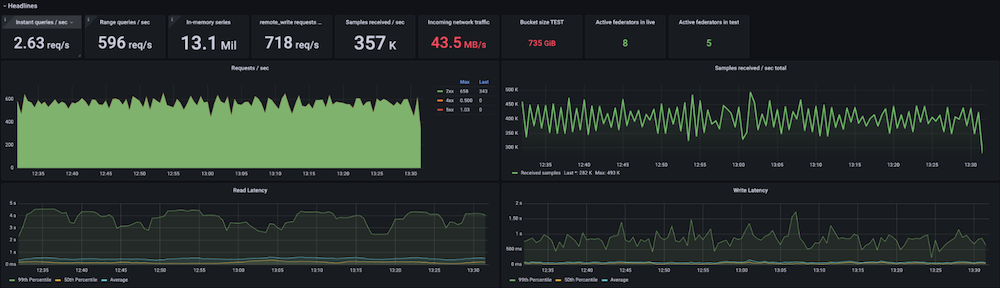

Pipedrive’s Grafana Mimir production cluster is currently running on Kubernetes, using the microservices deployment mode, handling the following traffic:

- Between 12 and 15 million active series, depending on the time of day

- 400K samples/sec received on the write path

- 600 queries/sec successfully executed on the read path on average

- Pod resources:

  - 52 ingesters (1.5 CPU / 9 GB memory)

  - 30 distributors (1.2 CPU / 1.5 GB memory)

  - 10 store-gateways (3 CPU / 5 GB memory)

  - 2 compactors (2 CPU / 3 GB memory)

  - 18 queriers (1.5 CPU / 3 GB memory)

  - 18 query-frontends (0.3 CPU / 0.5 GB memory)
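
Taken together, the figures above allow a rough back-of-the-envelope sizing check. The following Python sketch assumes that the CPU and memory values are per pod (the list does not say whether they are requests or limits) and that the write rate is steady throughout the day. The names `PODS`, `cluster_totals`, and `implied_sample_interval` are illustrative only and are not part of Mimir.

```python
# Per-pod figures copied from the list above: (replicas, CPU cores, memory in GB).
# Assumption: the values are per pod, not per component.
PODS = {
    "ingester": (52, 1.5, 9.0),
    "distributor": (30, 1.2, 1.5),
    "store-gateway": (10, 3.0, 5.0),
    "compactor": (2, 2.0, 3.0),
    "querier": (18, 1.5, 3.0),
    "query-frontend": (18, 0.3, 0.5),
}


def cluster_totals(pods):
    """Return (total CPU cores, total memory in GB) across all replicas."""
    cpu = sum(replicas * cores for replicas, cores, _ in pods.values())
    mem = sum(replicas * gb for replicas, _, gb in pods.values())
    return cpu, mem


def implied_sample_interval(active_series, samples_per_sec):
    """Average seconds between two samples of one series at a steady write rate."""
    return active_series / samples_per_sec


total_cpu, total_mem = cluster_totals(PODS)
print(f"total CPU: {total_cpu:.1f} cores, total memory: {total_mem:.1f} GB")

# Reported range of active series against the reported 400K samples/sec.
for series in (12_000_000, 15_000_000):
    interval = implied_sample_interval(series, 400_000)
    print(f"{series:,} active series -> one sample every ~{interval:.1f} s")
```

Under these assumptions the cluster adds up to roughly 180 CPU cores and 632 GB of memory, and the write rate corresponds to each series receiving a sample about every 30 to 38 seconds on average.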

Benefits of Grafana Mimir

The biggest benefit of migrating to Mimir was solving the Prometheus scalability limits we were facing. Previously, our main Prometheus instances were running out of memory under high load. This led to on-call engineers receiving pages day and night, and the whole company losing valuable observability data until Prometheus recovered, which typically took a long time. Migrating to Mimir gave us much more room to scale as Pipedrive services started to expose more and more metrics each day.

Moreover, with Grafana Mimir’s cardinality analysis API and active series custom trackers, we’ve been able to inspect which services expose the highest-cardinality metrics, which allows us to consistently fine-tune them.
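
As an illustration of this kind of analysis, here is a minimal Python sketch that ranks label values by series count. It works on a hand-written payload shaped like the response of Mimir's `/api/v1/cardinality/label_values` endpoint as described in the Mimir HTTP API reference; check the field names against the documentation for your Mimir version, since the endpoint is optional and has to be enabled. The `service` label, the numbers, and the `top_label_values` helper are hypothetical and do not describe Pipedrive's actual data or tooling.

```python
import json

# Hypothetical payload in the shape of a label_values cardinality response.
# All names and numbers here are made up for illustration.
SAMPLE_RESPONSE = json.loads("""
{
  "series_count_total": 1000,
  "labels": [
    {
      "label_name": "service",
      "label_values_count": 3,
      "series_count": 1000,
      "cardinality": [
        {"label_value": "billing", "series_count": 150},
        {"label_value": "search", "series_count": 600},
        {"label_value": "mailer", "series_count": 250}
      ]
    }
  ]
}
""")


def top_label_values(response, label_name, limit=10):
    """Return (value, series_count, share_of_all_series) tuples, largest first."""
    total = response["series_count_total"]
    for label in response["labels"]:
        if label["label_name"] == label_name:
            ranked = sorted(
                label["cardinality"],
                key=lambda item: item["series_count"],
                reverse=True,
            )
            return [
                (item["label_value"], item["series_count"], item["series_count"] / total)
                for item in ranked[:limit]
            ]
    return []  # the label was not part of the response


for value, count, share in top_label_values(SAMPLE_RESPONSE, "service", limit=2):
    print(f"{value}: {count} series ({share:.0%} of total)")
```

In a real setup the payload would come from an HTTP request to the Mimir query path rather than from a literal string.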

Also, a single harmful query (usually unintentional!) cannot bring down the entire Mimir stack. The worst-case scenario we have observed in Mimir is that a few query-frontend and/or querier pods get OOM-killed, but that has no significant impact on other requests happening at the same time. With Prometheus, the same scenario would have forced a restart of the entire service, which in our case meant roughly 10 minutes of downtime for our entire metrics stack and false alerts for all on-call engineers.

Mimir per-tenant limits have been very useful, too. They cap the amount of data ingested and queried on a per-tenant basis and prevent the whole system from being overloaded or even crashing under heavy load. Limits gave us time to fix the root cause of high-cardinality metrics at the product service level, without the whole observability stack being compromised while the issue was still open.
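
To make the idea concrete, the following Python sketch models a per-tenant cap on active series. It is a toy model of the concept only, not Mimir's implementation: the `SeriesLimiter` class, the tenant names, and the limit of two series are all hypothetical.

```python
from collections import defaultdict


class SeriesLimiter:
    """Toy per-tenant cap on active series; not how Mimir implements limits."""

    def __init__(self, max_series_per_tenant):
        self.max_series = max_series_per_tenant
        self.active = defaultdict(set)  # tenant -> set of known series identifiers

    def accept(self, tenant, series):
        """Return True if a sample for this series may be ingested."""
        known = self.active[tenant]
        if series in known:
            return True  # samples for already-tracked series are always accepted
        if len(known) >= self.max_series:
            return False  # a new series would push the tenant over its cap
        known.add(series)
        return True


limiter = SeriesLimiter(max_series_per_tenant=2)
results = [
    limiter.accept("team-a", 'http_requests_total{path="/a"}'),
    limiter.accept("team-a", 'http_requests_total{path="/b"}'),
    limiter.accept("team-a", 'http_requests_total{path="/c"}'),  # rejected: cap reached
    limiter.accept("team-a", 'http_requests_total{path="/a"}'),  # existing series still accepted
    limiter.accept("team-b", 'http_requests_total{path="/c"}'),  # other tenant is unaffected
]
print(results)
```

The point of the sketch is the isolation property: a tenant that suddenly produces too many new series is throttled on its own, while its existing series and every other tenant keep being ingested.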

The future of the Grafana LGTM stack at Pipedrive

We continue to work on optimizing our Grafana Mimir setup within our stack and finding what configurations and limits work for us. We are also leveraging preconfigured Grafana Mimir dashboards and alerts.

Grafana and Grafana Mimir have become the standard tools that we use to monitor services and processes at Pipedrive. And we indoctrinate our engineers from the get-go: Every new engineer joining Pipedrive receives an onboarding session explaining the basics of Grafana and Mimir to get them jump-started with our stack, which is only expanding from here. Since we’ve had a great experience with Grafana platforms so far, we are planning to test Grafana Loki and Grafana Tempo in the coming months to replace our existing logging and tracing platforms.
