How to monitor your Apache Spark cluster with Grafana Cloud

Here at Grafana Labs, when we’re building integrations for Grafana Cloud, we’re often thinking about how to help users get started on their observability journey. We like to focus some of our attention on the different technologies you might come across along the way. That way, we can share our tips on the best ways to interact with them while you’re using Grafana Labs products.

In this post, I’m going to focus on one of the most used platforms within the Apache family, Spark, and walk you through how to use the new Apache Spark integration for Grafana Cloud. (Don’t have a Grafana Cloud account? Sign up for free now!)

The basics

Simply put, Apache Spark is a powerful data processing framework that can quickly process large data sets and distribute the workload across multiple instances.

Along with being fast, Spark is also flexible. It can be deployed in a variety of ways, provides native bindings for the Java, Scala, Python, and R programming languages, and supports SQL, streaming data, machine learning, and graph processing.

Due to all this power, it has become one of the key open source distributed data processing systems for developers.

Monitoring Apache Spark with Grafana Cloud

An Apache Spark application is composed mainly of three components: the Driver Program, which runs the application's main() function and launches Executor processes; the Worker Nodes, which host those Executors; and the Cluster Manager, which allocates the Worker Nodes. For more information regarding the Spark cluster deployment architecture, please refer to the cluster mode overview docs.

With this first version of the Apache Spark integration for Grafana Cloud, we focused on the Worker Nodes, since they are responsible for actually doing the work. We plan to release new versions of this integration down the road, which will also include dashboards to monitor the Driver Program.

This integration is based on Spark's built-in Prometheus support (the PrometheusServlet metrics sink), available in Spark 3.0 and later. It is not enabled by default, so you need to turn it on by following the Spark monitoring documentation. This tutorial by dzlab might be helpful as well.
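As a rough illustration of what enabling the sink involves, here is a minimal Python sketch that renders the relevant `metrics.properties` entries. The keys and paths mirror the commented-out PrometheusServlet example that ships in Spark's `conf/metrics.properties.template`; the helper name `render_metrics_properties` is purely hypothetical, and your deployment may need different paths, so treat the Spark documentation as the source of truth.

```python
# Sketch: render the metrics.properties entries that turn on Spark's
# PrometheusServlet sink (Spark 3.0+). The keys mirror the commented-out
# example in Spark's conf/metrics.properties.template.

PROMETHEUS_SINK = {
    "*.sink.prometheusServlet.class": "org.apache.spark.metrics.sink.PrometheusServlet",
    "*.sink.prometheusServlet.path": "/metrics/prometheus",
    "master.sink.prometheusServlet.path": "/metrics/master/prometheus",
    "applications.sink.prometheusServlet.path": "/metrics/applications/prometheus",
}


def render_metrics_properties(settings=PROMETHEUS_SINK):
    """Return the settings as Java-properties text, one key=value per line.

    Hypothetical helper for illustration; it is not part of Spark or Grafana.
    """
    return "\n".join(f"{key}={value}" for key, value in settings.items()) + "\n"


if __name__ == "__main__":
    # Print to stdout; redirect the output into $SPARK_HOME/conf/metrics.properties.
    print(render_metrics_properties(), end="")
```

After writing the file, restart the Spark master and workers so the new sink is picked up.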

The next step is to configure the Grafana Agent to scrape your Apache Spark nodes. Please refer to the Spark integration documentation for details.
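Before pointing the Grafana Agent at a node, it can help to confirm that the node actually serves metrics. The sketch below fetches a Prometheus text endpoint and parses it into a dictionary using only the Python standard library. The URL is an assumption (a worker web UI on its default port 8081, with the sink path set to `/metrics/prometheus`), and the function names are hypothetical; adjust both to match your cluster.

```python
import urllib.request


def parse_prometheus_text(text):
    """Parse Prometheus text exposition format into a {series: value} dict.

    Comment lines (# HELP / # TYPE) and blank lines are skipped. The series
    key keeps any {label="..."} part verbatim; an optional trailing
    timestamp is ignored.
    """
    samples = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        if "}" in line:
            # Split after the closing brace so spaces inside labels are safe.
            end = line.rindex("}") + 1
            series, rest = line[:end], line[end:]
        else:
            parts = line.split(None, 1)
            series, rest = parts[0], parts[1] if len(parts) > 1 else ""
        fields = rest.split()
        if not fields:
            continue  # malformed line without a value
        samples[series] = float(fields[0])
    return samples


def fetch_metrics(url, timeout=5.0):
    """Download a metrics endpoint and return the parsed samples."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return parse_prometheus_text(response.read().decode("utf-8"))


if __name__ == "__main__":
    # Assumed endpoint: a worker on localhost with the default web UI port.
    metrics = fetch_metrics("http://localhost:8081/metrics/prometheus")
    print(f"scraped {len(metrics)} series")
```

If the request fails or returns no series, the sink is probably not enabled on that node, and the Grafana Agent will not be able to scrape it either.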

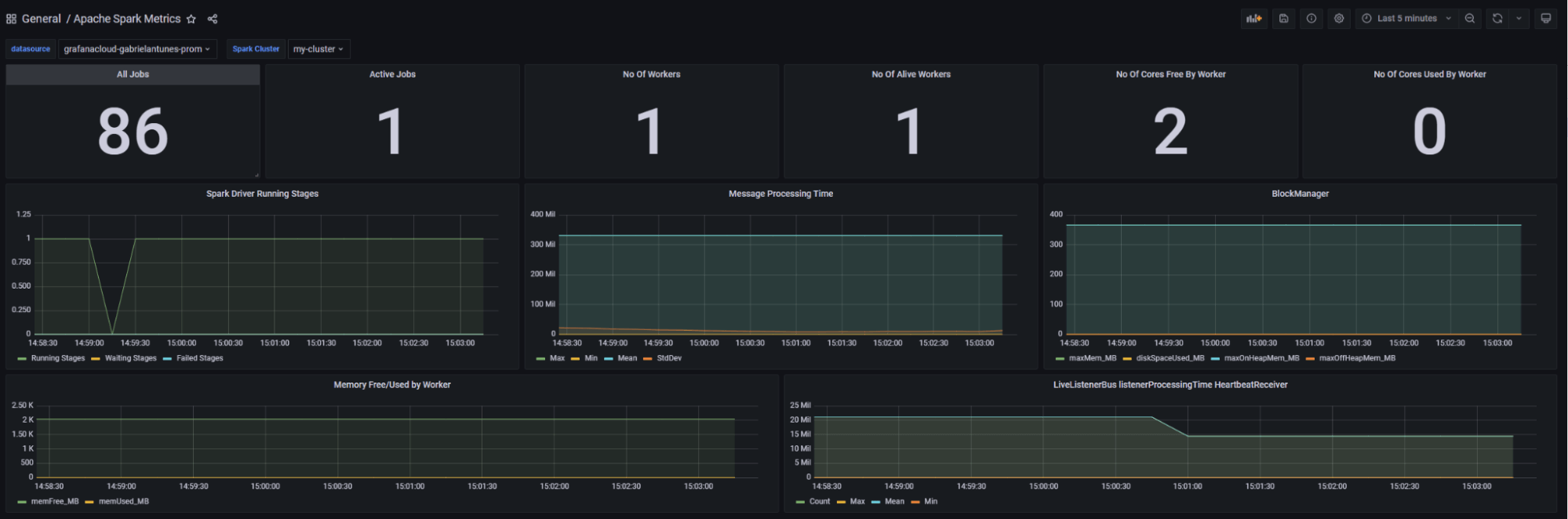

The integration is composed of a single dashboard that gives you a unified overview of the status of the Worker Nodes and the jobs running on them, including the count of active jobs, the count of workers in the cluster, the count of cores used per worker, memory consumption-related metrics, and more.

Give the Apache Spark integration for Grafana Cloud a try, and let us know what you think, including any other features that would help you fully observe your Spark clusters. You can reach out to us in our Grafana Labs Slack Community via #Integrations.

Grafana Cloud is the easiest way to get started with metrics, logs, traces, and dashboards. We have a generous free forever tier and plans for every use case. Sign up for free now!