Gardener, SAP's Kubernetes-as-a-service open source project, is moving its logging stack to Loki

Kristian Zhelyazkov is a developer at SAP working on Gardener, the SAP-driven Kubernetes-as-a-service open source project. In this guest blog post, he explains why the project is moving its logging stack to Loki.

About Gardener

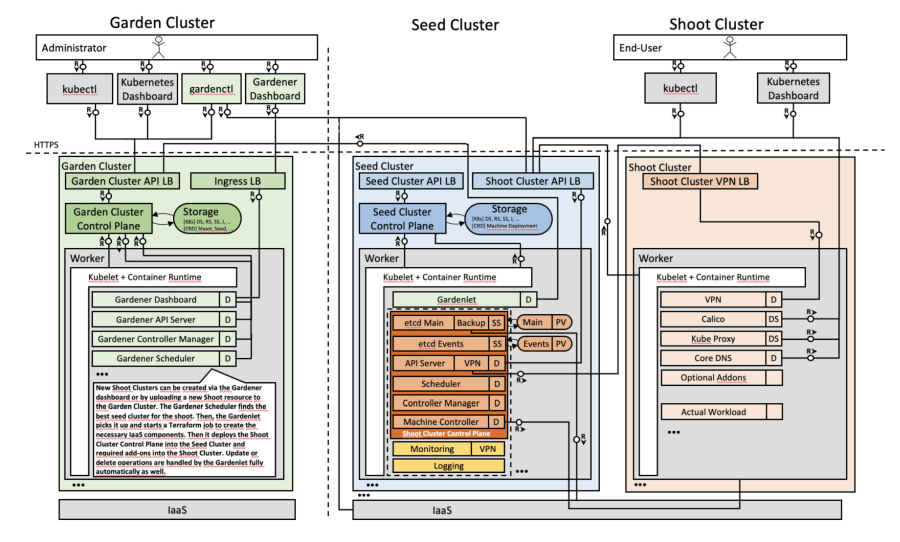

Gardener delivers fully managed Kubernetes clusters as a service and provides a fully validated extensibility framework that can be adjusted to any programmatic cloud or infrastructure provider.

Gardener’s main principle is to leverage Kubernetes concepts for all of its tasks.

In essence, Gardener is an extension API server that comes along with a bundle of custom controllers. It introduces new API objects in an existing Kubernetes cluster (which is called the garden cluster) to manage end-user Kubernetes clusters (called shoot clusters).

These shoot clusters are described via declarative cluster specifications, and their control planes are hosted on dedicated seed clusters. The controllers observe these specifications, bring up the clusters, reconcile their state, perform automated updates, and make sure the clusters are always up and running.
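As a rough illustration of this declarative pattern, the sketch below compares a desired specification with the observed state and returns the actions needed to converge. It is not Gardener's code: `ShootSpec`, its fields, and `reconcile` are hypothetical names chosen only for this example.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class ShootSpec:
    """Hypothetical, heavily simplified stand-in for a shoot cluster specification."""
    name: str
    kubernetes_version: str
    worker_count: int


def reconcile(desired: ShootSpec, observed: Optional[ShootSpec]) -> List[str]:
    """Return the actions needed to move the observed state to the desired one."""
    if observed is None:
        # Nothing exists yet, so the only action is to bring the cluster up.
        return [f"create cluster {desired.name}"]
    actions: List[str] = []
    if observed.kubernetes_version != desired.kubernetes_version:
        actions.append(f"update Kubernetes to {desired.kubernetes_version}")
    if observed.worker_count != desired.worker_count:
        actions.append(f"scale workers to {desired.worker_count}")
    return actions
```

A real controller runs a comparison like this repeatedly, so that any drift from the specification is corrected over time; an empty action list means the cluster already matches its specification.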

Challenges

Gardener manages thousands of Kubernetes clusters around the globe for SAP’s development, quality, and internal and external production requirements. It also serves other community members (PingCAP, FI-TS, and more) as their multi-cloud orchestration tool of choice.

Gardener provides an automatically created and configured logging stack for each cluster. The end user has access to the logs from control plane components like kube-apiserver, kube-controller-manager, and kube-scheduler via automatically created Grafana dashboards.

Every Gardener cluster comes with a monitoring stack and a logging stack for its control plane. The monitoring stack was straightforward, but the logging stack was more of a challenge because it had to meet these requirements:

Resource efficient (CPU, memory)

Secure and reliable

Multi-tenant (per cluster)

Dynamic vertical scaling

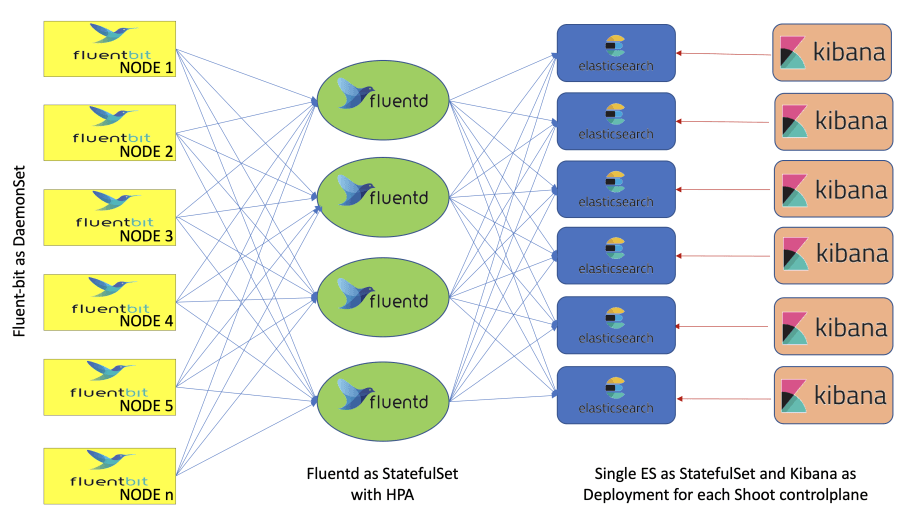

The old logging architecture

Limitations

Two middle layer components (Fluentd and Elasticsearch) made it difficult to trace an issue.

Elasticsearch is a heavyweight Java application, which makes dynamic vertical scaling very hard.

High resource consumption for Fluentd.

Using Grafana as the monitoring UI and Kibana as the logging UI is not optimal from a usability point of view.

How we found a better solution with Loki

Two years ago, colleagues from the Gardener team attended KubeCon North America and saw a presentation about Loki. They liked what they saw and shared their impressions with the team. After some more discussions and research, we decided to start using Loki in Gardener.

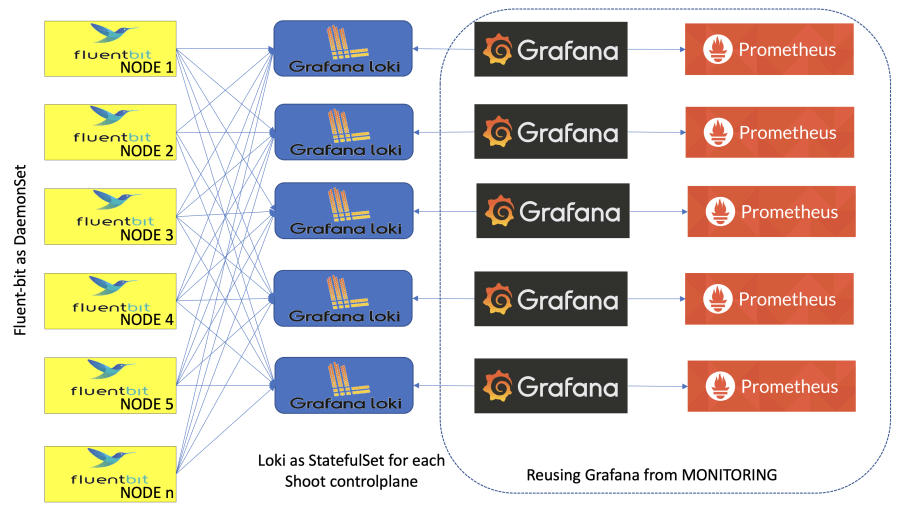

For each Kubernetes cluster, Gardener keeps the control plane logs in a separate Loki instance. Each Loki instance has 30 GB of storage and a retention period of two weeks, so those 30 GB must be enough to hold two weeks of control plane logs.

I have been testing Loki for the past month in my local setup, which keeps logs for one control plane (one Loki instance), and it has worked well. Log storage came to around 10 GB for two weeks.
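The numbers above can be turned into a quick back-of-the-envelope check. The snippet uses only the figures from this post (30 GB volume, two-week retention, roughly 10 GB observed) and assumes a constant log rate, which a real control plane will not have.

```python
VOLUME_GB = 30.0      # per-instance volume size, from the post
RETENTION_DAYS = 14   # two-week retention, from the post
OBSERVED_GB = 10.0    # approximate usage over two weeks in the local test

daily_gb = OBSERVED_GB / RETENTION_DAYS   # average ingest per day
headroom = VOLUME_GB / OBSERVED_GB        # how many times the observed load fits
days_until_full = VOLUME_GB / daily_gb    # time to fill the volume with no retention

print(f"{daily_gb:.2f} GB/day, {headroom:.1f}x headroom, "
      f"about {days_until_full:.0f} days to fill without retention")
```

At the observed rate, that works out to about 0.71 GB per day, three times the needed capacity, and about 42 days before the volume would fill if nothing were deleted.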

In the future, I would like Loki to support retention based on volume size. Currently, if the volume (30 GB) fills up while the oldest logs are only one week old, Loki will crash because it cannot ingest any more logs. It would be good if hitting this limit triggered deletion of the oldest logs. (Editor’s note: There’s an open issue addressing this.)
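To make the idea concrete, here is a minimal sketch of what size-based retention could look like. It is not how Loki works today and does not use any Loki API: `Chunk` and `select_for_deletion` are hypothetical names, and the sketch assumes stored logs can be listed as blocks with a creation time and a size.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Chunk:
    """Hypothetical record for one stored block of logs."""
    name: str
    created_at: float  # Unix timestamp
    size_bytes: int


def select_for_deletion(chunks: List[Chunk], limit_bytes: int) -> List[Chunk]:
    """Pick the oldest chunks to delete so the remaining total fits in limit_bytes."""
    total = sum(c.size_bytes for c in chunks)
    doomed: List[Chunk] = []
    # Walk from oldest to newest and stop as soon as the rest fits.
    for chunk in sorted(chunks, key=lambda c: c.created_at):
        if total <= limit_bytes:
            break
        doomed.append(chunk)
        total -= chunk.size_bytes
    return doomed
```

In practice the limit would sit somewhat below the volume size, so that deletion starts before the disk is completely full.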

Gardener’s new logging stack architecture

Improvements

Fluentd was replaced by the Fluent Bit Golang plugin, which dynamically routes each log message to the right Loki instance.

It is really easy to scale Loki vertically.

There is one harmonized monitoring and logging UI (Grafana), so the metrics and the logs are in one place. Prometheus scrapes metrics from Loki. For now, we have only one alert, for up/down status.

This stack requires far fewer resources (CPU, memory) than the previous (EFFK) stack.
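The dynamic routing mentioned in the first improvement can be pictured roughly as follows. The actual plugin is written in Go; this Python sketch only illustrates the idea. It assumes each log record carries the namespace of the control plane it came from and that every control plane's Loki instance is reachable under a URL derived from that namespace. The function name, the record layout, and the URL template are all assumptions made for this example; only the `/loki/api/v1/push` path is Loki's standard push endpoint.

```python
from typing import Dict, Optional


def loki_url_for(record: Dict[str, str], url_template: str) -> Optional[str]:
    """Build the push URL of the Loki instance that should receive this record."""
    namespace = record.get("namespace")
    if not namespace:
        # No tenant information; the caller decides whether to drop or reroute.
        return None
    return url_template.format(namespace=namespace)


# Hypothetical service naming; a real deployment may differ.
TEMPLATE = "http://loki.{namespace}.svc:3100/loki/api/v1/push"
print(loki_url_for({"namespace": "shoot--dev--demo", "log": "hello"}, TEMPLATE))
```

Deriving the target from the record itself means new clusters need no per-cluster configuration in the log shipper: a new namespace simply resolves to a new Loki instance.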

Conclusion

There is an open pull request for adding the new logging stack to Gardener’s repo. I recently presented it at Gardener’s community meeting, and everyone was happy with the preliminary results. Now we are just waiting for it to be deployed on our production landscapes.