How We’re (Ab)using HashiCorp’s Consul at Grafana Labs

HashiCorp’s Consul is a distributed, highly available system that provides a service mesh, including service discovery, configuration, and segmentation functionality.

Cortex uses Consul’s KV store to share the information it needs to distribute data among its components. Consul has been useful at Grafana Labs, but as we scaled up our operations, problems started to appear. So we came up with workarounds that use, or arguably “abuse,” the service so that it runs at greater scale and with higher efficiency.

Consul and Cortex

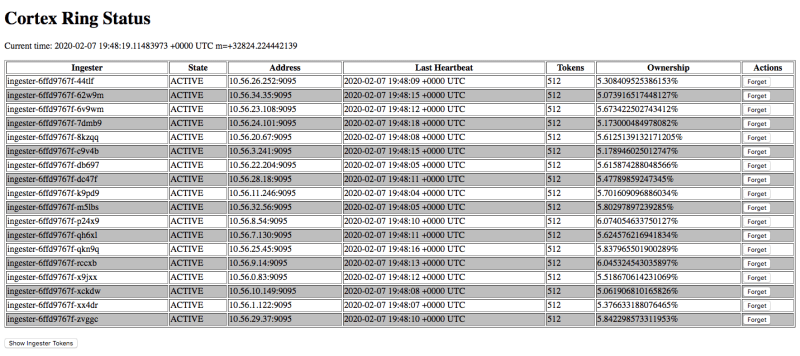

Consul is a consistent KV store that uses Raft underneath. This means that you can run a Consul cluster and be sure that all the nodes in the cluster will return the same data when queried. In Cortex, we use Consul with only a single key: the Cortex ring structure, which contains the list of ingesters (their names, the list of owned tokens, and the timestamp of the last update). When distributors send data to ingesters, they know which ingester to go to based on this ring. Arguably, we don’t need a highly consistent or a highly available store for this, and the original Cortex design document even talked about simply using a gossip protocol to share the ring.

When ingesters join the cluster, they randomly generate their tokens and then create an entry in the ring by writing those tokens to it. When distributors receive data through an API, they check the ring to see which range of tokens each ingester is assigned and send the data over accordingly.
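To make the lookup concrete, here’s a minimal sketch of how a distributor could pick an ingester from a token ring. It is not Cortex’s actual code: the `hash_key` and `owner` names, the use of CRC32, and the rule that a key belongs to the first token at or after its hash (wrapping around) are all assumptions for illustration.

```python
import bisect
import zlib


def hash_key(series_key: str) -> int:
    """Map a series key onto a 32-bit token space (CRC32 is an assumption)."""
    return zlib.crc32(series_key.encode("utf-8"))


def owner(ring, key_hash):
    """Return the ingester that owns key_hash.

    ring is a list of (token, ingester_name) tuples sorted by token.
    Assumed rule: the first token >= key_hash wins, wrapping to the start.
    """
    tokens = [token for token, _ in ring]
    index = bisect.bisect_left(tokens, key_hash) % len(ring)
    return ring[index][1]


# A tiny hand-written ring; real ingesters would pick random tokens.
ring = sorted([(100, "ingester-a"), (200, "ingester-b"), (300, "ingester-c")])
```

With this ring, a hash of 150 lands on `ingester-b`, and a hash above 300 wraps around to `ingester-a`.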

To write to Consul, an ingester uses the Compare-and-Swap (CAS) operation. It first downloads the latest version of the ring, updates the ring in memory, and then sends it back to the store. But this only works if the ring hasn’t changed while this process is happening. The ingester knows the version of the ring it has received, so when it sends the ring back to Consul, it can include the original version number. Consul then checks if that version number is still current and only updates the ring if it is.
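The read-modify-write cycle above can be sketched with an in-memory stand-in for the store. `VersionedKV` and `update_ring` are hypothetical names, and the retry limit is an assumption; against a real Consul, the same idea maps onto a KV write that carries the index returned by the earlier read.

```python
import copy


class VersionedKV:
    """In-memory stand-in for a single versioned key (not a Consul client)."""

    def __init__(self):
        self.value = {}
        self.version = 0

    def get(self):
        # Hand out a copy so callers can mutate it freely.
        return copy.deepcopy(self.value), self.version

    def cas(self, new_value, expected_version):
        # Accept the write only if nobody else wrote in the meantime.
        if expected_version != self.version:
            return False
        self.value = new_value
        self.version += 1
        return True


def update_ring(store, mutate, max_retries=10):
    """Apply mutate(ring) with CAS; return the number of attempts used."""
    for attempt in range(1, max_retries + 1):
        ring, version = store.get()
        mutate(ring)
        if store.cas(ring, version):
            return attempt
    raise RuntimeError("ring update kept conflicting, giving up")
```

Every failed attempt costs another read and another write, which is why contention between many writers translates directly into extra load on the store.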

The hash ring is used on the read path as well. When queriers handle queries, they use the ring data to fetch the latest data from the ingesters.

Basically, the distributors and queriers are constantly watching the ring for changes, and ingesters update the ring.

Initial Problems When Scaling

Each ingester updates its own ring entry with the current timestamp every five seconds (heartbeating). If a distributor or querier sees an ingester whose timestamp hasn’t been updated in more than 30 seconds, it treats that ingester as unhealthy (e.g. it may have crashed).
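As a rough illustration of that health check, the sketch below filters a ring by heartbeat age. The ring layout (a dict of entries with a `timestamp` field) and the function name are assumptions; only the 30-second threshold comes from the text.

```python
HEARTBEAT_TIMEOUT_SECONDS = 30


def healthy_ingesters(ring, now, timeout=HEARTBEAT_TIMEOUT_SECONDS):
    """Return the names of ingesters whose last heartbeat is recent enough.

    ring maps ingester name -> {"timestamp": seconds}; an entry is unhealthy
    once its heartbeat is MORE than `timeout` seconds old.
    """
    return sorted(
        name
        for name, entry in ring.items()
        if now - entry["timestamp"] <= timeout
    )
```

For example, at `now=100` an ingester last seen at 70 is still healthy, while one last seen at 69 is not.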

We were doing fine with up to 20 ingesters, each updating its heartbeat every 5 seconds, which is 4 updates per second in total. But when we scaled to 50 ingesters, CAS started breaking down. With many ingesters updating at the same time, many CAS operations fail, and the ingesters then need to retry them, which increases the load on Consul. Furthermore, with this many ingesters, the size of the ring can reach a megabyte or two (although we have optimized this since).

By default Consul was storing up to 1,024 versions of a key. That initially seemed like an efficient number of versions for the compaction process, but we discovered that because Consul uses BoltDB, it wasn’t actually deleting the old versions. Instead, it was turning the data into zeroes – so with a 2 megabyte key with a thousand versions, it was suddenly trying to write 2 gigabytes of zeroes. Needless to say, this slowed down the process and caused the ingesters to fail their heartbeating.

So we came up with two solutions:

- Compact every 128 versions instead of 1,024.

- Mount a memory disk and write to RAM instead of writing directly to disk.
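To see why the first change helps, here’s a back-of-the-envelope sketch. It assumes the amount of data zeroed per compaction is simply the key size times the number of retained versions, which matches the 2-gigabyte figure above but is a simplification; the function name is made up for this example.

```python
MB = 1024 * 1024


def zeroed_bytes_per_compaction(key_size_bytes, versions_retained):
    """Simplified model: every retained version of the key gets zeroed."""
    return key_size_bytes * versions_retained


before = zeroed_bytes_per_compaction(2 * MB, 1024)  # the original setting
after = zeroed_bytes_per_compaction(2 * MB, 128)    # compacting every 128 versions
```

Under that assumption, `before` works out to 2,048 MB and `after` to 256 MB, so each compaction has an eighth of the data to write.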

By essentially “abusing” Consul in this way, we were able to scale up the number of ingesters without running into the issues caused by BoltDB’s built-in method of deletion.

Valuing Consistency over Reliability

Now that we could run at a greater scale, we started looking at what kind of guarantees we actually needed. Since Consul is a distributed system that is normally run as a three-node cluster, it offers high reliability. But what we needed were consistency guarantees.

After a deep dive, we realized it was safe to lose the reliability guarantee; even if the Consul data is lost accidentally, the ingesters, in their heartbeat, will still update the ring with all the tokens while updating the timestamp, giving Consul all the data it requires.
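The self-healing behavior can be sketched as follows. This is a toy model, not Cortex code: `heartbeat` and the ring layout are assumptions, and the point is only that each heartbeat rewrites the ingester’s whole entry, so an empty store fills back up within one heartbeat round.

```python
def heartbeat(ring, name, tokens, now):
    """Write this ingester's full entry (tokens plus timestamp) into the ring."""
    ring[name] = {"tokens": sorted(tokens), "timestamp": now}
    return ring


# What each ingester remembers about itself, independent of the store.
ingesters = {"ingester-a": [100, 900], "ingester-b": [400, 1300]}

ring = {}  # the store came back empty after losing its data
for name, tokens in ingesters.items():
    heartbeat(ring, name, tokens, now=1000)
```

After the loop, `ring` again holds both ingesters with their tokens and fresh timestamps, without any recovery step on the store side.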

So here, we’re “abusing” the system by running only a single Consul instance, instead of having to worry about three-node replication and clustering. Consul can then be down for up to 30 seconds with no issues, since distributors and queriers only treat an ingester as unhealthy once its heartbeat is more than 30 seconds old. With an ephemeral single-node Consul, you still get consistency and performance without any outages. If an issue does arise, Consul restarts and carries on; because there is no data to recover, it starts in less than a second.

Fixing Our Use of the API

The distributors and queriers ask Consul to be notified any time the ring changes. They send the request to Consul and wait for the notification – and when it arrives, they send a new request for another notification, so that they are constantly updated.
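The request-and-wait cycle described above looks roughly like the loop below. `blocking_get` is a hypothetical callable standing in for a Consul blocking query (a read that passes the last seen index and returns when the key changes or the wait times out); the scripted fake exists only so the sketch can run without a server. Resetting the index when it goes backwards follows our reading of HashiCorp’s guidance for blocking queries.

```python
def watch(blocking_get, on_change, rounds):
    """Run `rounds` blocking reads, calling on_change for each new version."""
    index = 0
    for _ in range(rounds):
        value, new_index = blocking_get(index)
        if new_index < index:
            # The index went backwards (e.g. the store was reset): start over.
            index = 0
            continue
        if new_index > index:
            on_change(value)
            index = new_index
        # new_index == index means the wait timed out with no change.


def make_scripted_store(responses):
    """Fake store that replays (value, index) pairs and records requests."""
    replies = iter(responses)
    requested = []

    def blocking_get(index):
        requested.append(index)
        return next(replies)

    return blocking_get, requested
```

Each watcher keeps exactly one request outstanding, so every ring update has to be delivered once per watcher.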

With the number of distributors and queriers growing, Consul got busier and busier with distributing notifications to them. Even if the total number of updates is low (e.g. with 30 ingesters each updating the ring every 15 seconds, it’s only two updates per second in total), sending each update to all distributors and queriers is a lot of work (with 30 distributors and 30 queriers, it adds up to 120 notifications sent out per second). This was, in essence, killing Consul by abusing its API.
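The arithmetic in that example is easy to check with a couple of helper functions (the names are made up for this sketch):

```python
def ring_updates_per_second(ingesters, heartbeat_interval_seconds):
    """Each ingester writes the ring once per heartbeat interval."""
    return ingesters / heartbeat_interval_seconds


def notifications_per_second(updates_per_second, watchers):
    """Every update is pushed to every watching distributor and querier."""
    return updates_per_second * watchers


updates = ring_updates_per_second(30, 15)               # 30 ingesters, every 15 s
fanout = notifications_per_second(updates, 30 + 30)     # 30 distributors + 30 queriers
```

Here `updates` is 2 per second and `fanout` is 120 per second: the write rate stays small, but the notification load grows with the number of watchers.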

HashiCorp has its own recommendations for how to use Consul’s blocking API, which Cortex uses for watching the ring. One of the suggestions is to rate-limit watches. By allowing each distributor and querier to issue only one API call per second, we give Consul more air to breathe and let it operate more efficiently.
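One simple way to enforce “at most one call per second” is a minimum-interval limiter in front of each watch request. The class below is a sketch under that assumption, not the limiter Cortex uses; the clock and sleep functions are injectable so it can be exercised without real waiting.

```python
import time


class MinIntervalLimiter:
    """Allow one call per `interval` seconds; later callers sleep until allowed."""

    def __init__(self, interval, clock=time.monotonic, sleep=time.sleep):
        self.interval = interval
        self.clock = clock
        self.sleep = sleep
        self._next_allowed = None  # no call has been made yet

    def wait(self):
        now = self.clock()
        if self._next_allowed is not None and now < self._next_allowed:
            # Too early: sleep off the remainder of the interval.
            self.sleep(self._next_allowed - now)
            now = self._next_allowed
        self._next_allowed = now + self.interval
```

A watcher would call `limiter.wait()` before issuing each watch request, so a burst of ring changes turns into at most one request per second per watcher.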

What’s Next

We’re in the process of introducing a gossip protocol to share the ring among ingesters, distributors, and queriers. This will make it possible to run Cortex without a Consul dependency, while still retaining the properties required for our usage. But for now, these three methods of “abuse” have allowed us to use Consul in a more streamlined way.