Lock-free Observations for Prometheus Histograms

There’s a famous Go proverb that states: Don’t communicate by sharing memory; share memory by communicating.

At GopherCon UK 2019, Björn Rabenstein, an engineer at Grafana Labs and Prometheus maintainer, told the audience that when it comes to observations for Prometheus histograms, that saying doesn’t hold true.

Rabenstein’s presentation was “kind of a sequel” to a presentation he gave at GopherCon in 2015. He said that anyone who doesn’t know how to increment a floating point number in a concurrency-safe way should first watch [that talk] before trying to understand his latest one. He also recommended “Concurrency Patterns in Go” by Arne Claus.

Before delving into specifics, Rabenstein briefly discussed communicating sequential processes (CSP). Even though he thinks CSP is a nice way to circumvent certain problems with concurrent programming, he said it “might be too high-level” for a simple low-level operation like incrementing a single number.

For several of those operations in the Prometheus instrumentation library, he chose to use the atomic package instead – but said doing so is “close to committing heresy.” (The Go site even includes a warning about using the functions with “great care.”) Still, he said his rationale was two-fold: First, he had a low-level application. On top of that, “You just have to increment something very often in a concurrency-safe way,” he said, “and the atomic package gives you really simple tools leveraging CPU instructions to atomically increment a number – usually an integer. If you want to do it with a float, it’s a bit more complicated.”

Understanding Histograms – and the Atomic Package Problem

Prometheus uses histograms as a powerful type of metric; a histogram is essentially a bucketed counter. The problem, he said, is that updating a histogram doesn’t boil down to incrementing one number. A histogram is updated through something called an observation.

“What you observe is often latency of requests served by your microservice instance – let’s say it’s 104 milliseconds for a particular request,” he said. You then increment the bucket that counts requests with 100 to 200 millisecond latency, but you also have to increment both the total count of all observations and the sum of observations.

Prometheus is a pull-based monitoring system, which can create a separate issue: “The Prometheus server walks around and collects metrics for monitored binaries – for example your microservice instance,” he said. “And that’s a race – as in you have to increment three numbers. And if the Prometheus server comes along and scrapes those values when you had already incremented the first two but were not done yet with the third one, then you have a data race.”

Nothing bad happens in your code, but you get inconsistent results, and you have to do something about it. “The atomic package has no way to increment three numbers atomically,” he said, “yet we have naively used separate atomic operations on every single number, assuming nobody would ever notice the inconsistencies. But if your open source project is in widespread use, everything will be noted at some point.” (And in this case, that happened in 2017 when someone reported that “histogram.Write creates invalid results when racing with observe.”)
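To make the inconsistency concrete, here is a minimal sketch of the naive approach, in which each number is updated with its own atomic operation. The type `naiveHistogram` and its helpers are hypothetical names chosen for illustration; this is not the Prometheus client code.

```go
package main

import (
	"fmt"
	"math"
	"sort"
	"sync/atomic"
)

// naiveHistogram is a hypothetical, simplified histogram. Every number is
// updated atomically, but the three updates together are not atomic.
type naiveHistogram struct {
	count       uint64    // total number of observations
	sumBits     uint64    // bit pattern of the float64 sum of observations
	upperBounds []float64 // sorted inclusive upper bounds of the buckets
	buckets     []uint64  // one counter per bound, plus a final +Inf bucket
}

func newNaiveHistogram(upperBounds ...float64) *naiveHistogram {
	return &naiveHistogram{
		upperBounds: upperBounds,
		buckets:     make([]uint64, len(upperBounds)+1),
	}
}

func (h *naiveHistogram) observe(v float64) {
	// Index of the first bucket whose upper bound is >= v.
	i := sort.SearchFloat64s(h.upperBounds, v)
	atomic.AddUint64(&h.buckets[i], 1)
	// A scrape that reads at this point sees the bucket incremented,
	// but not yet the count or the sum.
	atomic.AddUint64(&h.count, 1)
	for {
		old := atomic.LoadUint64(&h.sumBits)
		updated := math.Float64bits(math.Float64frombits(old) + v)
		if atomic.CompareAndSwapUint64(&h.sumBits, old, updated) {
			return
		}
	}
}

// sum decodes the stored bit pattern back into a float64.
func (h *naiveHistogram) sum() float64 {
	return math.Float64frombits(atomic.LoadUint64(&h.sumBits))
}

func main() {
	h := newNaiveHistogram(0.1, 0.2, 0.5)
	h.observe(0.104) // a 104 ms request lands in the (0.1, 0.2] bucket
	fmt.Println(h.buckets, h.count, h.sum())
}
```

Each individual update is safe, yet a reader that runs between two of them sees a bucket total that disagrees with the count, which is the kind of invalid result the issue report described.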

The Search for a Solution

Rabenstein ruled out Go’s CSP approach because he said it’s not efficient enough for a low-level operation like this. He then considered another concurrency pattern: mutexes (also called locks, and familiar to Java programmers), which provide an easy, straightforward solution to the problem. But mutexes are very slow in highly concurrent scenarios, he said, so he ruled out that option, too.

What he wanted was something lock-free or wait-free (Arne Claus’ presentation explains those terms), so he studied the Go Memory Model, which spells out when one goroutine is guaranteed to see another goroutine’s writes, given that compilers and processors may reorder instructions. The document itself states that if you have to read the whole thing to understand the behavior of your program, “you’re being too clever.”
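For comparison, the mutex-based option could look roughly like the sketch below. The names `lockedHistogram`, `observe`, and `snapshot` are hypothetical. The result is always consistent, but every observation has to take the lock.

```go
package main

import (
	"fmt"
	"sync"
)

// lockedHistogram is a hypothetical, simplified histogram guarded by a mutex.
type lockedHistogram struct {
	mtx     sync.Mutex
	buckets []uint64
	count   uint64
	sum     float64
}

// observe updates all three numbers while holding the lock.
func (h *lockedHistogram) observe(bucket int, v float64) {
	h.mtx.Lock()
	defer h.mtx.Unlock()
	h.buckets[bucket]++
	h.count++
	h.sum += v
}

// snapshot returns a consistent copy of all three numbers.
func (h *lockedHistogram) snapshot() ([]uint64, uint64, float64) {
	h.mtx.Lock()
	defer h.mtx.Unlock()
	return append([]uint64(nil), h.buckets...), h.count, h.sum
}

func main() {
	h := &lockedHistogram{buckets: make([]uint64, 3)}
	var wg sync.WaitGroup
	for i := 0; i < 100; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			h.observe(1, 0.5) // all goroutines contend for the same lock
		}()
	}
	wg.Wait()
	fmt.Println(h.snapshot())
}
```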

Rabenstein admitted he read the whole document.

Why? People are running Prometheus code everywhere, like for rail platform displays in Germany and to monitor wind turbines. “So to stop global warming, I really have to make my code efficient,” he said. “That’s my excuse for being too clever.”

Even after reading it, he still wasn’t sure whether atomic operations have a guaranteed order, so he did more research and eventually became confident that the order of atomic operations is guaranteed even when observed from another goroutine.

The Code

Important note: Even if the method is called Write, this is about the read path. One person’s read is another person’s write . . .

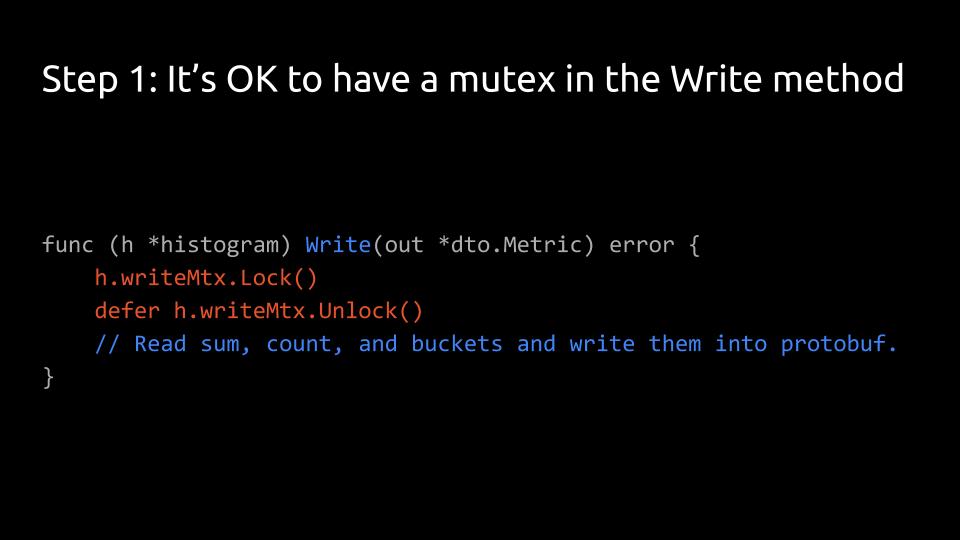

The Write method uses a mutex, despite the performance concerns raised above. This is because the method is only called once per scrape, every 15 seconds or so.

The observe path, however, should not be locked with a mutex.

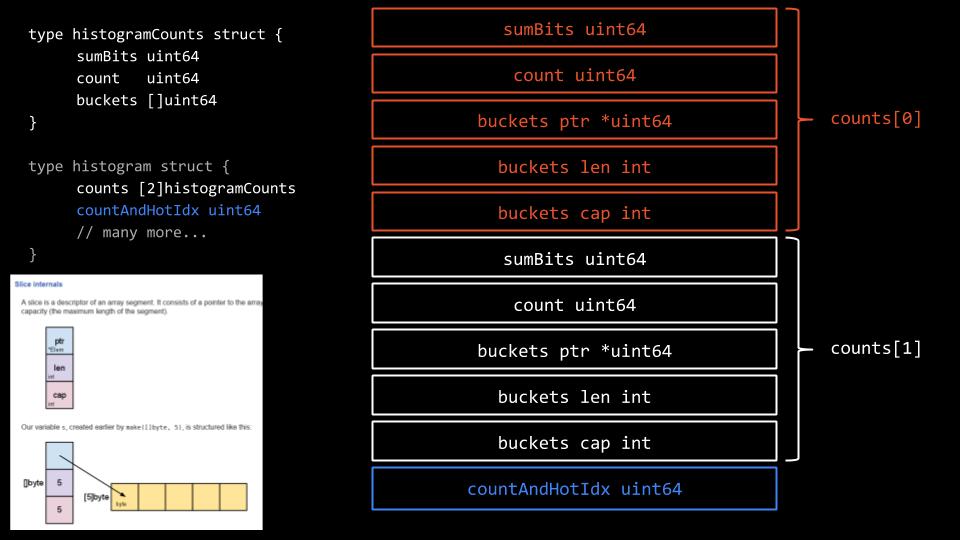

This is the new struct “histogramCounts,” bundling everything counted: the observed values in “buckets,” the total number of observations in “count,” and the sum of observations in “sumBits.” The sum is a float, but to use atomic operations on it, you have to save its bit pattern in a “uint64.”

Rabenstein used “histogramCounts” twice here. (This is a rare use of an array instead of a slice.) Of these two counts, he declared one hot and one cold. The observations all work on the hot counts, and the cold counts are used in the read path.

“hotIdx” is used to mark which of the two counts is the hot one. “Since atomic operations only work on integers,” he said, “I use a uint32 as the smallest type that has atomic operations. If ‘hotIdx’ is even, counts zero is the hot counts. If it’s odd, counts one is the hot counts.”
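Based on the description above, the data layout might be sketched as follows. The field names come from the text; the constructor `newHistogram` and the helper `hotCounts` are hypothetical additions for illustration.

```go
package main

import (
	"fmt"
	"sync/atomic"
)

// histogramCounts bundles everything that is counted.
// Assumes a 64-bit platform; 32-bit alignment is a separate concern.
type histogramCounts struct {
	sumBits uint64   // bit pattern of the float64 sum of observations
	count   uint64   // total number of observations
	buckets []uint64 // observation count per bucket
}

type histogram struct {
	counts [2]histogramCounts // one hot, one cold
	hotIdx uint32             // even: counts[0] is hot; odd: counts[1] is hot
}

func newHistogram(numBuckets int) *histogram {
	h := &histogram{}
	for i := range h.counts {
		h.counts[i].buckets = make([]uint64, numBuckets)
	}
	return h
}

// hotCounts returns the counts that observations currently act on.
func (h *histogram) hotCounts() *histogramCounts {
	return &h.counts[atomic.LoadUint32(&h.hotIdx)%2]
}

func main() {
	h := newHistogram(3)
	fmt.Println(h.hotCounts() == &h.counts[0]) // hotIdx is 0, so counts[0] is hot
	atomic.AddUint32(&h.hotIdx, 1)
	fmt.Println(h.hotCounts() == &h.counts[1]) // hotIdx is 1, so counts[1] is hot
}
```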

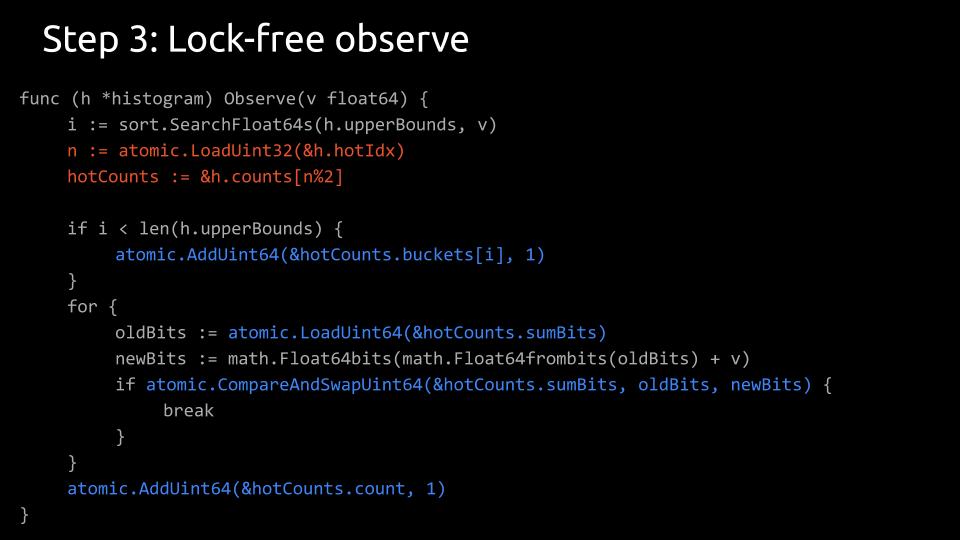

The observe method above is the write path. Rabenstein atomically loaded “hotIdx” to find out which counts is the hot counts, then he atomically incremented all the appropriate numbers in the hot counts. This is all lock-free and concurrency-safe.

The complicated part here is how to atomically increment a float. He said it may look weird with the “CompareAndSwap,” etc., but it works. (His 2015 talk offers a detailed explanation.)
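The usual way to add to a float atomically is a compare-and-swap loop on its bit pattern. The sketch below shows that pattern; the function names are hypothetical and the code is an illustration rather than a copy of the slide.

```go
package main

import (
	"fmt"
	"math"
	"sync"
	"sync/atomic"
)

// atomicAddFloat adds v to the float64 whose bit pattern is stored in *bits.
func atomicAddFloat(bits *uint64, v float64) {
	for {
		oldBits := atomic.LoadUint64(bits)
		newBits := math.Float64bits(math.Float64frombits(oldBits) + v)
		// The swap only succeeds if nobody changed *bits since the load.
		if atomic.CompareAndSwapUint64(bits, oldBits, newBits) {
			return
		}
		// Another goroutine got there first; retry with the fresh value.
	}
}

// loadFloat reads the float64 stored as a bit pattern in *bits.
func loadFloat(bits *uint64) float64 {
	return math.Float64frombits(atomic.LoadUint64(bits))
}

// addConcurrently adds v to a shared sum from n goroutines.
func addConcurrently(n int, v float64) float64 {
	var sumBits uint64
	var wg sync.WaitGroup
	for i := 0; i < n; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			atomicAddFloat(&sumBits, v)
		}()
	}
	wg.Wait()
	return loadFloat(&sumBits)
}

func main() {
	fmt.Println(addConcurrently(1000, 0.5))
}
```

No goroutine ever blocks on a lock here; under contention a goroutine may simply have to retry the loop.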

Back to the Write method (which is the read path, as noted above) . . .

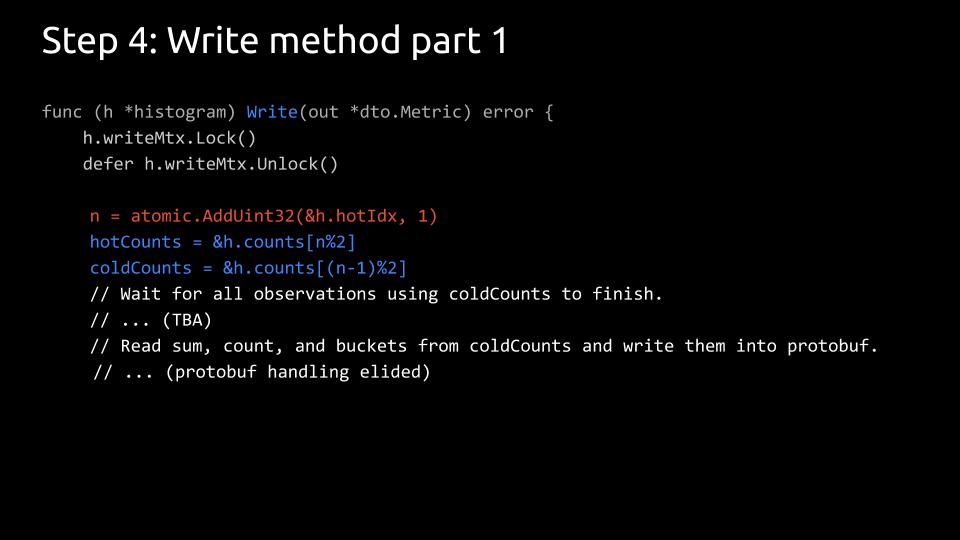

If you only ever look at the cold counts, nothing will ever arrive there, so first you have to swap the hot and cold counts by incrementing the hot index by one, which makes even odd and odd even.

Now the formerly hot count is cold and can be safely read once all ongoing observations have finished. The formerly cold count is now hot and will be used in future observations.

The challenge is waiting for the right amount of time. (There’s more on that topic below.)

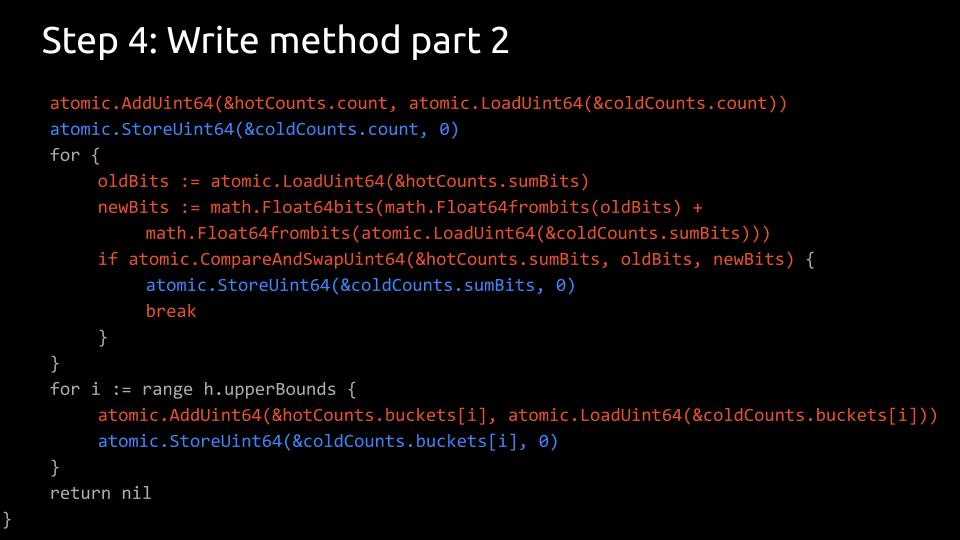

This second part of the write method isn’t as complicated as it looks, Rabenstein said.

He had to reset the cold counts so they can be used in the next swap. And he also had to update the hot counts to include all the values from the cold counts – of course, you want to continue counting. That’s all fine to do in atomic operations here.
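The swap, read, merge, and reset steps can be sketched as below. To stay short, this hypothetical version tracks only the count (no sum or buckets) and deliberately leaves out the wait for ongoing observations, so it is only safe when no observation is in flight during `write`.

```go
package main

import (
	"fmt"
	"sync"
	"sync/atomic"
)

// histogramCounts is a simplified stand-in holding only the count.
type histogramCounts struct {
	count uint64
}

type histogram struct {
	counts   [2]histogramCounts
	hotIdx   uint32
	writeMtx sync.Mutex // write is called rarely, so a mutex is fine here
}

func (h *histogram) observe() {
	hot := &h.counts[atomic.LoadUint32(&h.hotIdx)%2]
	atomic.AddUint64(&hot.count, 1)
}

func (h *histogram) write() uint64 {
	h.writeMtx.Lock()
	defer h.writeMtx.Unlock()

	// Swap: incrementing hotIdx turns even into odd and odd into even.
	idx := atomic.AddUint32(&h.hotIdx, 1)
	hot := &h.counts[idx%2]
	cold := &h.counts[(idx+1)%2]

	// A complete implementation has to wait here until all observations
	// that started on the formerly hot counts have finished.

	count := atomic.LoadUint64(&cold.count)
	// Merge the cold value into the hot counts so counting continues,
	// then reset the cold counts for the next swap.
	atomic.AddUint64(&hot.count, count)
	atomic.StoreUint64(&cold.count, 0)
	return count
}

func main() {
	h := &histogram{}
	h.observe()
	h.observe()
	fmt.Println(h.write())
	h.observe()
	fmt.Println(h.write())
}
```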

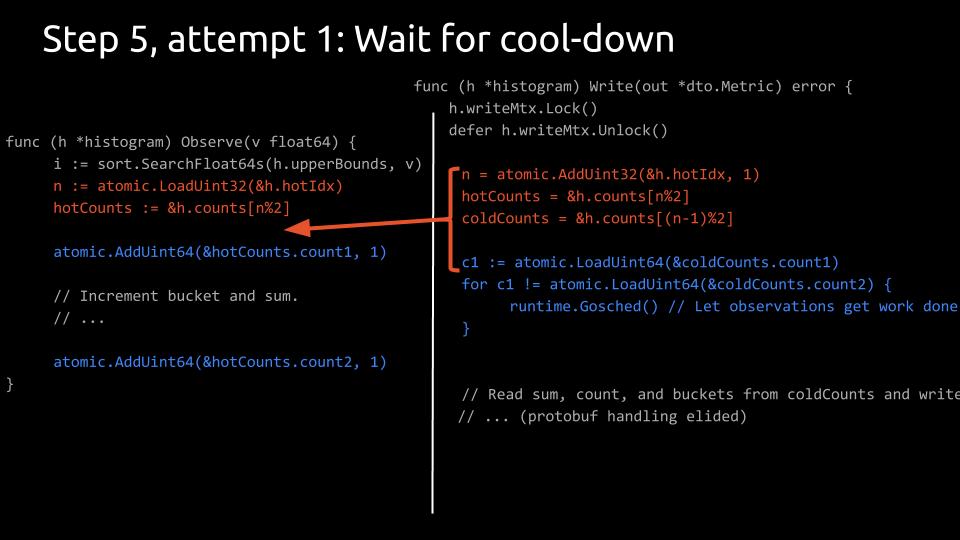

Once that was settled, he decided to look at an attempt to wait for the ongoing observations to finish, here called “cool-down.” This is where the ordering guarantee from the memory model kicks in: that atomic operations are guaranteed to happen in the order that you put them into your code.

Rabenstein used the same code as before, but he extended his counts to have the total number of observations there twice: “count1” and “count2.” Each observation starts with incrementing “count1” and ends with incrementing “count2.” If they are both the same, no observation is ongoing; if they are still different, there are ongoing observations.

The for-loop in the write method waits for “count1” and “count2” to become equal. If they aren’t, it’s important to tell the scheduler to do work. If you don’t, and you only have one core, this goroutine will never let any other goroutine get work done and the for-loop will spin forever.
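A sketch of this first cool-down attempt follows. It is intentionally the flawed version that the article goes on to take apart, so it should not be used as is. The names are hypothetical, and a single `observed` field stands in for the buckets and the sum.

```go
package main

import (
	"fmt"
	"runtime"
	"sync/atomic"
)

type histogramCounts struct {
	count1   uint64 // incremented at the start of each observation
	count2   uint64 // incremented at the end of each observation
	observed uint64 // stands in for the buckets and the sum
}

type histogram struct {
	counts [2]histogramCounts
	hotIdx uint32
}

func (h *histogram) observe() {
	hot := &h.counts[atomic.LoadUint32(&h.hotIdx)%2]
	atomic.AddUint64(&hot.count1, 1) // observation starts
	atomic.AddUint64(&hot.observed, 1)
	atomic.AddUint64(&hot.count2, 1) // observation ends
}

// swapAndCoolDown swaps hot and cold and waits for the cold counts to
// settle. FLAWED: an observation may load hotIdx before the swap and
// increment count1 only after c1 has been read.
func (h *histogram) swapAndCoolDown() *histogramCounts {
	idx := atomic.AddUint32(&h.hotIdx, 1)
	cold := &h.counts[(idx+1)%2]
	c1 := atomic.LoadUint64(&cold.count1)
	for c1 != atomic.LoadUint64(&cold.count2) {
		// Yield so that pending observations can run, even on one core.
		runtime.Gosched()
	}
	return cold
}

func main() {
	h := &histogram{}
	for i := 0; i < 3; i++ {
		h.observe()
	}
	cold := h.swapAndCoolDown()
	fmt.Println(cold.count1, cold.count2, cold.observed)
}
```

Run from a single goroutine, as in `main`, the loop exits immediately. The trouble only shows up when `observe` and `swapAndCoolDown` interleave.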

Rabenstein wrote a unit test to do this a million times and learned that the code works most of the time – which is not good enough.

Rabenstein said the bug is a nice example of where step-by-step debugging is completely useless because it’s a concurrency problem.

The issue? The left and the right part could interrupt each other at any time. The unhappy path starts if the observe method is interrupted after the two orange lines, above left. In this case, the next thing that happens is the execution of the write method up to and including the atomic read of “count1” into the “c1” variable (the first blue line, as marked by the orange bracket and arrow). The counts that the observe method is still acting on are now declared cold, and the write method is expected to wait for the observe method to finish.

From that point, there are two possible courses of events:

In one scenario, the rest of the observe method runs to completion before the write method continues. The remainder of the write method then compares the old “count1” value, already stored in “c1,” with the new “count2” value. The latter has been incremented, while the former has not. Therefore, the “for” loop in the write method will spin forever.

The other course of events is even worse: Here, only the next line of the observe method is executed (incrementing “count1”). Then, both methods happen to run concurrently so that the “for” loop in the write method is entered before the observe method terminates. The “for” loop will immediately exit because the old value of “count1” is equal to the current value of “count2.” Now the write method will read from the cold counts while the observe method is still modifying them. Exactly what you wanted to avoid!

Additional Attempts

Regarding his next attempt, Rabenstein noted you can’t increment two numbers atomically, but you can put two numbers into one integer.

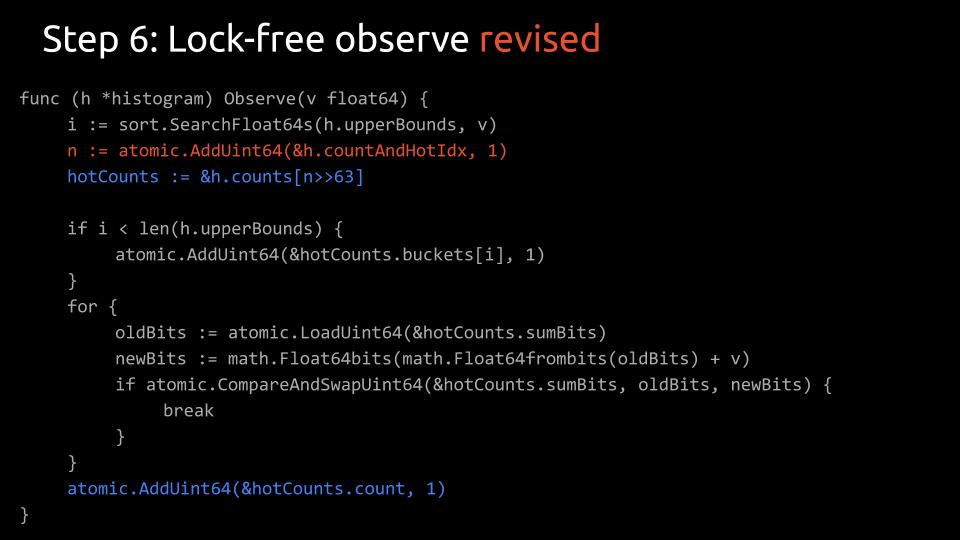

“63 bits ought to be enough for anybody,” he explained. You can use the least significant 63 bits of a uint64 for the count of observations, and use the most significant bit as the index of the hot counts struct.

(Note: He started this with the least significant bit as the marker, but in a pull request on GitHub, @pascaldekloe pointed out that it’s much easier using the most significant bit.)

“countAndHotIdx” is new here. You put two things into one variable and then you can do atomic operations on it.

This is the revised observe. You increment this “countAndHotIdx” by one, which increments the count of observations (in the least significant 63 bits) while leaving the most significant bit intact. At the same time you read out the new value of “countAndHotIdx”. By bit shifting this value 63 bits to the right, you can find out which of the two counts is the hot one.

What was previously in “count1” is now in the least significant 63 bits of “countAndHotIdx”, and “count2” is now simply called “count” again. It is incremented in the last (blue) line of the observe method.

In this way, you can read one number (a very small one, namely the single bit that is the index of the hot counts) and increment another number (the remaining 63 bits) in one atomic operation.
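The bit packing itself is plain integer arithmetic and can be tried without any concurrency. In this sketch, `hotBit` and `unpack` are hypothetical names.

```go
package main

import "fmt"

// hotBit is the most significant bit of a uint64.
const hotBit = 1 << 63

// unpack splits a packed value into the hot index (top bit) and the
// observation count (lower 63 bits).
func unpack(n uint64) (hotIdx, count uint64) {
	return n >> 63, n &^ hotBit
}

func main() {
	var n uint64

	n += 3 // three observations: the count grows, the top bit is untouched
	fmt.Println(unpack(n))

	n += hotBit // a swap: the top bit flips, the count is untouched
	fmt.Println(unpack(n))

	n++ // one more observation, now on the other counts
	fmt.Println(unpack(n))

	n += hotBit // the next swap overflows the top bit back to zero
	fmt.Println(unpack(n))
}
```

In the concurrent version the additions are done with a single atomic add, whose return value delivers both the hot index and the count at once.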

Next, the write method has to be revised.

First you increment “countAndHotIdx,” Rabenstein explained, not simply by one, but by one left-shifted by 63 bits. That flips the most significant bit of “countAndHotIdx” and leaves the other 63 bits untouched. And at the same time, you again read out the new value, of which the least significant 63 bits are your count now. The most significant bit tells you which counts are hot and which are cold after the swap.

Then you wait for the count in the cold counts to be the same as the count you read from “countAndHotIdx.”
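Putting the revised observe and write methods together gives roughly the following. This is a condensed sketch written from the description in the text, with hypothetical helpers (`newHistogram`, `addFloat`, `runDemo`) and explicit bucket indices instead of bucket boundaries.

```go
package main

import (
	"fmt"
	"math"
	"runtime"
	"sync"
	"sync/atomic"
)

// Assumes a 64-bit platform; on 32-bit platforms the atomically accessed
// fields additionally need guaranteed 64-bit alignment.
type histogramCounts struct {
	sumBits uint64 // bit pattern of the float64 sum
	count   uint64 // observations completed on these counts
	buckets []uint64
}

type histogram struct {
	// Most significant bit: index of the hot counts.
	// Lower 63 bits: number of observations started so far.
	countAndHotIdx uint64
	counts         [2]histogramCounts
	writeMtx       sync.Mutex // serializes calls to write
}

func newHistogram(numBuckets int) *histogram {
	h := &histogram{}
	for i := range h.counts {
		h.counts[i].buckets = make([]uint64, numBuckets)
	}
	return h
}

func addFloat(bits *uint64, v float64) {
	for {
		old := atomic.LoadUint64(bits)
		updated := math.Float64bits(math.Float64frombits(old) + v)
		if atomic.CompareAndSwapUint64(bits, old, updated) {
			return
		}
	}
}

func (h *histogram) observe(bucket int, v float64) {
	// One atomic step: count the observation and learn which counts are hot.
	n := atomic.AddUint64(&h.countAndHotIdx, 1)
	hot := &h.counts[n>>63]
	atomic.AddUint64(&hot.buckets[bucket], 1)
	addFloat(&hot.sumBits, v)
	atomic.AddUint64(&hot.count, 1) // last: marks the observation as done
}

func (h *histogram) write() (count uint64, sum float64, buckets []uint64) {
	h.writeMtx.Lock()
	defer h.writeMtx.Unlock()

	// Flip the top bit and read the number of started observations.
	n := atomic.AddUint64(&h.countAndHotIdx, 1<<63)
	count = n & (1<<63 - 1)
	hot, cold := &h.counts[n>>63], &h.counts[(^n)>>63]

	// Wait until every started observation has completed on the cold counts.
	for count != atomic.LoadUint64(&cold.count) {
		runtime.Gosched()
	}

	// Read the cold counts, merge them into the hot counts, reset them.
	sum = math.Float64frombits(atomic.LoadUint64(&cold.sumBits))
	buckets = make([]uint64, len(cold.buckets))
	atomic.AddUint64(&hot.count, count)
	atomic.StoreUint64(&cold.count, 0)
	addFloat(&hot.sumBits, sum)
	atomic.StoreUint64(&cold.sumBits, 0)
	for i := range cold.buckets {
		buckets[i] = atomic.LoadUint64(&cold.buckets[i])
		atomic.AddUint64(&hot.buckets[i], buckets[i])
		atomic.StoreUint64(&cold.buckets[i], 0)
	}
	return count, sum, buckets
}

// runDemo observes concurrently, with some writes racing in between.
func runDemo(observations int) (uint64, float64, []uint64) {
	h := newHistogram(2)
	var wg sync.WaitGroup
	for i := 0; i < observations; i++ {
		wg.Add(1)
		go func(i int) {
			defer wg.Done()
			h.observe(i%2, 0.5)
			if i%100 == 0 {
				h.write()
			}
		}(i)
	}
	wg.Wait()
	return h.write()
}

func main() {
	fmt.Println(runDemo(1000))
}
```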

Does it work?

Yes! It worked in his unit test where he did this a million times. It worked in practice.

Except when it didn’t. After a while, somebody filed an issue again . . .

Finding the Bug

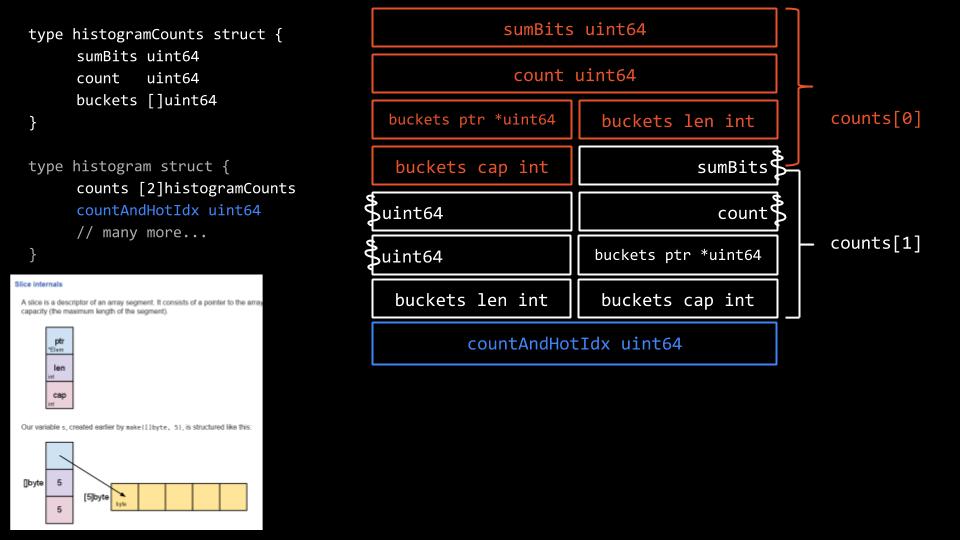

The bug only showed itself on 32-bit platforms. That is related to a known issue in the atomic package. “The variables that you act on atomically have to be 64-bit aligned, even on 32-bit platforms,” he explained.

He thought he’d done it correctly, since he had intentionally put everything that is accessed atomically at the beginning of the struct. But somehow it didn’t work.

The next step he took was to go into the details and find out how things are actually laid out.

Here, you have the counts – sumBits uint64 and count uint64 – and the slice for the buckets. But what does a Go slice look like internally? It consists of three things: a pointer to the underlying array, and two integers – one for the length and one for the capacity of the slice. On a 64-bit platform, all of these have a size of 64 bits. “Therefore, everything is nicely aligned,” he noted.

This is how it looks on a 32-bit platform. “Unfortunately, here the pointers have a size of 32 bits, and the integers for length and capacity are 32 bits, too,” he said. “There are three things in a slice, each 32 bits wide, and three is an odd number. We end up with the second slice being misaligned.”
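The arithmetic behind the misalignment can be worked through in a few lines. The sketch below computes the struct size by hand for a given word size instead of asking the compiler, and it assumes that `uint64` fields only need word alignment on 32-bit platforms, so no padding is added after the slice header. The function names are hypothetical.

```go
package main

import "fmt"

// countsSize returns the size in bytes of a struct made of two uint64
// fields followed by a slice header, for the given word size in bytes.
func countsSize(wordSize int) int {
	const uint64Size = 8
	sliceHeader := 3 * wordSize // pointer, length, capacity
	return 2*uint64Size + sliceHeader
}

// secondElemAligned reports whether the second element of a two-element
// array starts on a 64-bit boundary, given that the first element does.
func secondElemAligned(wordSize int) bool {
	return countsSize(wordSize)%8 == 0
}

func main() {
	for _, wordSize := range []int{8, 4} {
		fmt.Printf("%d-bit platform: struct size %d bytes, second element 64-bit aligned: %v\n",
			wordSize*8, countsSize(wordSize), secondElemAligned(wordSize))
	}
}
```

With 8-byte words the struct is 40 bytes, a multiple of 8. With 4-byte words it is 28 bytes, so the second array element, and with it the atomically accessed fields at its start, begins 4 bytes off a 64-bit boundary.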

Problem Solved

How did Rabenstein fix the issue? He could have tried to insert a field for padding but decided that was not robust enough.

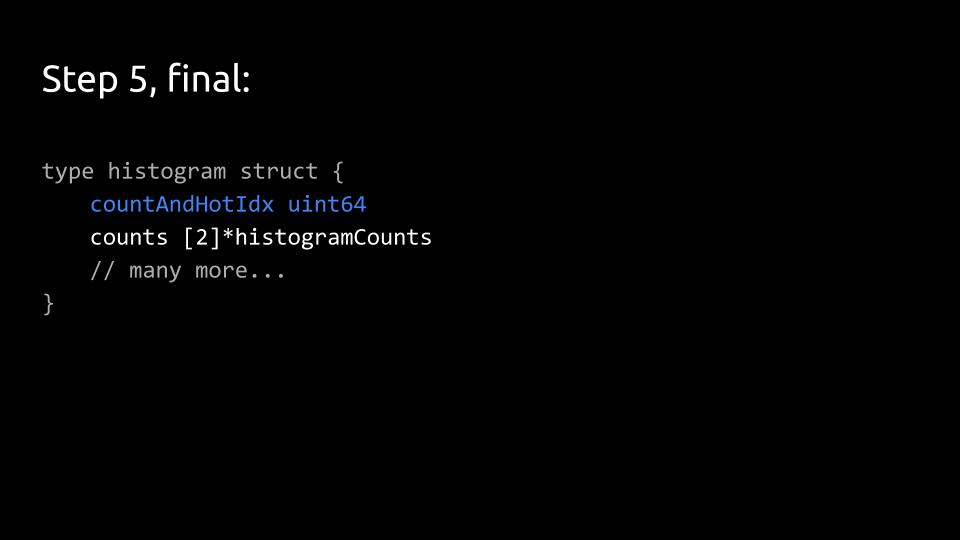

Instead, he made a tiny change: “You just turn the array of two ‘histogramCounts’ into an array of two pointers to a ‘histogramCounts,’ because then the ‘histogramCounts’ are allocated on the heap, automatically 64-bit aligned.”

It’s ever-so-slightly less performant, he said, but it’s safer.
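In code, the change might look like the sketch below. The constructor `newHistogram` and the check `atomicsAligned` are hypothetical helpers added for illustration. The check relies on Go's documented guarantee that the first word of an allocated struct is 64-bit aligned.

```go
package main

import (
	"fmt"
	"unsafe"
)

type histogramCounts struct {
	sumBits uint64
	count   uint64
	buckets []uint64
}

type histogram struct {
	countAndHotIdx uint64
	// Pointers instead of values: each histogramCounts is allocated
	// separately, so its first word is 64-bit aligned.
	counts [2]*histogramCounts
}

func newHistogram(numBuckets int) *histogram {
	return &histogram{
		counts: [2]*histogramCounts{
			&histogramCounts{buckets: make([]uint64, numBuckets)},
			&histogramCounts{buckets: make([]uint64, numBuckets)},
		},
	}
}

// atomicsAligned reports whether every atomically accessed uint64 field
// sits on a 64-bit boundary.
func (h *histogram) atomicsAligned() bool {
	ptrs := []unsafe.Pointer{unsafe.Pointer(&h.countAndHotIdx)}
	for _, c := range h.counts {
		ptrs = append(ptrs, unsafe.Pointer(&c.sumBits), unsafe.Pointer(&c.count))
	}
	for _, p := range ptrs {
		if uintptr(p)%8 != 0 {
			return false
		}
	}
	return true
}

func main() {
	h := newHistogram(3)
	fmt.Println(h.atomicsAligned())
}
```

The cost is one extra pointer dereference per access, which fits the remark that the change is only very slightly slower.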

His final code – with a lot of inline comments – is posted here.

“Again, don’t be too clever,” he concluded. “You should not try this at home for no reason.”