![[PromCon EU Recap] 'Fixing' Remote Write](/static/assets/img/blog//RemoteWrite_2.jpg?w=752)

[PromCon EU Recap] 'Fixing' Remote Write

During a lightning talk at PromCon EU last November, Grafana Labs developer Callum Styan, who contributes to Cortex and Prometheus, talked about improvements that have been made to Prometheus remote write – the result of about six months of work.

At the start, Styan provided a brief summary of remote write basics.

He reminded the audience that remote write, if you configure it, sends all of your metrics to a system other than Prometheus. Typical reasons include long-term storage or a system that offers a different query language.

In his case, he wanted to send data to Cortex, which provides query parallelization.

The Issues

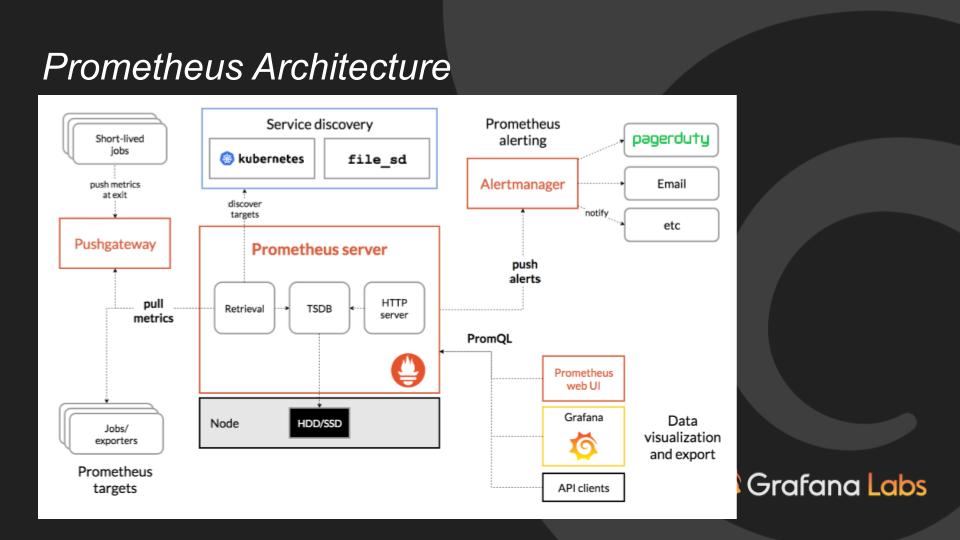

Previously, the retrieval portion of Prometheus would scrape targets (see Retrieval, above), and remote write would make a copy of every sample and buffer unsent samples in memory until it could successfully send them on to the remote write system.

That wasn’t great, Styan said: “What happens if the remote write endpoint goes down, and you continually buffer data?”

As he explained, that buffer was a fixed size, so if filling the buffer didn’t OOMkill your Prometheus, remote write would eventually just start dropping samples when the buffer filled up, which is something you don’t want either. “If this remote storage system is supposed to be for your long-term storage but you don’t end up with all your data there,” he said, “that’s not great either.”
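The failure mode he describes can be sketched with a toy model. This is only an illustration, not Prometheus code: `InMemoryQueue`, `simulate_outage`, and the capacity numbers are hypothetical names and values chosen for the example.

```python
from collections import deque


class InMemoryQueue:
    """Hypothetical stand-in for the old fixed-size remote write buffer."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.pending = deque()  # copies of samples waiting to be sent
        self.dropped = 0

    def append(self, sample):
        # Called for every scraped sample, whether or not the endpoint is up.
        if len(self.pending) >= self.capacity:
            self.dropped += 1  # buffer full: this sample never reaches remote storage
            return False
        self.pending.append(sample)
        return True


def simulate_outage(capacity, scraped):
    """Scrape `scraped` samples while the remote endpoint is down the whole time."""
    queue = InMemoryQueue(capacity)
    for i in range(scraped):
        queue.append((i, float(i)))  # (timestamp, value)
    return len(queue.pending), queue.dropped


buffered, dropped = simulate_outage(capacity=1000, scraped=1500)
print(buffered, dropped)
```

In this model, every sample scraped after the buffer fills is lost, which is the gap in long-term storage that Styan warns about.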

The Fix

Here is the way remote write works now:

It reads the same write-ahead log (WAL) that Prometheus is already generating.

“All of your data scraped, the write-ahead log is written, all that data is there until eventually something’s written to long-term storage on disk,” he said. “So we just tail the same write-ahead log that is already being written.”

If the buffer is full, it doesn’t read in more data.

“There’s still an internal fixed-size buffer,” he said. “The catch is, we don’t continue to read the write-ahead log, if the buffer is already full. So, in theory, remote write will no longer OOM your Prometheus.”
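A minimal sketch of that idea, assuming a simplified model in which the WAL is just a Python list: the reader stops advancing while its buffer is full, so unsent data stays in the log instead of piling up in memory. `WalTailer` and its methods are hypothetical names for illustration and do not mirror the actual Prometheus implementation.

```python
class WalTailer:
    """Hypothetical reader that tails a WAL (here a list) into a bounded buffer."""

    def __init__(self, wal, capacity):
        self.wal = wal            # append-only list standing in for the on-disk WAL
        self.capacity = capacity
        self.buffer = []
        self.offset = 0           # index of the next unread WAL record

    def fill(self):
        # Read only while there is room; unread records simply stay in the WAL.
        while len(self.buffer) < self.capacity and self.offset < len(self.wal):
            self.buffer.append(self.wal[self.offset])
            self.offset += 1

    def send(self, endpoint_up):
        # On failure nothing is discarded; the batch stays buffered for a retry.
        if not endpoint_up:
            return []
        sent, self.buffer = self.buffer, []
        return sent


wal = list(range(10))
tailer = WalTailer(wal, capacity=4)

tailer.fill()
tailer.send(endpoint_up=False)    # simulated outage
tailer.fill()                     # buffer is already full, so nothing more is read
during_outage = (len(tailer.buffer), tailer.offset)

delivered = []
while tailer.offset < len(wal) or tailer.buffer:
    tailer.fill()
    delivered.extend(tailer.send(endpoint_up=True))
```

During the simulated outage the buffer stays at its capacity and the read offset does not move. Once the endpoint recovers, every record is delivered in order.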

You have to cache labels.

There is one side-effect from the changes: “When you scrape your endpoints, you get the metric name, all the possible labels, and then the value,” he said. “But in the write-ahead log format you get the labels and metric name once, and then from there on out, you just have a reference to an ID, and you have to go look at those labels.”
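The lookup he describes might look like the following sketch. The record shapes (`"series"` and `"sample"` tuples) and the `resolve` function are assumptions made for illustration; the real WAL encoding is different.

```python
def resolve(records):
    """Turn WAL-style records into fully labeled samples.

    Hypothetical record shapes:
      ("series", ref, labels)            labels appear once per series
      ("sample", ref, timestamp, value)  samples carry only the reference ID
    """
    labels_by_ref = {}  # the label cache that remote write must hold in memory
    out = []
    for record in records:
        if record[0] == "series":
            _, ref, labels = record
            labels_by_ref[ref] = labels
        else:
            _, ref, timestamp, value = record
            labels = labels_by_ref.get(ref)
            if labels is None:
                continue  # unknown reference: this sketch just skips the sample
            out.append((labels, timestamp, value))
    return out


records = [
    ("series", 1, {"__name__": "up", "job": "node"}),
    ("sample", 1, 1000, 1.0),
    ("sample", 1, 2000, 0.0),
    ("sample", 2, 2000, 5.0),  # no series record seen for ref 2
]
samples = resolve(records)
```

The cache is the extra memory cost Styan mentions: it grows with the number of active series, not with the number of unsent samples.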

“Newer versions of remote write in the happy path use more memory,” he added, “but in the worst-case scenario, it won’t crash.”

In other words, Prometheus’ write-ahead log contains all the information remote write needs to work: both scraped samples (timestamp and value) and records containing label data. By reusing the WAL, remote write essentially has an on-disk buffer of about 2h of data. This means that as long as remote write doesn’t fall too far behind, it will never lose data, and it doesn’t buffer too much in memory, which avoids OOMkill scenarios.

Additional Work

There has been more going on since these changes were made. “We’re still working on the sharding bits,” he said. “It mostly works now, other than when you restart Prometheus, it doesn’t necessarily catch up very well.”

Since the refactor discussed in this talk, additional minor improvements to remote write have been made:

- Improve accuracy of sharding calculation

- Decode WAL records in a separate goroutine

- Additional metrics for debugging

- Change cached label type to reduce memory usage

For more on Styan’s remote write work, check out his blog post, “What’s New in Prometheus 2.8: WAL-Based Remote Write.”